Introduction

For this module, my main objective is to create a 360 VR experience using Maya, Paint Tool SAI and Premiere Pro: an interactive, animated art gallery that serves mainly as a portfolio piece and as a narrative vehicle for users to explore within a VR space. A further goal of this project is to explore VR’s capabilities in storytelling.

The inspiration for this concept came from the interactive Alice in Wonderland VR experience I discussed in my earlier research proposal, more specifically its unique visual presentation, which allows users to interact with a mixed 2D and 3D space. This style, as mentioned in interviews and promotional material, was inspired by pop-up books and gives a 3D effect to 2D art pieces. The effect allows the images to ‘come to life’ and adds depth from the user’s perspective, making it feel as if the user is exploring the book’s narrative.

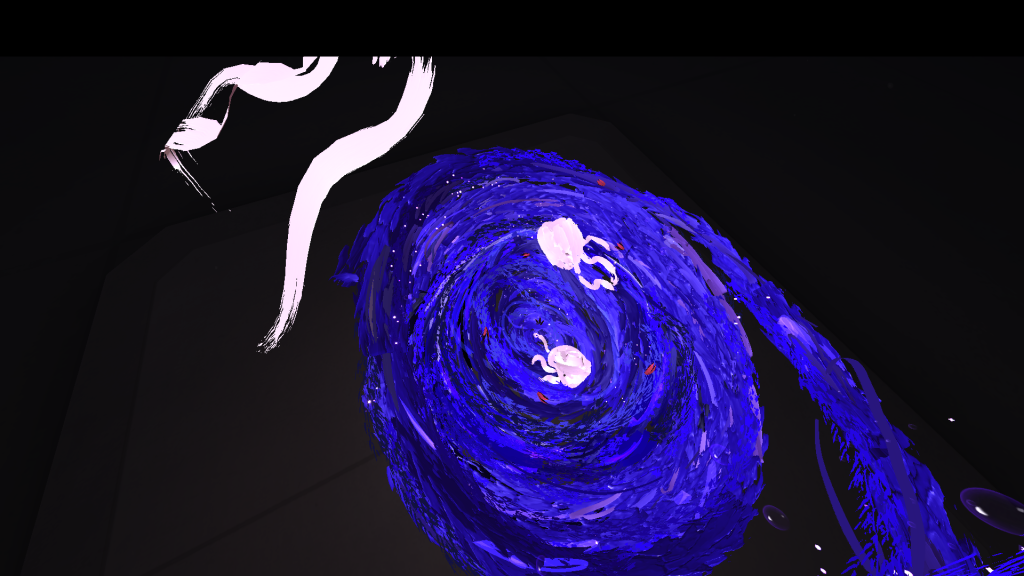

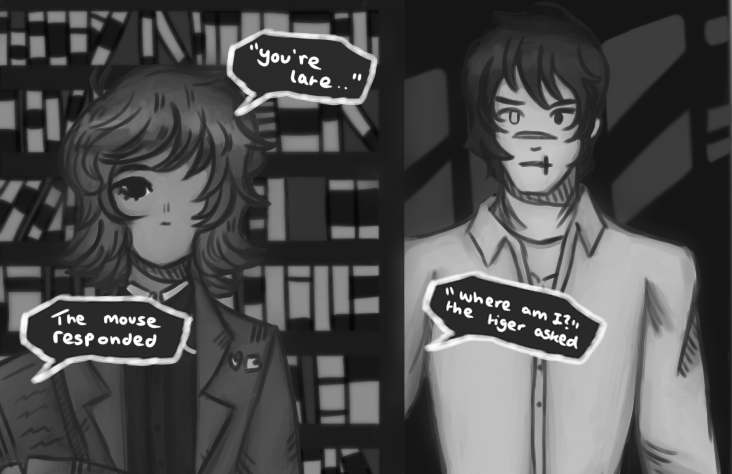

Therefore, in this project, I want to replicate this effect within the art gallery space, allowing users to weave through the world’s story. The plan is to create something similar to a pop-up book but with manga, a form of Japanese graphic novel. Before I could start creating these art pieces, I had to research different visual styles in order to establish the tone and aesthetic of the 360 experience.

Idea Generation and Research

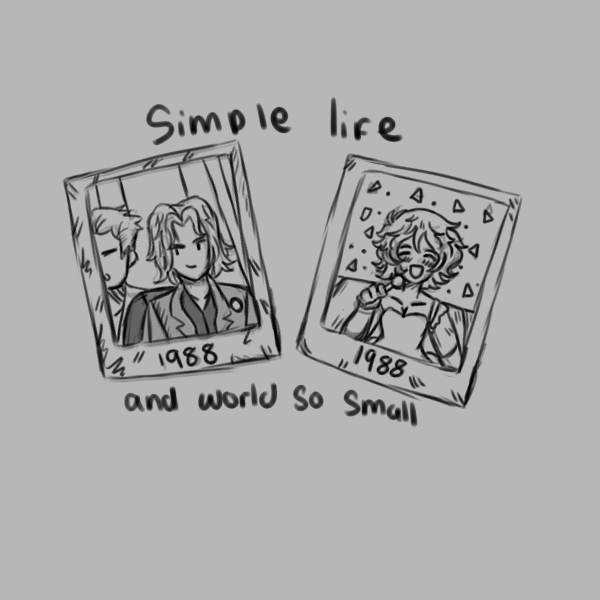

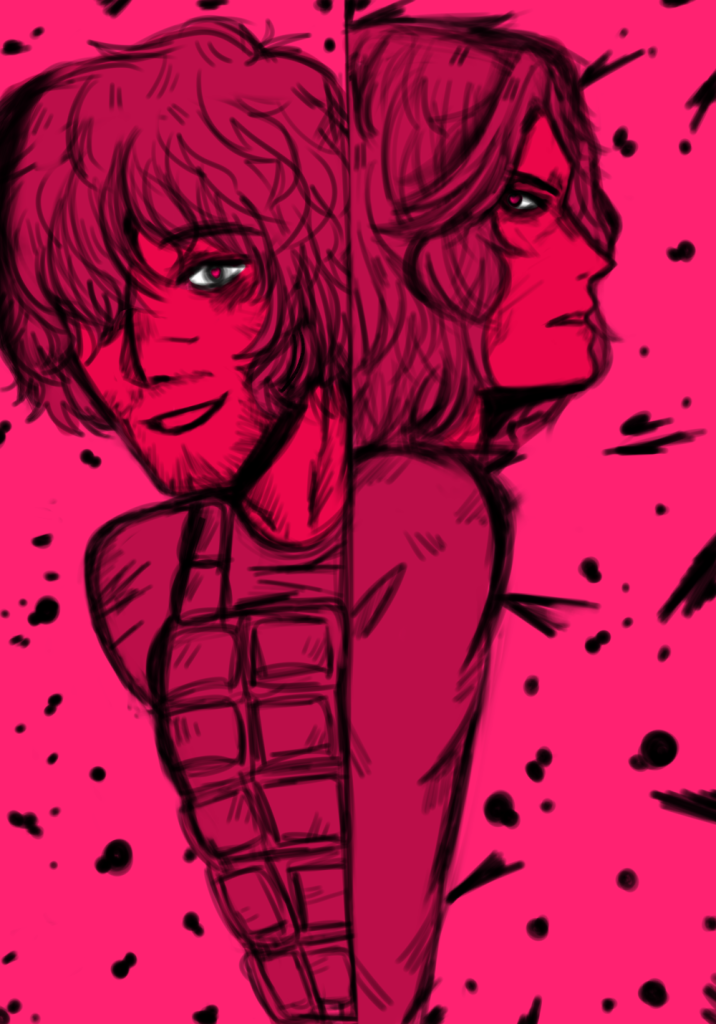

As mentioned previously, I wanted to create a similar pop-up effect using manga, so each art piece follows a similar style to keep my art consistent whilst still making each piece unique. The main goal of this collection is to show my best work as an artist whilst still forming a cohesive narrative with visual guides.

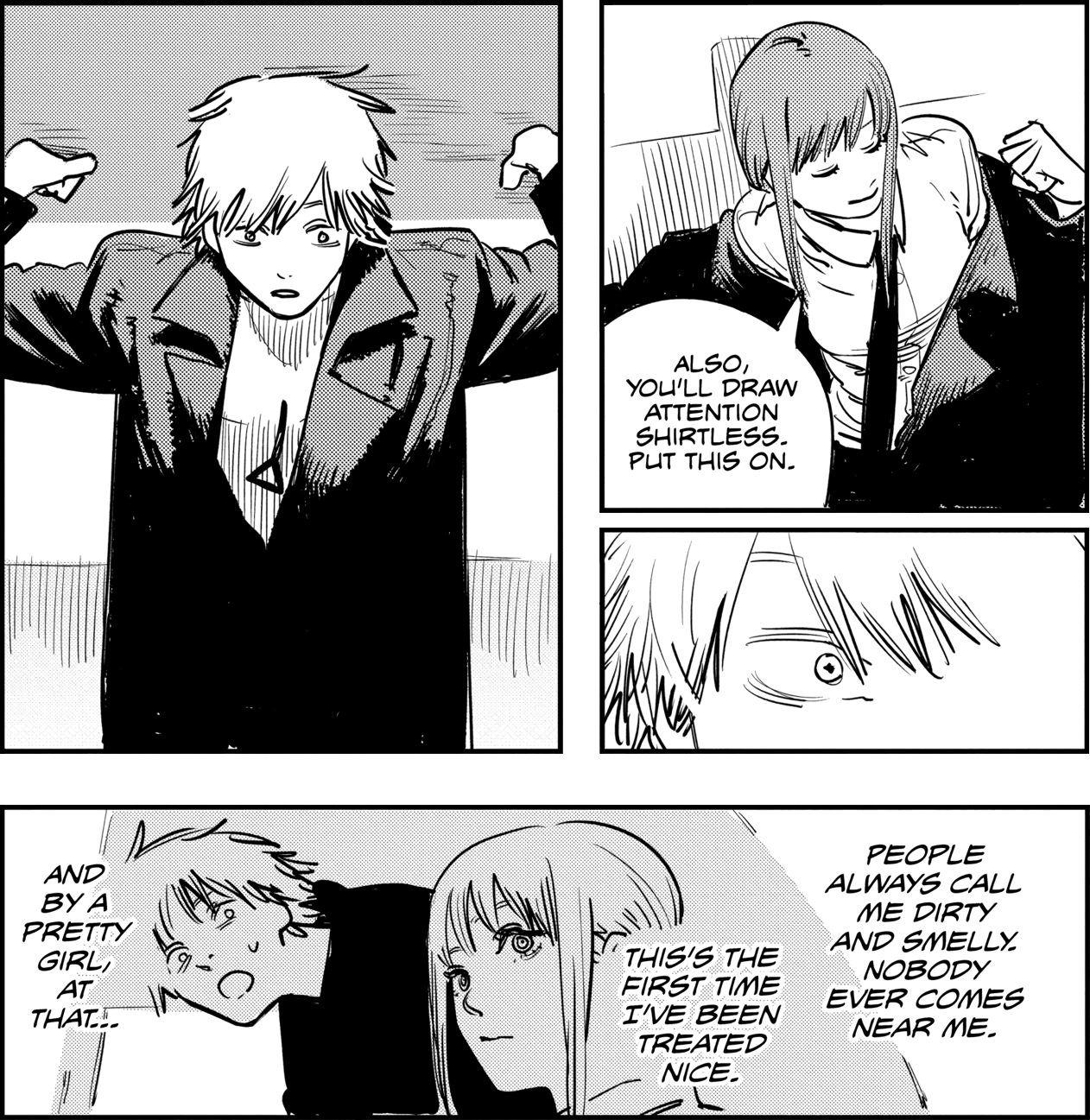

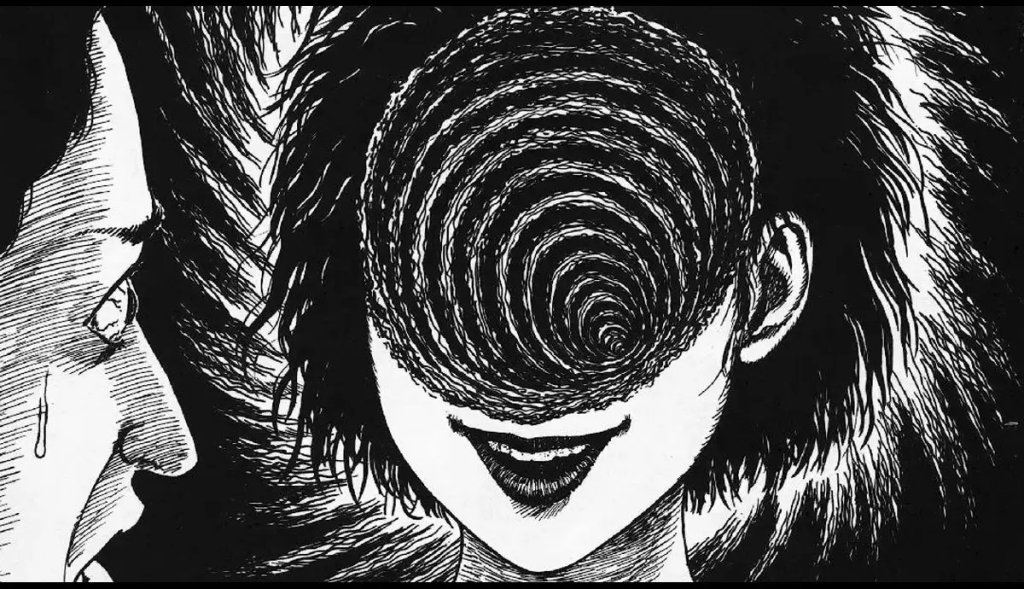

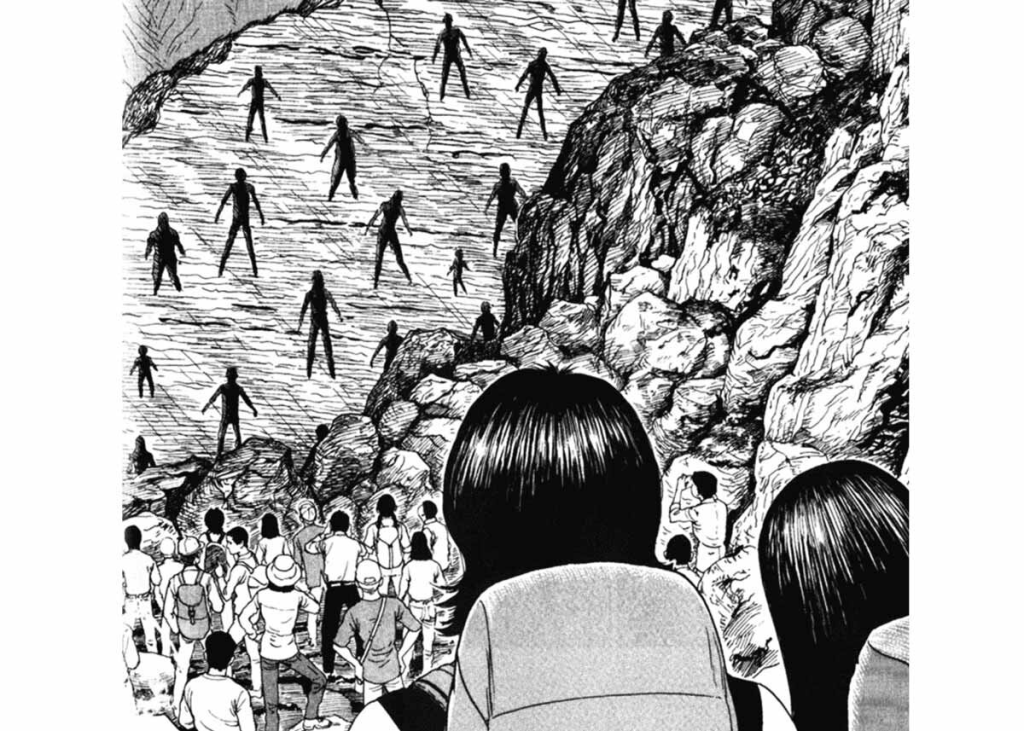

For the art style, I started by researching horror and action manga to understand the techniques they use to make each panel dynamic. Whilst my research proposal discussed manga influences and their impact on the visual design of the world and its characters, I needed to put this theory into practice, so I studied manga techniques by reading Chainsaw Man and Junji Ito’s works.

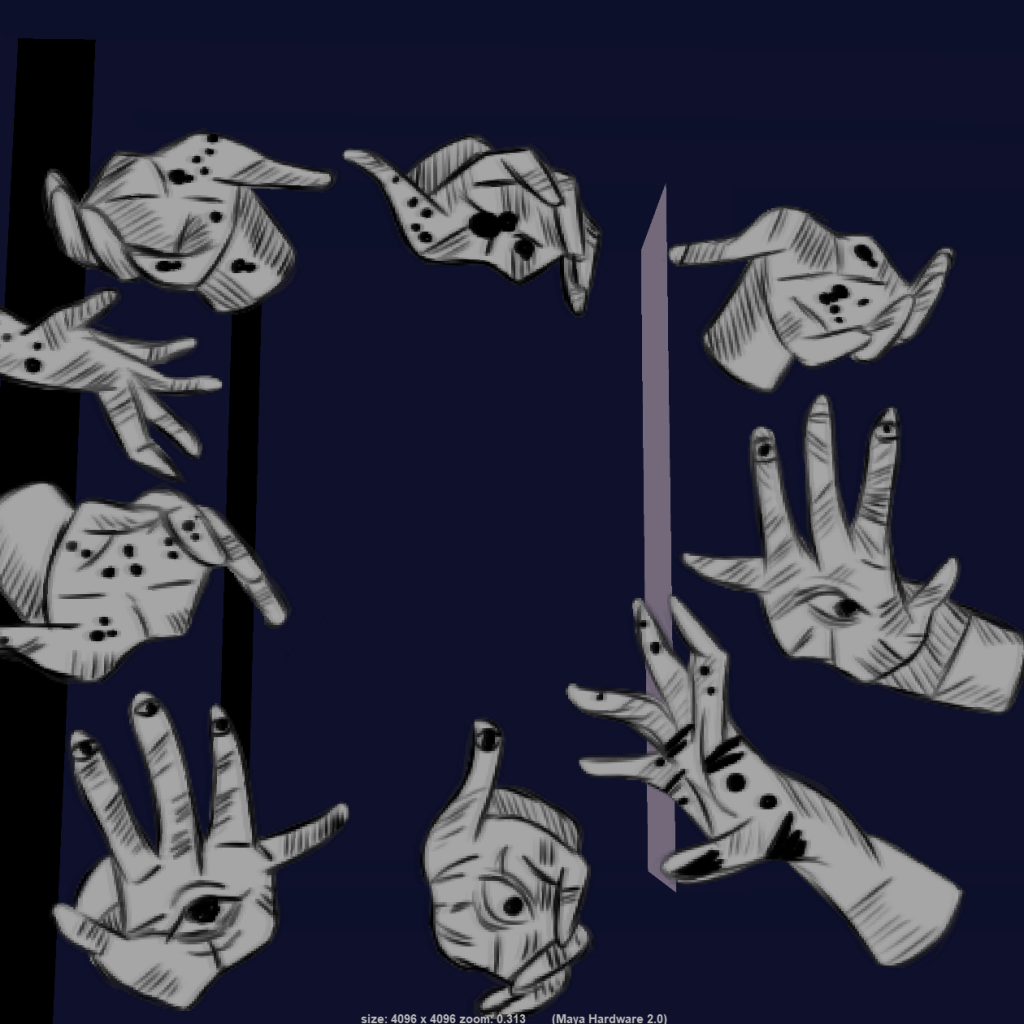

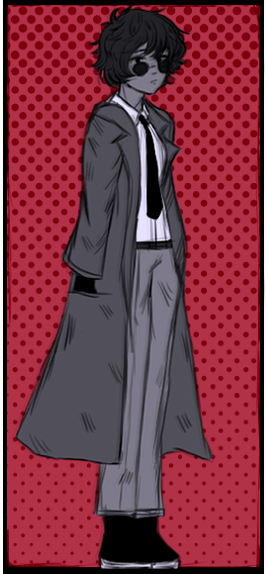

As shown here, manga panels tend to use a lot of rigid, thick lines to amplify form in clothing, expressions and dynamic action, making the panels feel more alive, especially during fight scenes. Shadows are often created with solid black, gradients and cross-hatching. This research allowed me to start experimenting with this art style by designing the main character for my VR project.

Whilst researching influences for world building, I looked at Limbus Company, a Korean horror gacha game known for its stylised art and the extensive world building in its narrative. The game is part of a series that focuses on a hopeless world and a fight for survival.

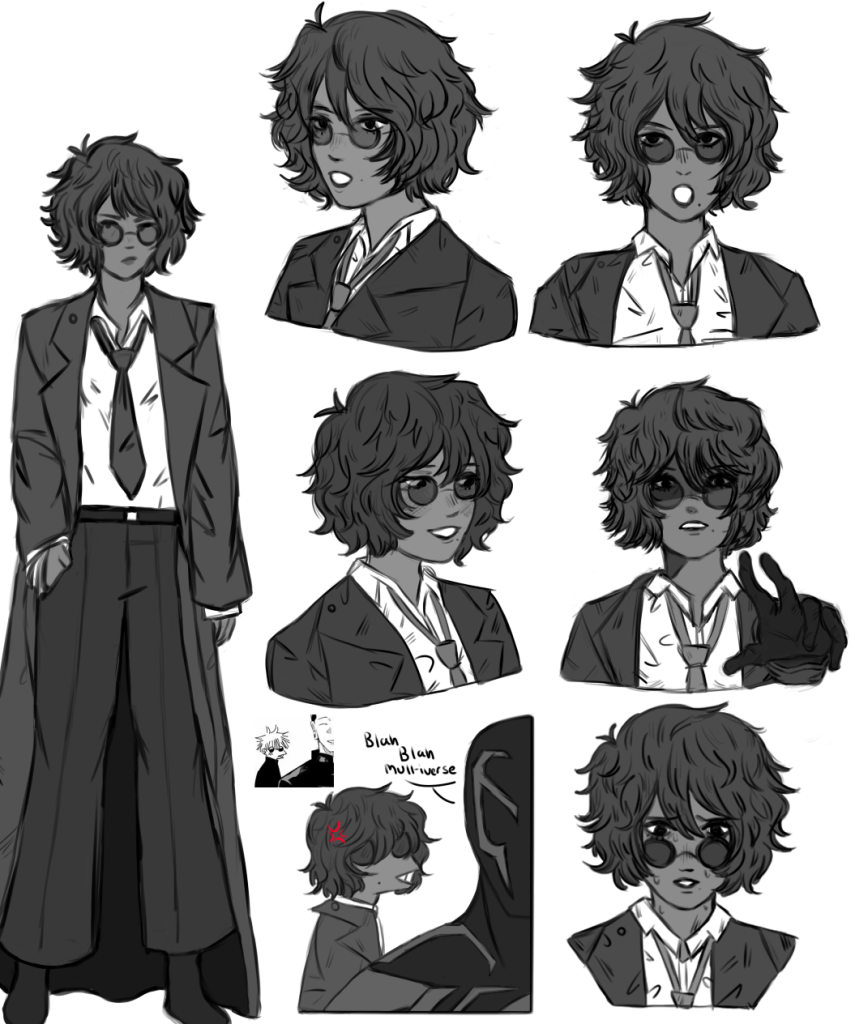

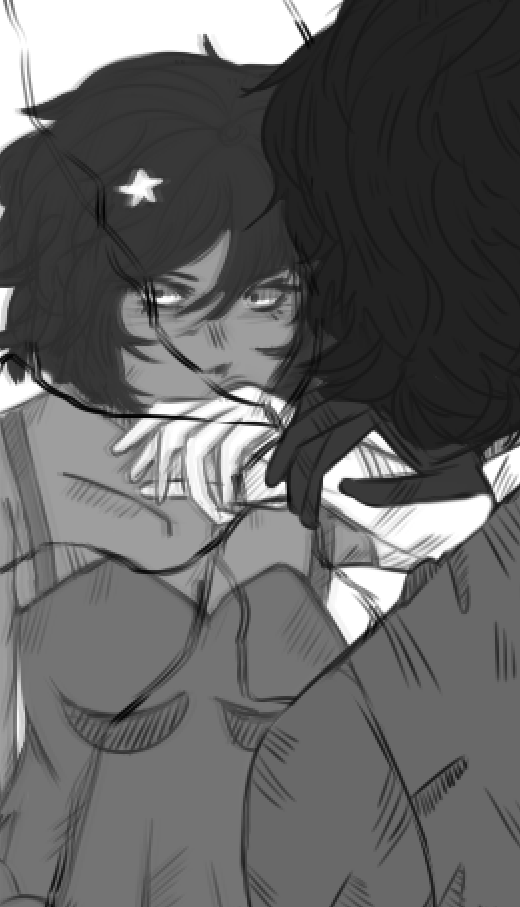

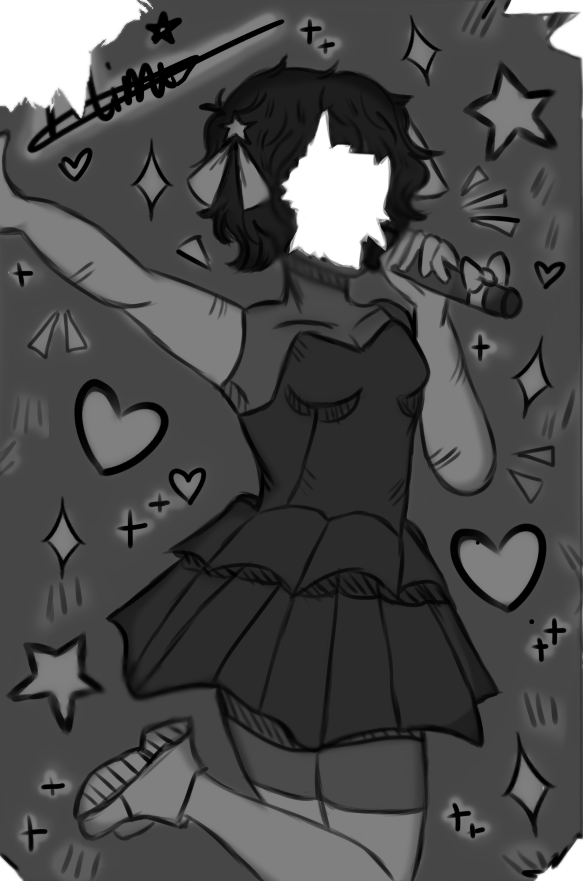

I created expression sheets mainly to convey the character’s personality visually through her expressions and her clothing. Using my research as a reference for techniques also allowed me to develop my own style of line work, adding form to elements such as the hair, facial features and clothes. Shadows were also applied to one of the expressions to amplify her emotive reaction without exaggeration.

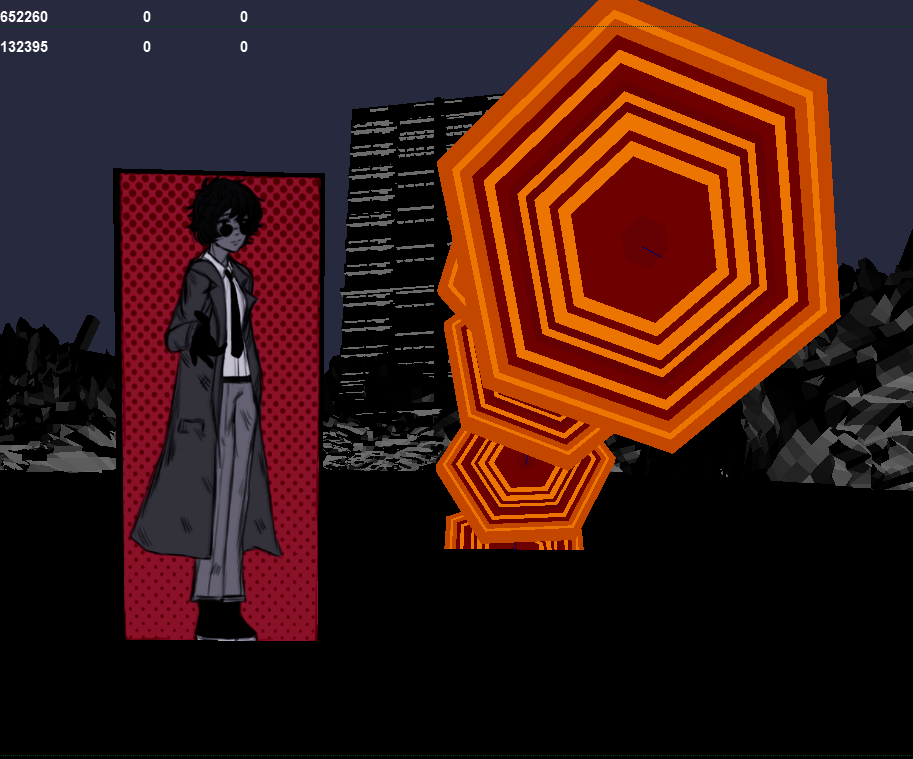

This character, Mimi, is often depicted as emotionless or calm, so when working on the expression sheet my main focus was for her expressions to hold little to no exaggeration whilst still giving her character life, including for stronger emotions such as happiness or anger. Whilst this piece served mainly as practice in the manga style, it gave me a better visual understanding of how I wanted to portray Mimi during the experience: a silent guide for the player who merely observes. Essentially, this VR experience navigates through her story and her world. Similar to Limbus Company, her world is cruel and unforgiving, so my main objective was to express how that world affected her. Even if she is the primary focus of the experience, she’s not the centre of the world; she’s merely a part of it.

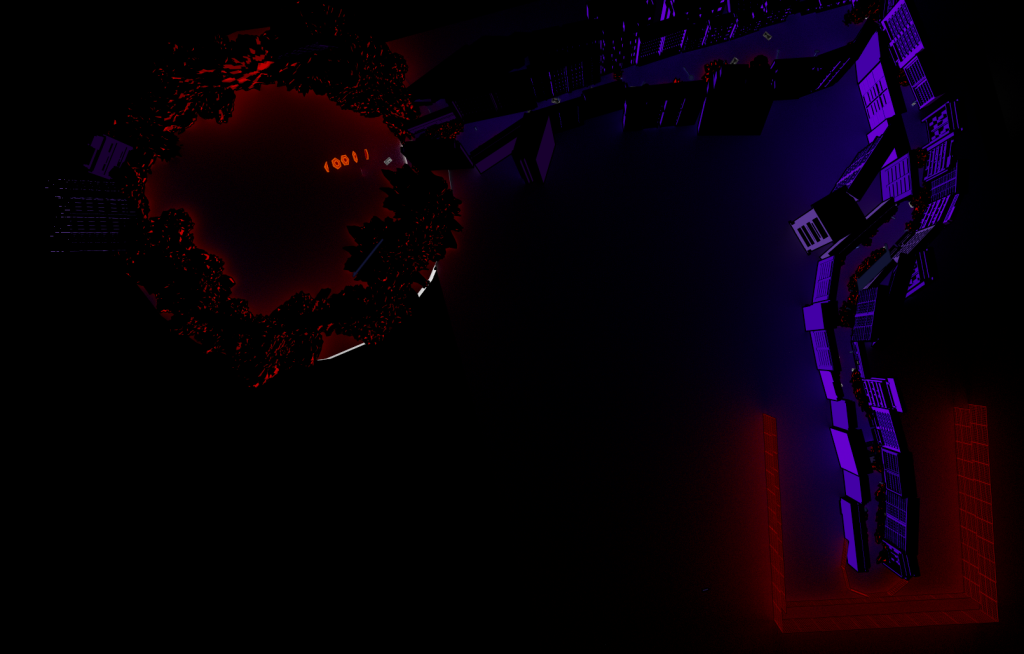

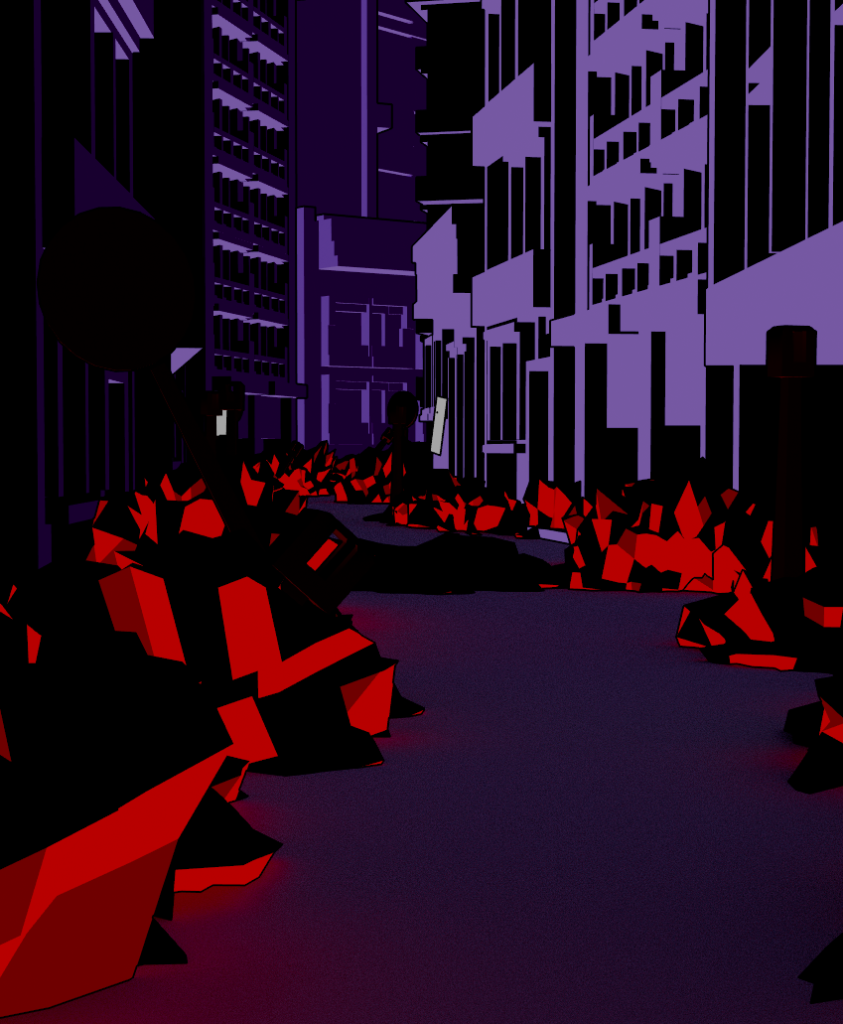

As a reference for Mimi’s world, Limbus Company is set in a dystopian city divided into sections representing the ‘wings’ of each area, each corrupted by companies that enforce destructive laws upon the section they reside in. The areas are often depicted as either clean but incredibly artificial, or as places filled with nothing but destruction that once housed a thriving population. Its cruel world and its environments show that people are barely surviving. Each area uses greys, darker tones or contrasting brighter reds to emphasize its unwelcoming nature, and some areas are even destroyed and filled with death.

Whilst I will keep gore to a minimum for the sake of user experience, this research further influenced the environment and narrative design for Mimi’s world by setting a visual tone for the project: a world that the main character is desperately trying to save but which, despite her ambitions, remains cruel, leaving her surrounded by destruction. At this point in my research, I set another objective for the environment creation: to create a striking contrast within the world’s surroundings built on the theme of Order and Chaos. This not only keeps the style of the environment consistent but also visually amplifies the main character’s struggle as she tries to ‘save’ the user from the destructive, dark world they inhabit.

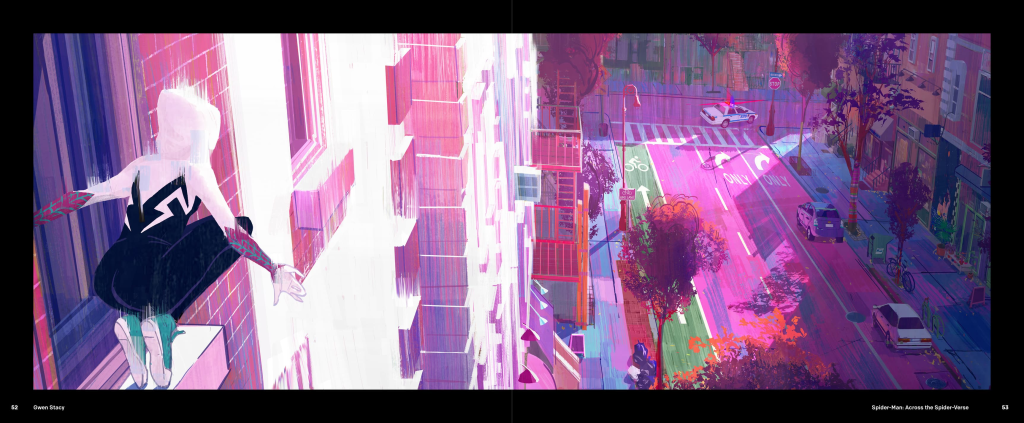

Another large inspiration for the visual world building is Spider-Man: Across the Spider-Verse, due to the distinctive comic book style of its animation, which often uses contrasting lighting, cross-hatching and halftone texturing. The film also uses different art styles to represent different universes, adding details that amplify the storytelling, such as intense emotional scenes, or that foreshadow and visually indicate a character’s struggle or mindset throughout the narrative.

For instance, in Gwen Stacy’s universe, scenes are often presented with a watercolour effect, with vibrant paint streaks that reflect the lighting and mood of the scene and a different colour scheme that creates a vibrant contrast.

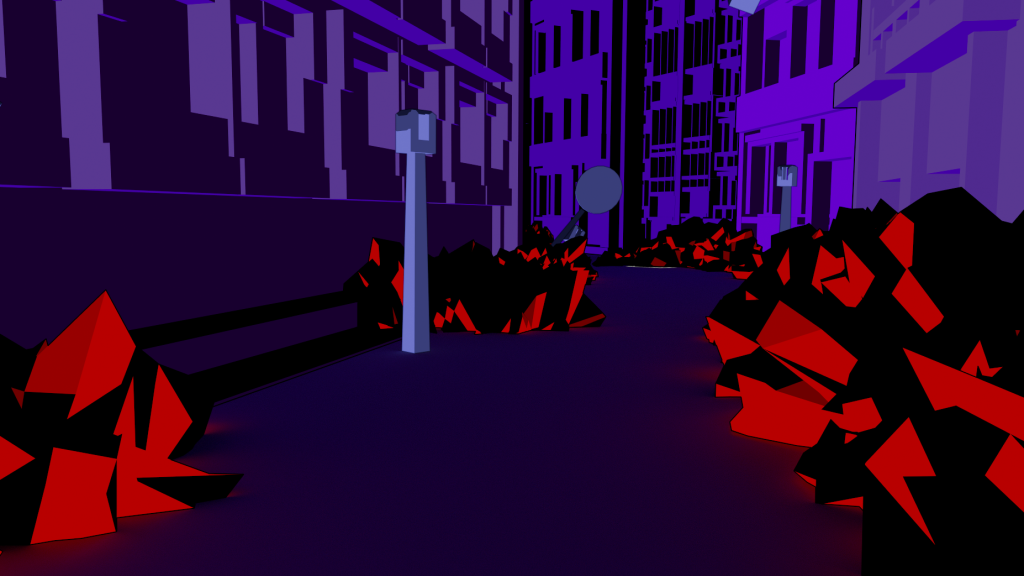

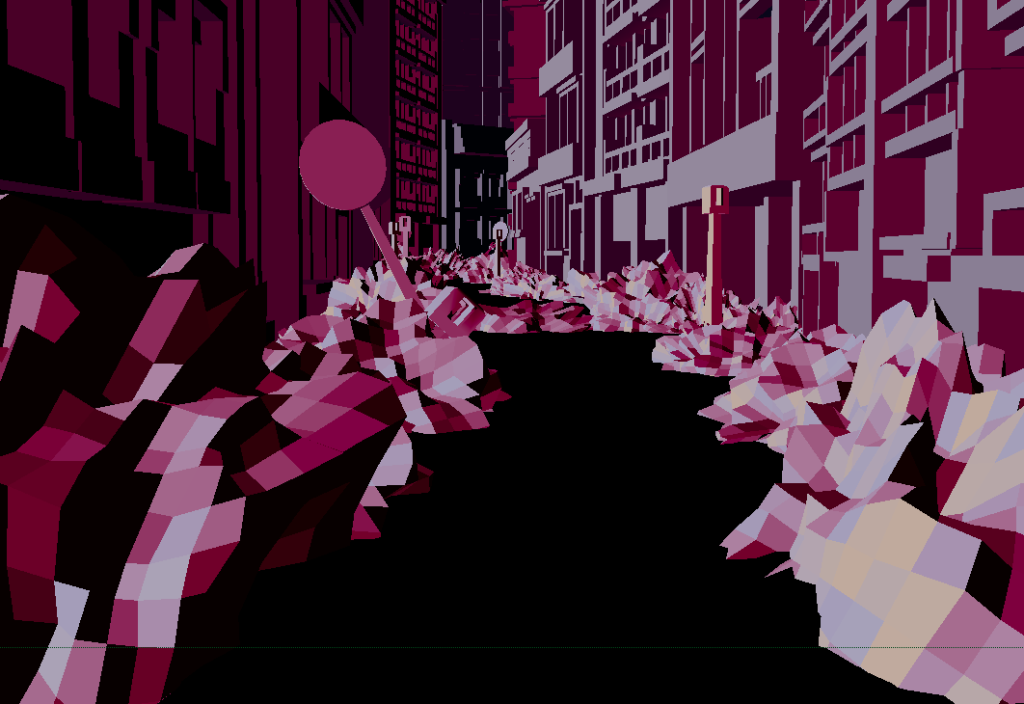

Whilst I want to show a dark world similar to Limbus Company within my VR environment, I also want to replicate the vibrance of Spider-Verse. The two are entirely different in their visual and narrative structure, so I explored the Telltale game The Wolf Among Us to find a middle ground between these two contrasting pieces.

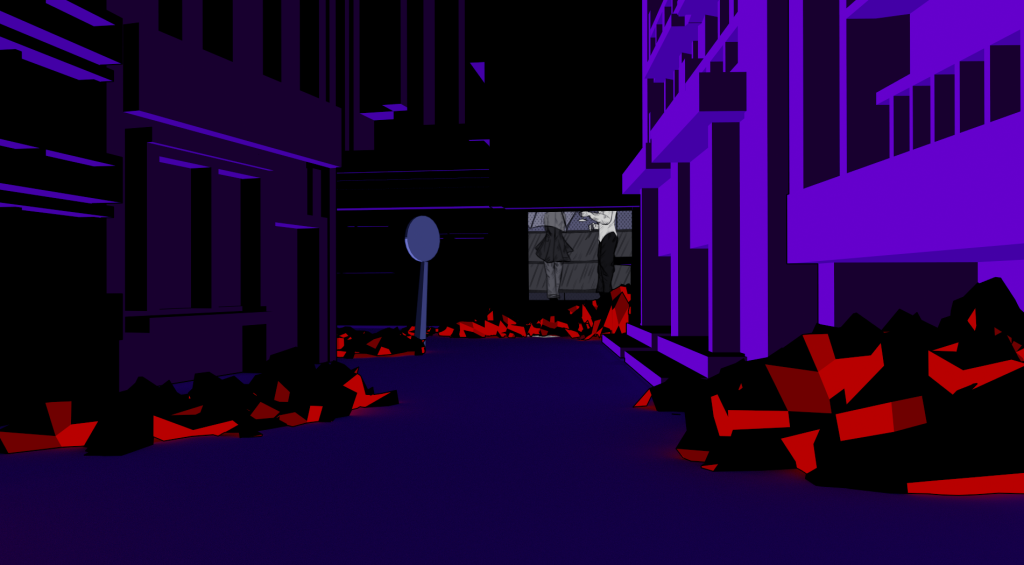

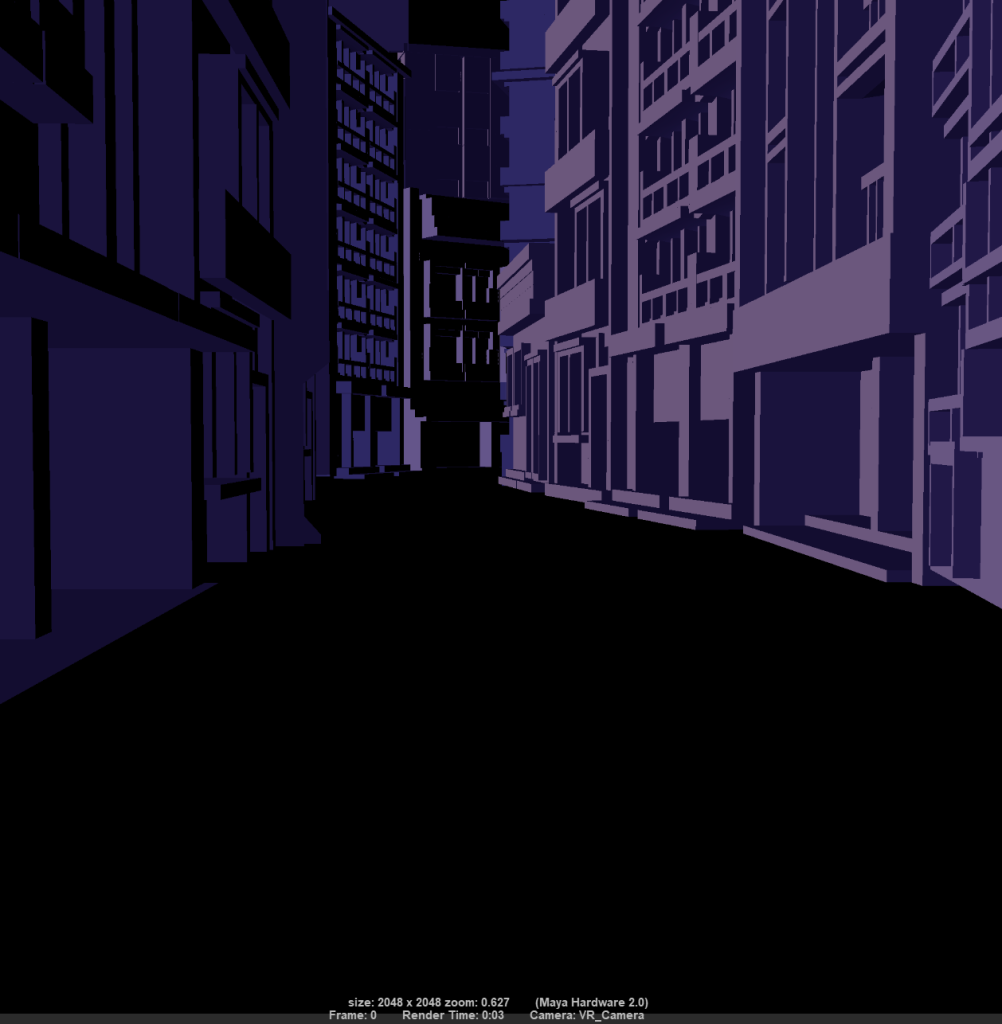

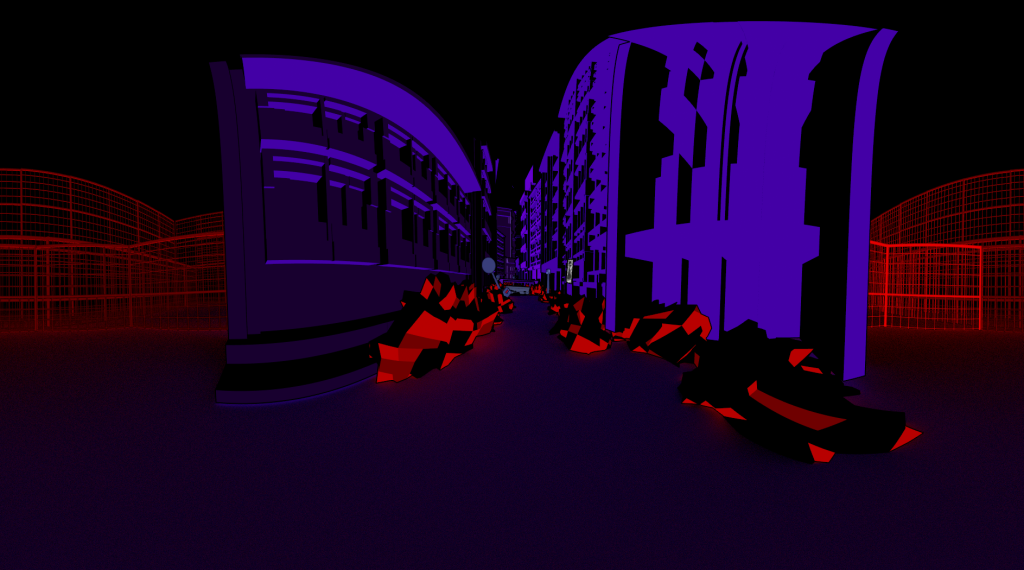

As shown, whilst the colours are vibrant, the game often uses purples and hot pinks to showcase the darker side of the city’s nightlife, with the colours also being affected by the lights and shadows in each scene. Its visual style is therefore appealing without being too cluttered, whilst also visually indicating the desired narrative tone of the scene.

The environments and characters are also drawn with thick linework to replicate the style of the comic series the game is based on.

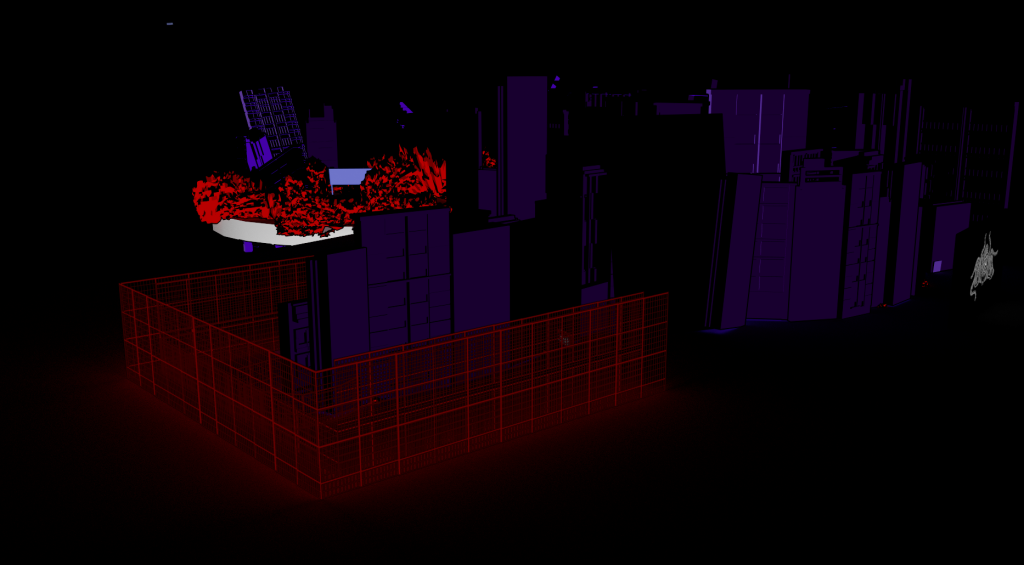

These contrasting, visually appealing colours bring the dark world to life. When I experimented with colour theory in the later stages of my Maya environment work (Transition to Arnold Rendering), the visually striking style of The Wolf Among Us served as the main inspiration for the 3D style, adding a distinct contrast between the manga panels and the area.

In terms of VR visual styles, Lies Beneath, a VR horror game, is another effective example of implementing the comic style within a dark and gritty world.

Project Management

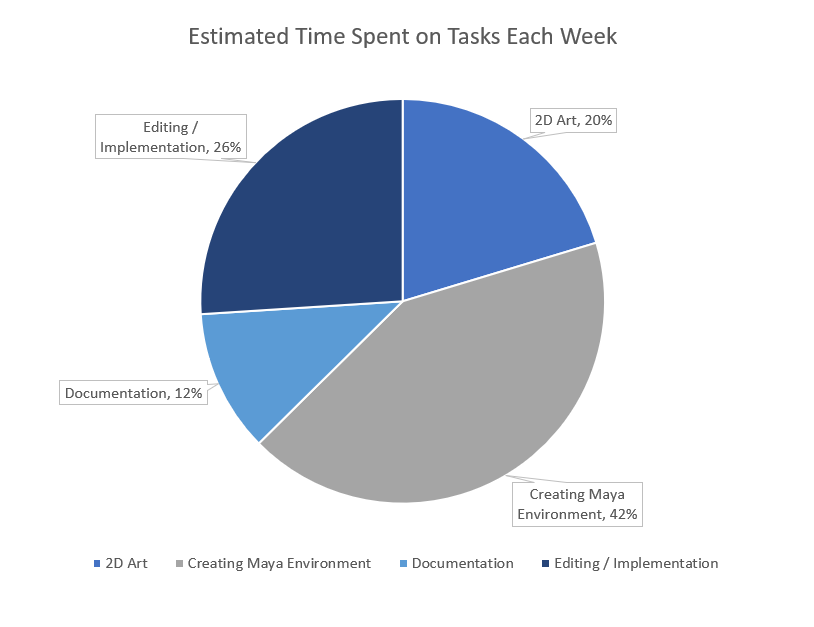

My workflow throughout the project mainly consisted of prioritising the creation of the Maya environment and the 2D art.

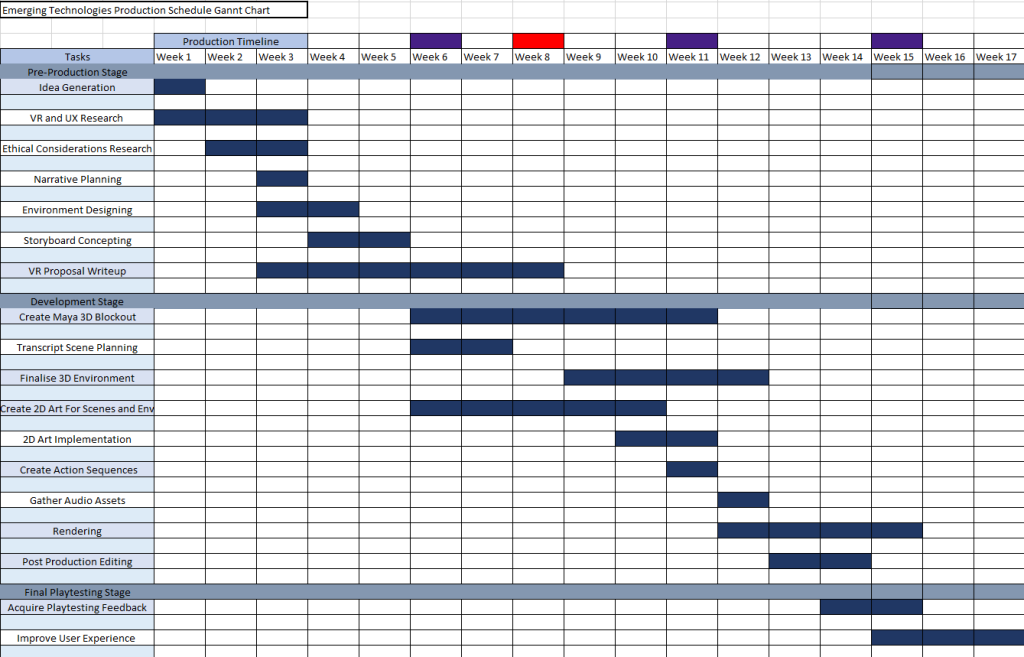

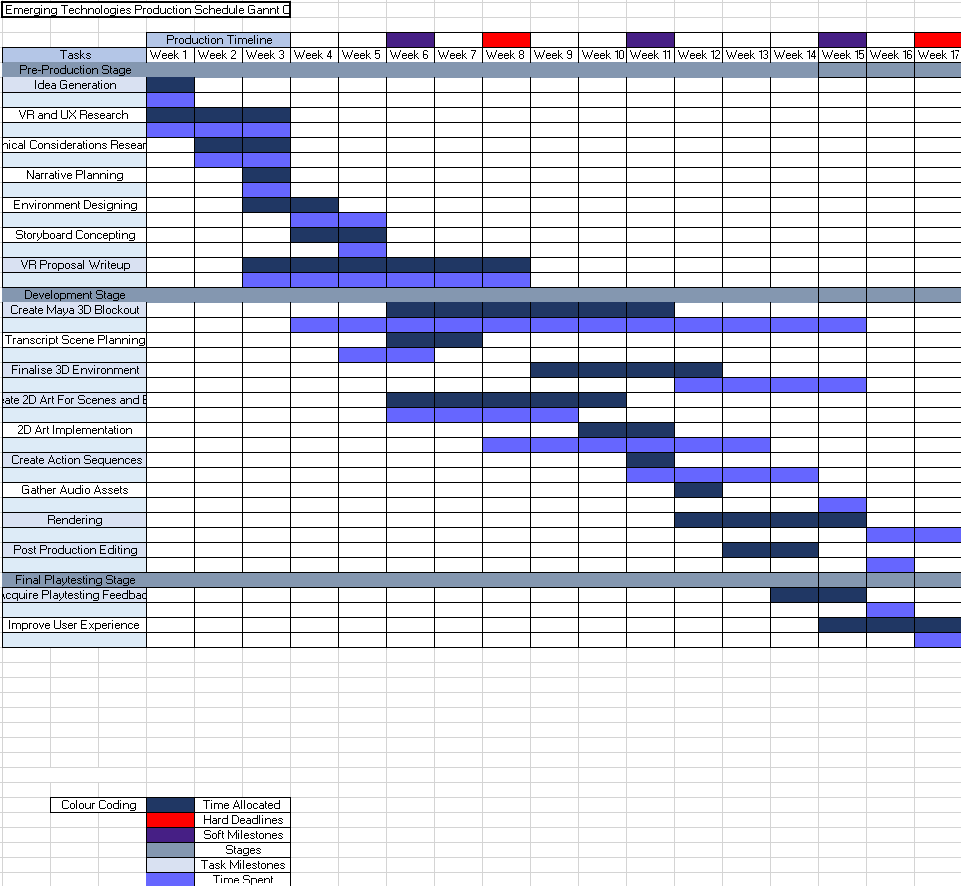

As shown in the Gantt chart, a lot of time is dedicated to milestones such as asset making, environment creation, rendering and user testing. This gives me enough time to prioritise understanding and translating 2D concepts into 3D, since my main proficiency is in character modelling rather than environment or hard-surface modelling.

The reason behind creating my own models for this project is to develop and improve my 3D modelling skills and, through this project, hopefully develop or even replicate my own style in a 3D space. Since this production is also a portfolio piece, there’s also an opportunity to be able to showcase what I learnt alongside presenting my strengths as a designer and artist.

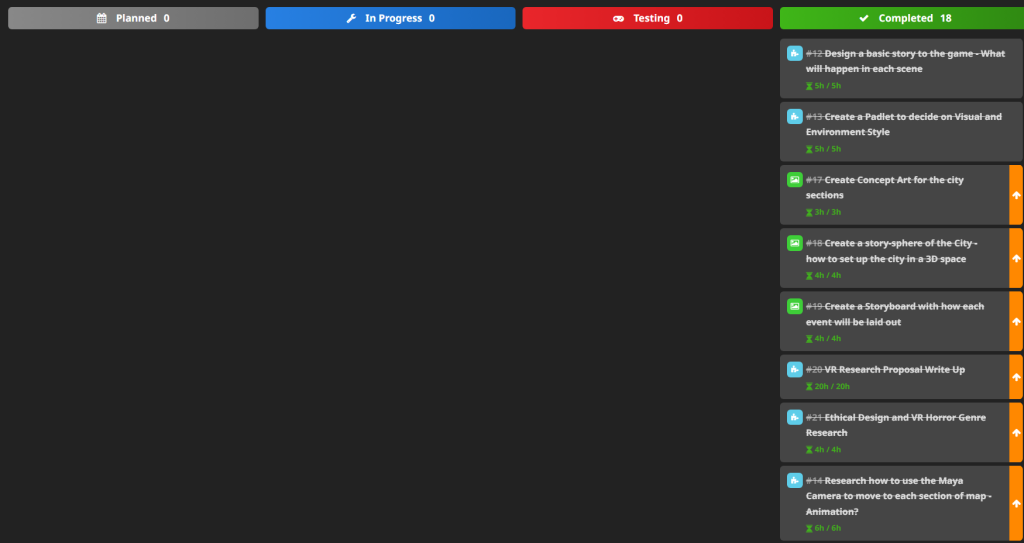

Each milestone in the Gantt chart and HacknPlan helps break down the workflow into manageable tasks for each section of the project. This way I can manage my time between creating the VR space, making 2D art for the gallery and updating my project documentation weekly within this portfolio. The ideal work schedule is 45 hours a week, split according to how much time each task needs regardless of milestone progression. The objective of this weekly schedule is to keep my time management consistent across the project, although it is only an estimate.

The main challenge I faced during the project was accounting for obstacles that could drastically change the vision of the project as a whole, whether that meant cutting smaller features or adjusting or entirely changing visual styles due to time constraints or unforeseen technical issues.

The main example, and the biggest challenge of the project, was the switch in renderer from Maya Hardware 2.0 to Arnold. Originally, the plan was to render with Maya Hardware 2.0.

At the time, this was due to how efficient the process would be: the entire experience could be rendered within 30 minutes. The materials, lighting and images were all created and optimised with this renderer in mind. Unfortunately, despite my efforts in editing and post-production, it wasn’t able to capture the environment’s surroundings. Even in a 180 perspective the footage would distort, making the experience incredibly disorientating and nauseating to preview.

A 180 VR experience would also easily break the immersion, and this alternate plan would go against my 360 VR objective. Even if compromises need to be made, the project’s main objectives should remain constant for a better, higher quality prototype.

This meant switching to Arnold’s aiToon shader, experimenting with different light sources and making adjustments to the 2D art, which led to a week’s delay in the production schedule.

For example, Arnold shaders could only read my PNGs correctly if they had an alpha mask.

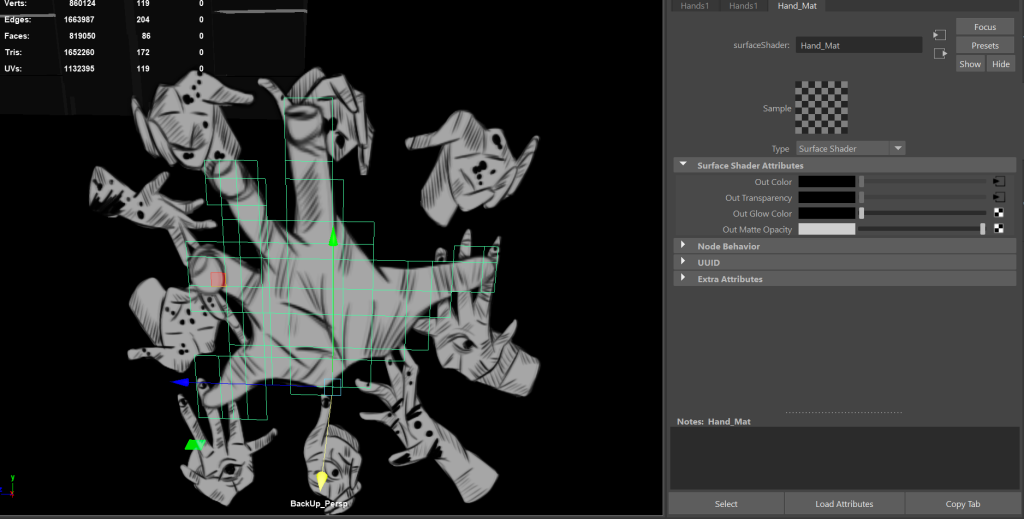

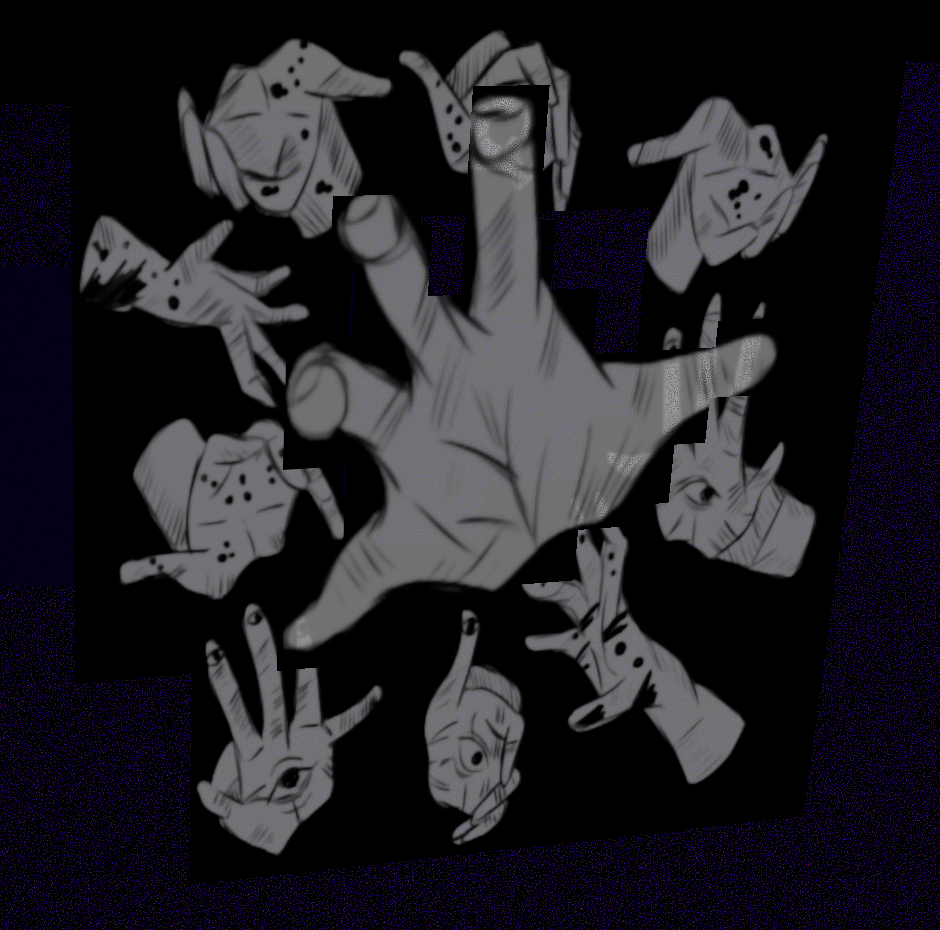

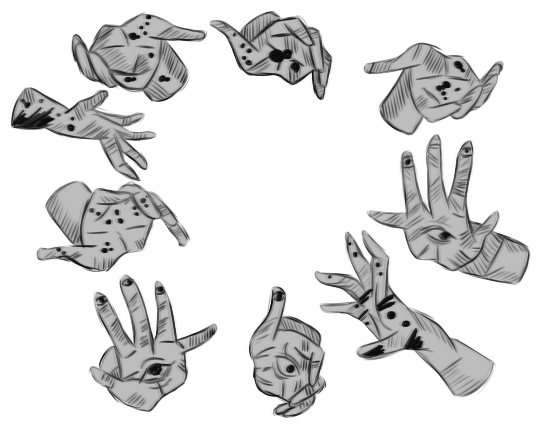

Only Maya Hardware 2.0 could translate the transparency directly. Therefore, due to time constraints, I had to find an alternative to creating an alpha mask for each art piece. For the hands art, for instance, I used the Multi-Cut tool to cut out the black areas surrounding the hands, creating a hole for the multiple hands to appear from. The action panels needed a different solution, so I added a halftone background to keep the manga and comic aesthetic consistent, even if it meant sacrificing the illusion of Mimi’s presence in the environment.

This also meant I had to cut minor features, such as additional artwork showcasing a variety of advertisements in the world. This collection was mainly intended to add world building depth to the experience and give users a further understanding of Mimi’s universe.

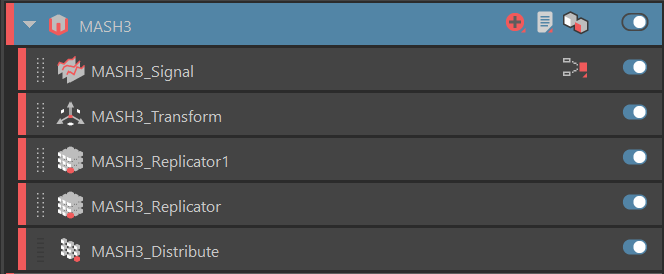

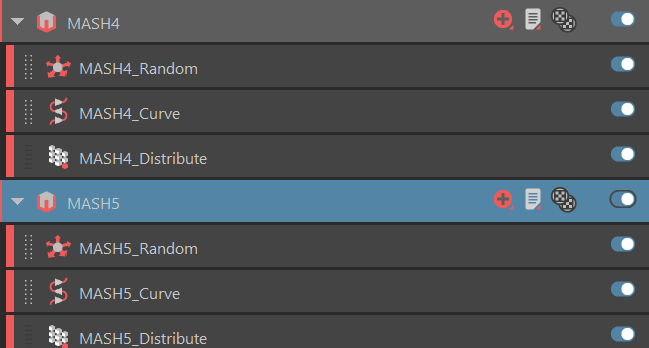

Whilst these may seem minor from a technical perspective, this experience relies on art to guide the player, so in future improvements I’d like to continue adding more art to make the city district feel lived in before its destruction. I would also like to add more MASH networks to further simulate presence within the city, as well as more monsters to emphasize the horror tone of the experience.

Here is the final Gantt chart, showing the predicted time spent in comparison to the actual time spent on each task. In conclusion, I need to make my work schedule more flexible so that delays such as these do not affect my workflow as much as they did in this project. Despite this delay, I was able to make enough changes and find alternate solutions to prevent further delays in my schedule.

Software Proficiency

During the project, I’ve been working with different software in order to bring the art gallery together. This area is separated into 3 sections in order to showcase my workflow in detail.

2D Art – Paint Tool SAI

For the 2D art, I used Paint Tool SAI to create the art pieces, software that I have extensive proficiency with. Whilst Paint Tool SAI is not as advanced as Clip Studio Paint (professional art software that specialises in anime art), it still allowed me to learn different techniques to replicate the manga style.

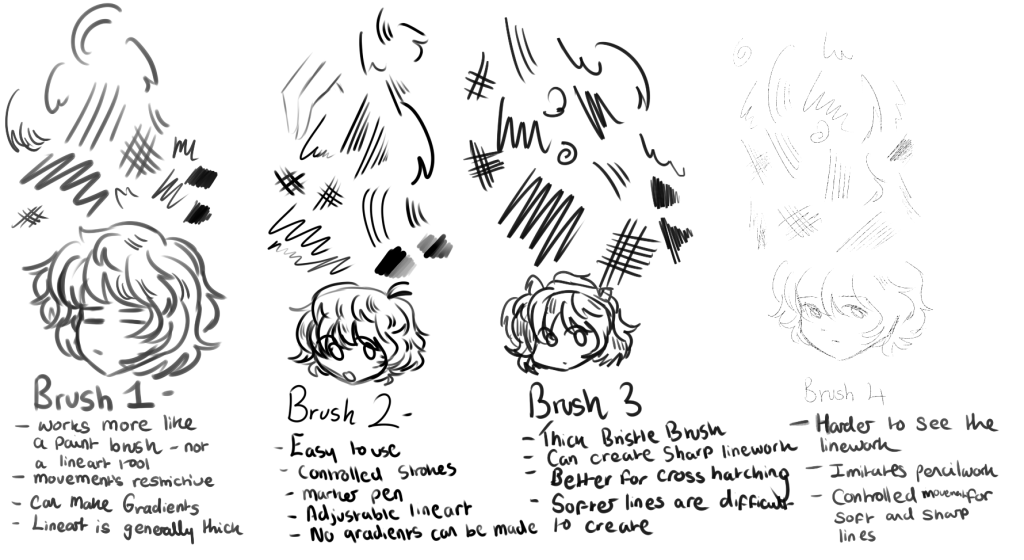

Whilst researching manga artists, I started to experiment with different brushes in my other works to see which would produce the cleanest linework, since my line art normally tends to be rough.

I found that brush 2 was the best to use because it was easier to control when making short or long strokes, creating clean lines even with dynamic strokes.

My workflow for this section was to work on one art piece a day, mainly to avoid burnout as I was working on the Maya environment simultaneously. Most of the art pieces were made with my storyboard in mind: ( https://samsudeen-2021.hulldesign.co.uk/2023/11/13/emerging-technologies-research-proposal/ ).

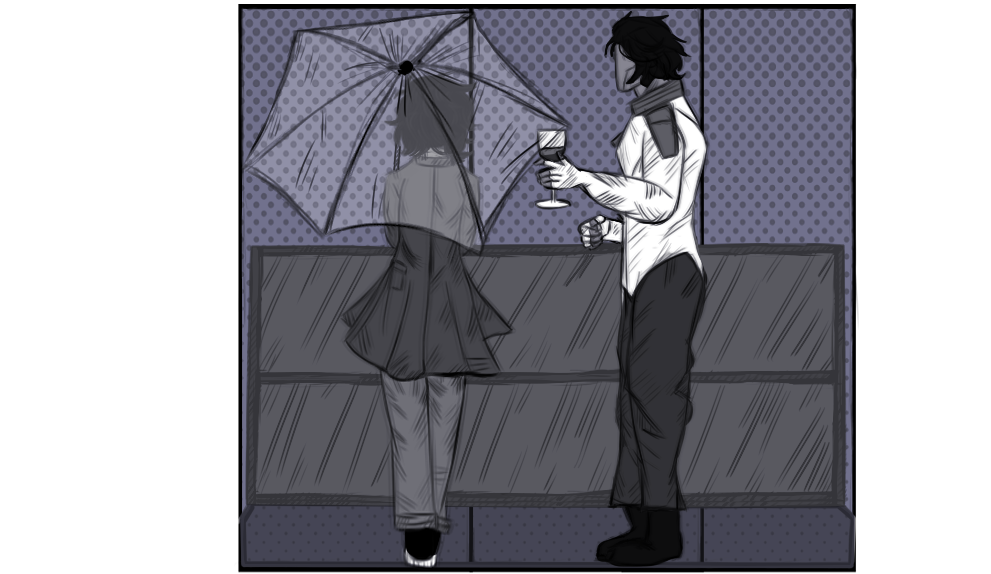

However, for the window section of the art gallery, I changed a couple of art ideas in order to add to the psychological horror theme of the world’s narrative and to showcase Mimi’s melancholic personality. I also added some advertisements to show the transition between the first and second sections of the VR environment.

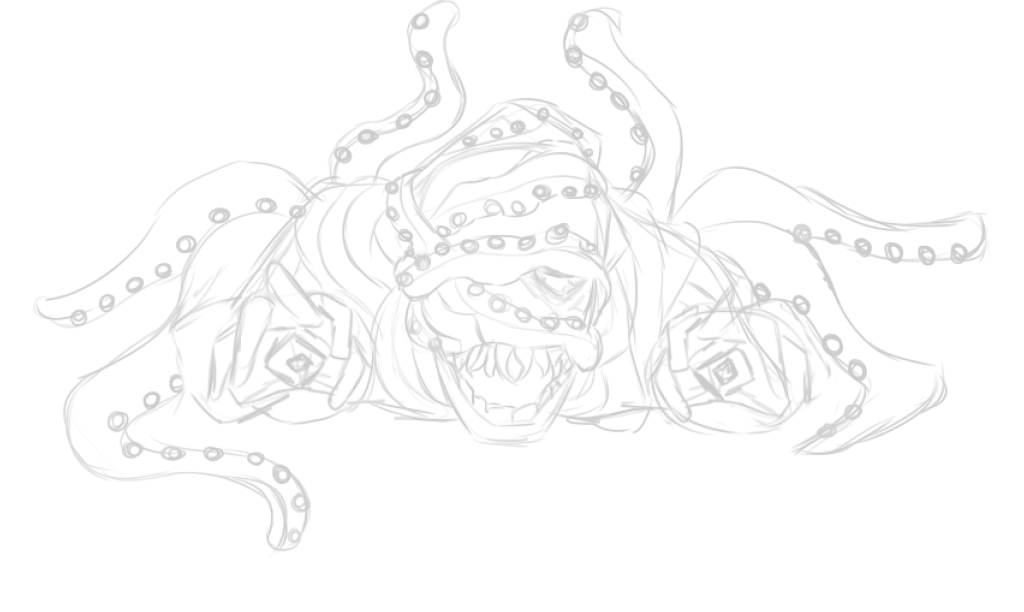

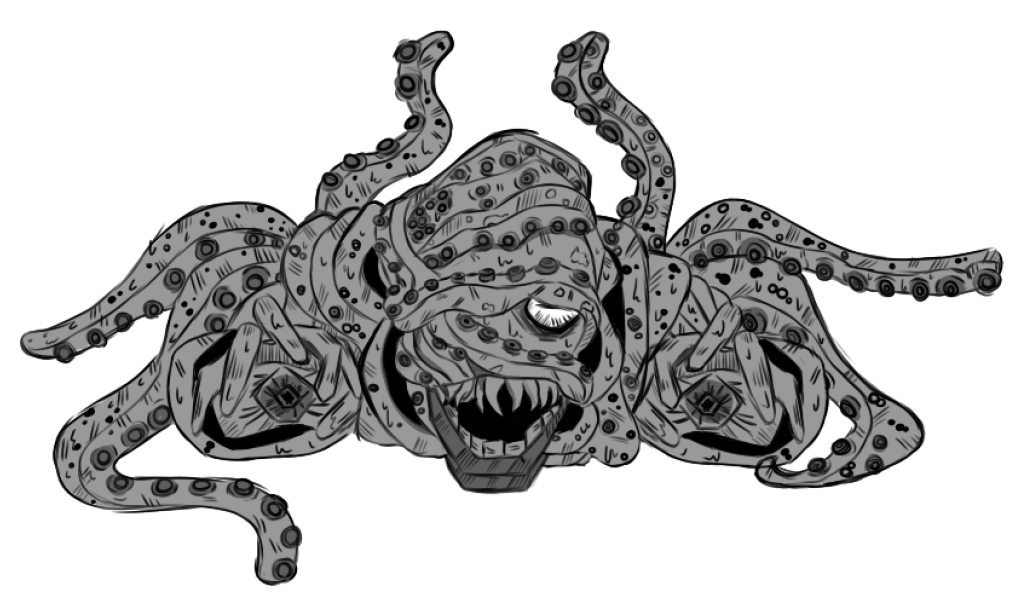

This example shows my workflow for each piece. I usually started by sketching out the basic shapes and composition. Then I worked on the line art, adding more details and cross-hatching to give the piece movement and depth. Finally, I worked on the colouring and shading. In manga, shading usually consists mainly of cross-hatching and black gradients.

However, whilst I was working on the art, I was also testing the Maya environment, most of which is covered in black, mainly due to the lighting. I wanted to keep this to make the contrasting textures more effective.

But this also meant having to use different shading techniques to prevent the art from blending into the background.

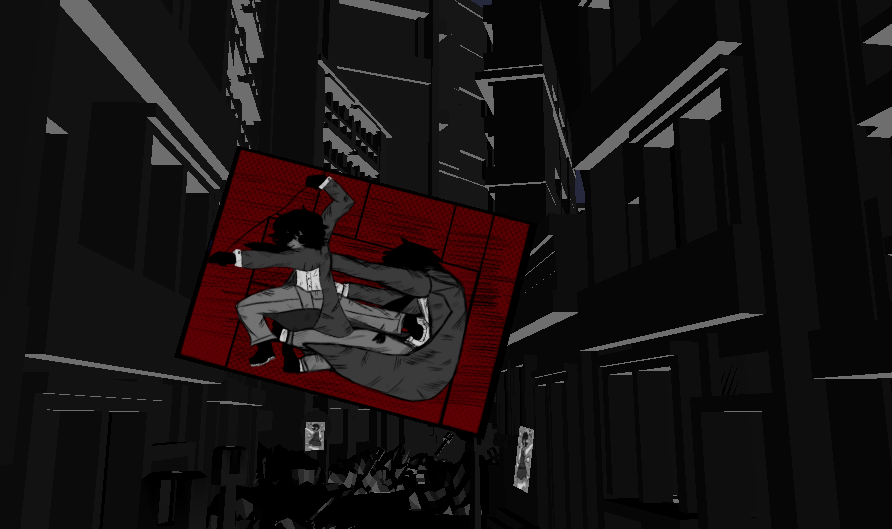

Eventually, as mentioned in the Project Management section, I had to add some halftone backgrounds due to the shader changes. These were mainly added to action panels.

Here is a gallery of all of my art used for the project.

Environment and Asset Creation – Maya

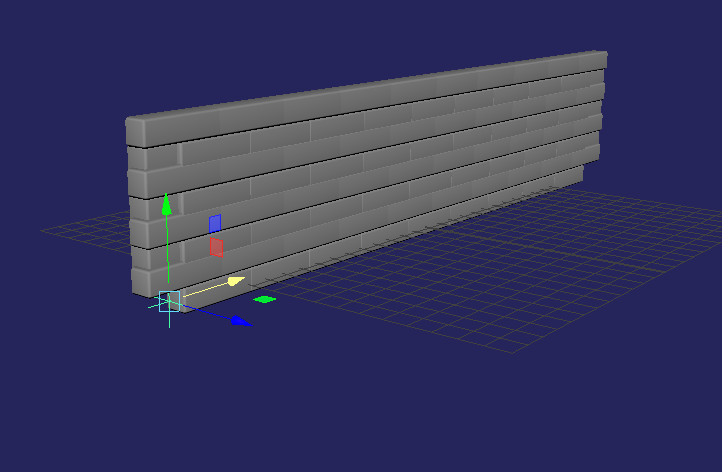

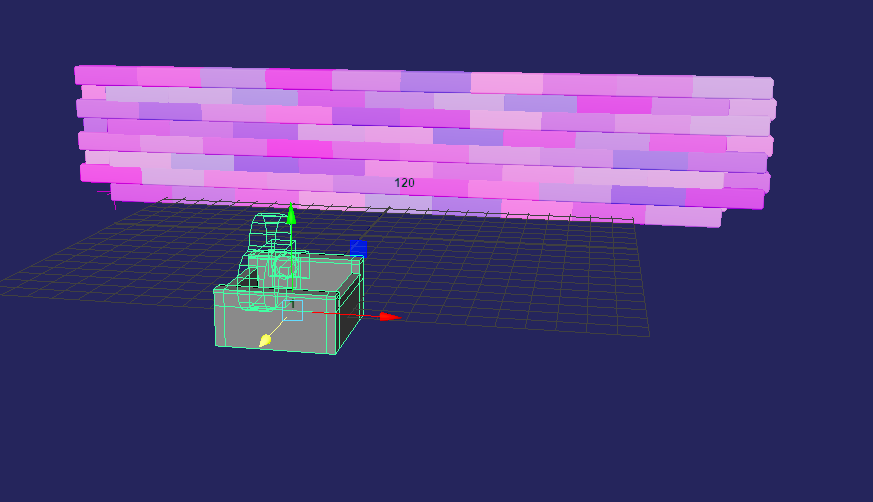

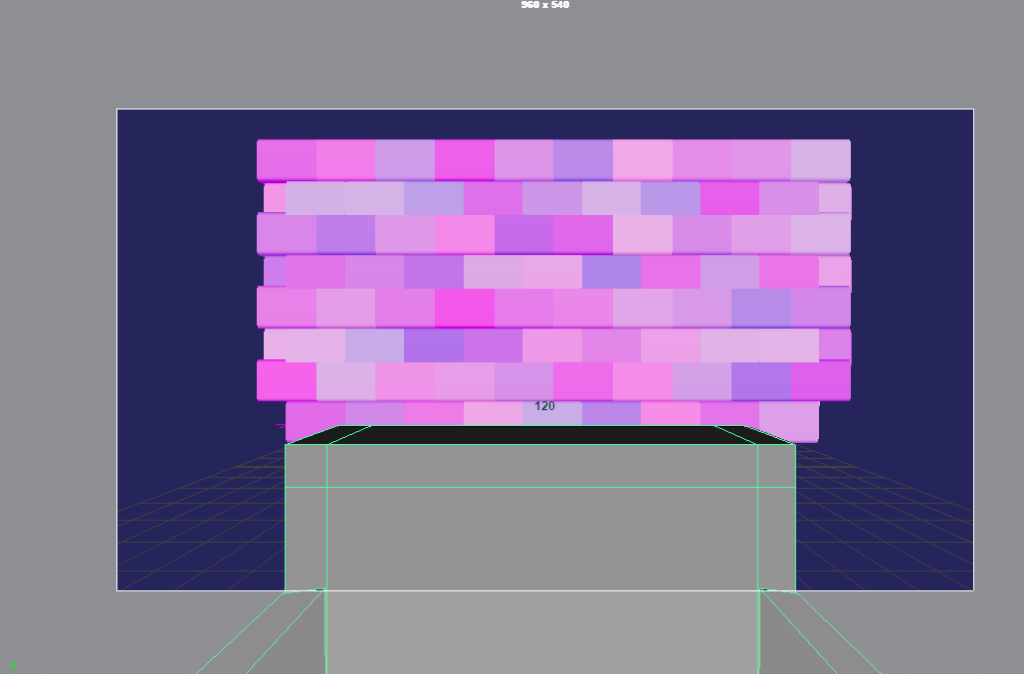

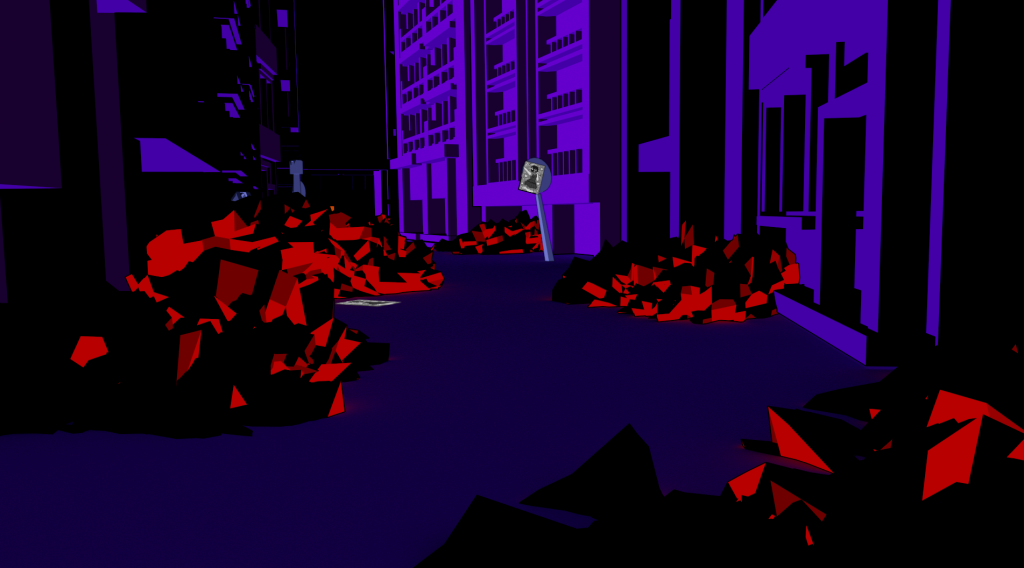

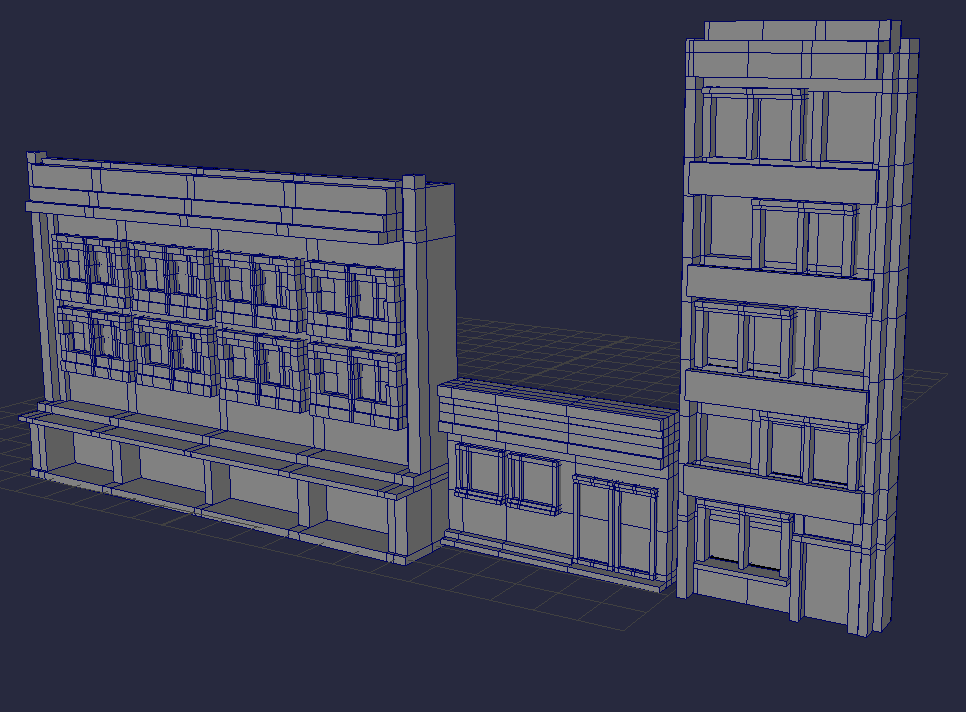

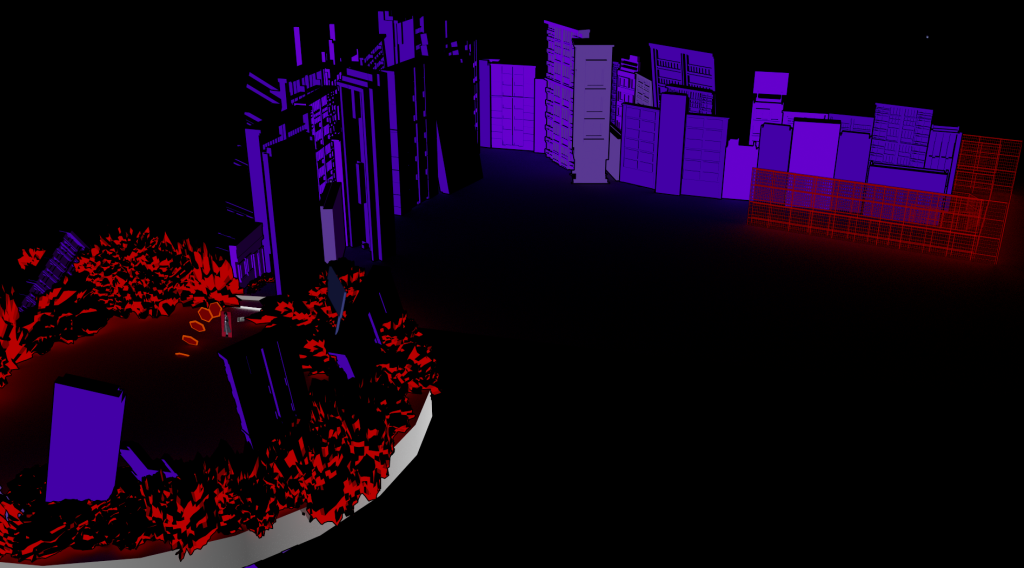

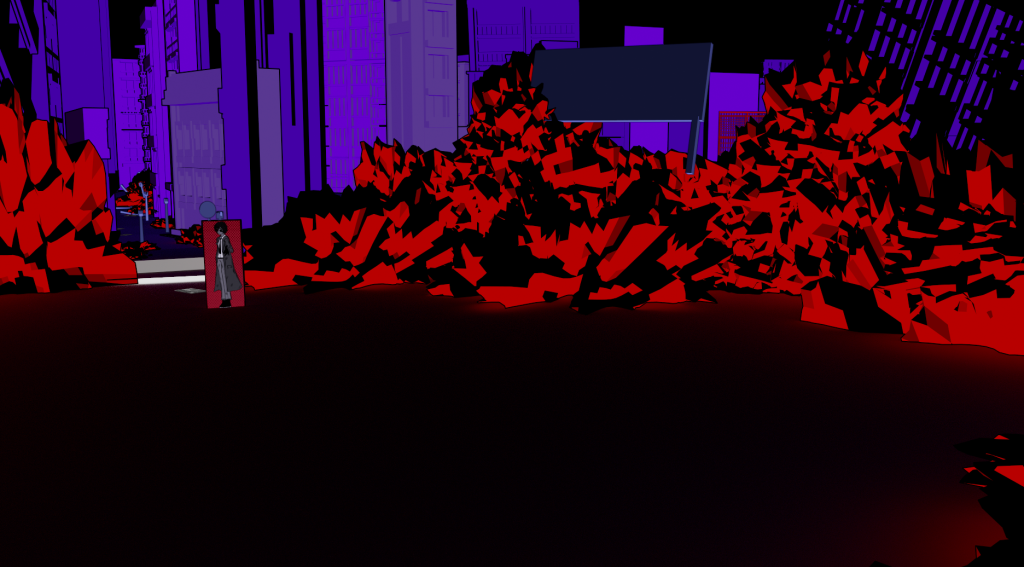

For my Maya workflow, I began with asset creation, making different buildings that could loom over the environment and the user. The main objective here was to make the buildings appear intimidating and artificial, almost as if they aren’t meant to be standing in an environment filled with horrors and destruction, going back to the established theme of chaos versus order.

I used this image as a reference for several of the building assets because of how visually striking the city looks within this composition: a city that stands tall, making the character appear small despite being the main focus of the image. This is an effect that I’d like to apply in a VR setting.

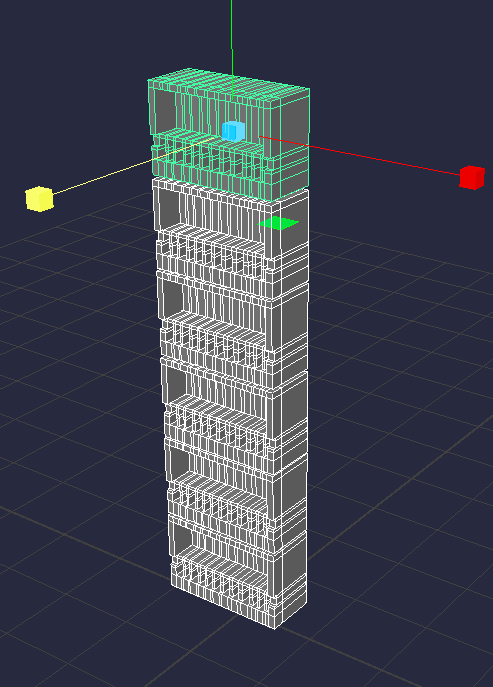

This is mainly achieved by making different buildings, each with different sizes and details that allow them to stand out.

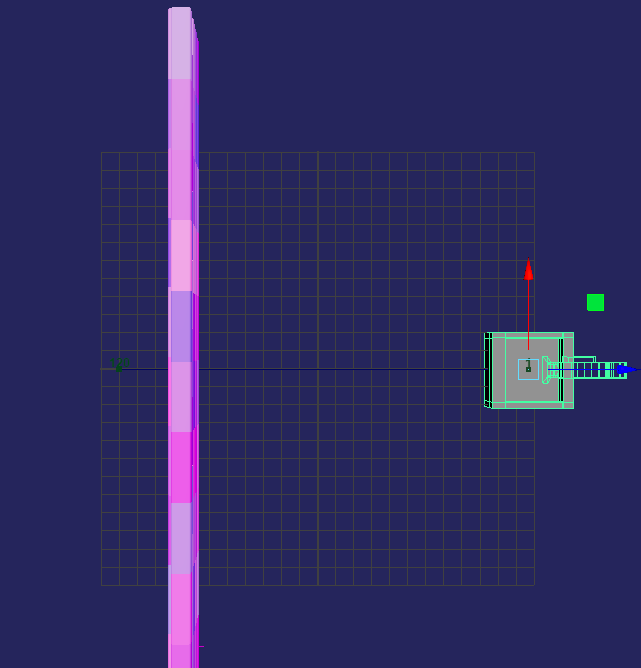

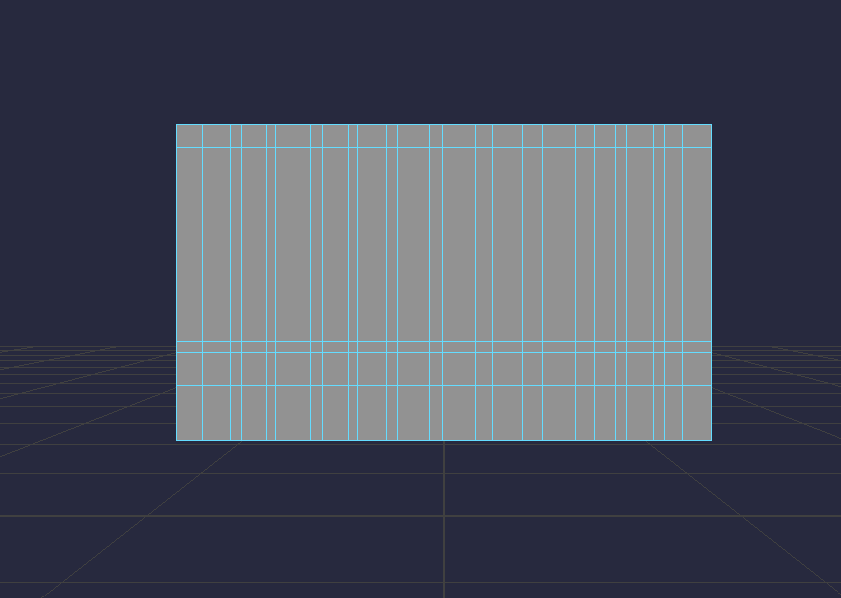

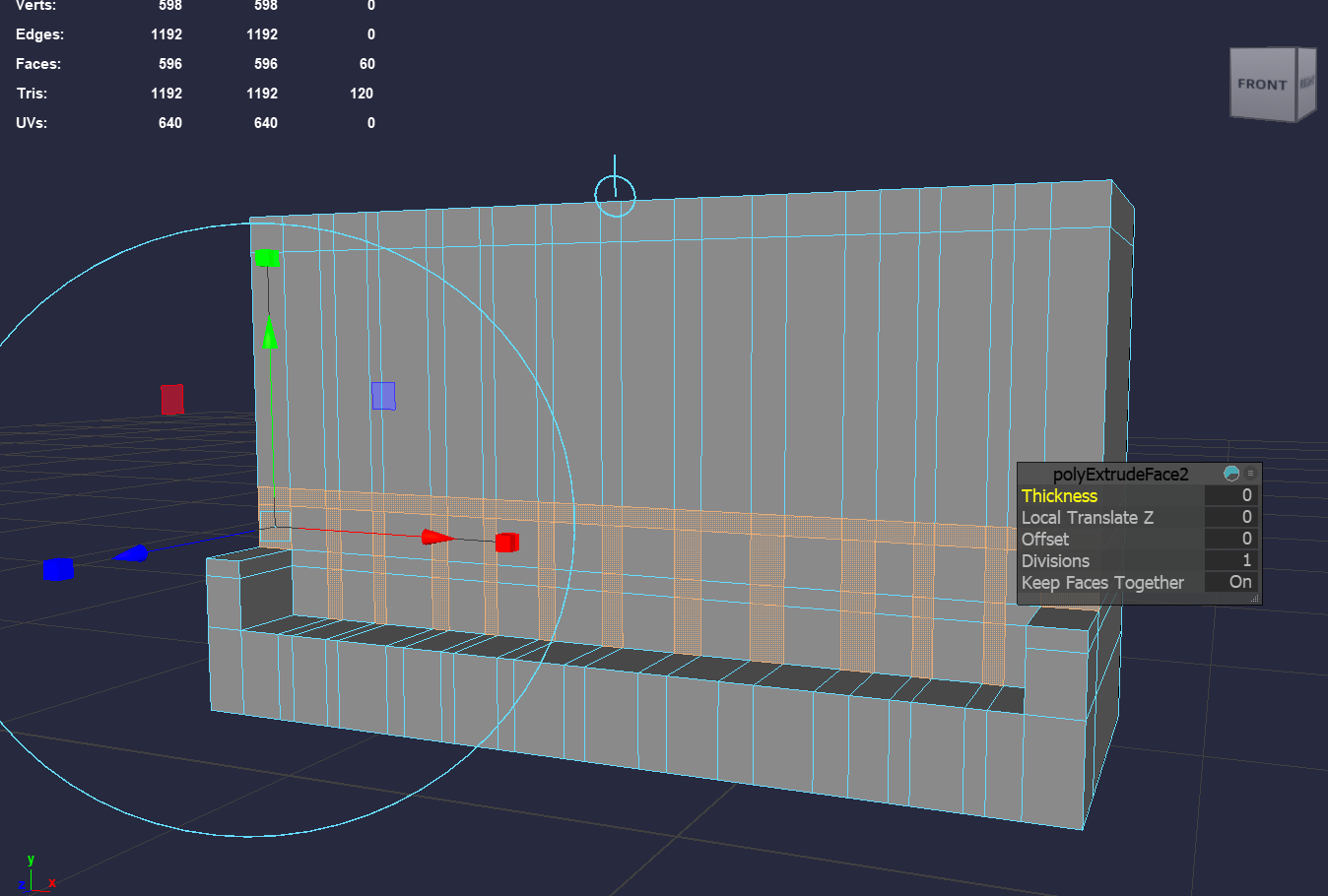

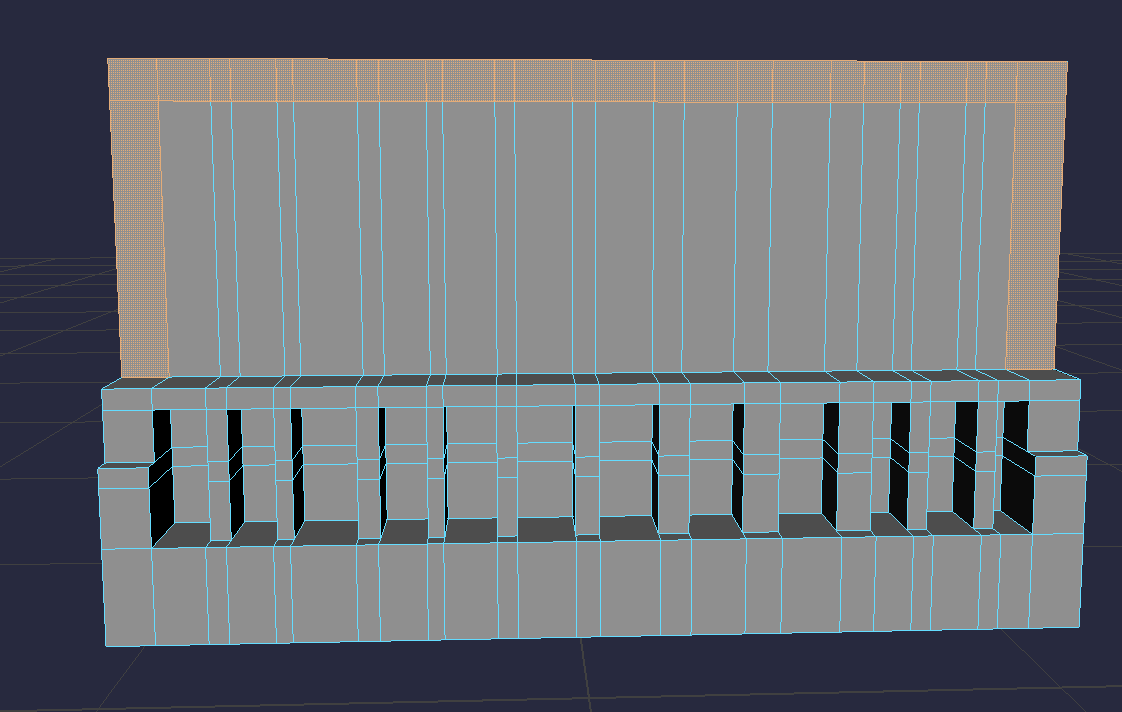

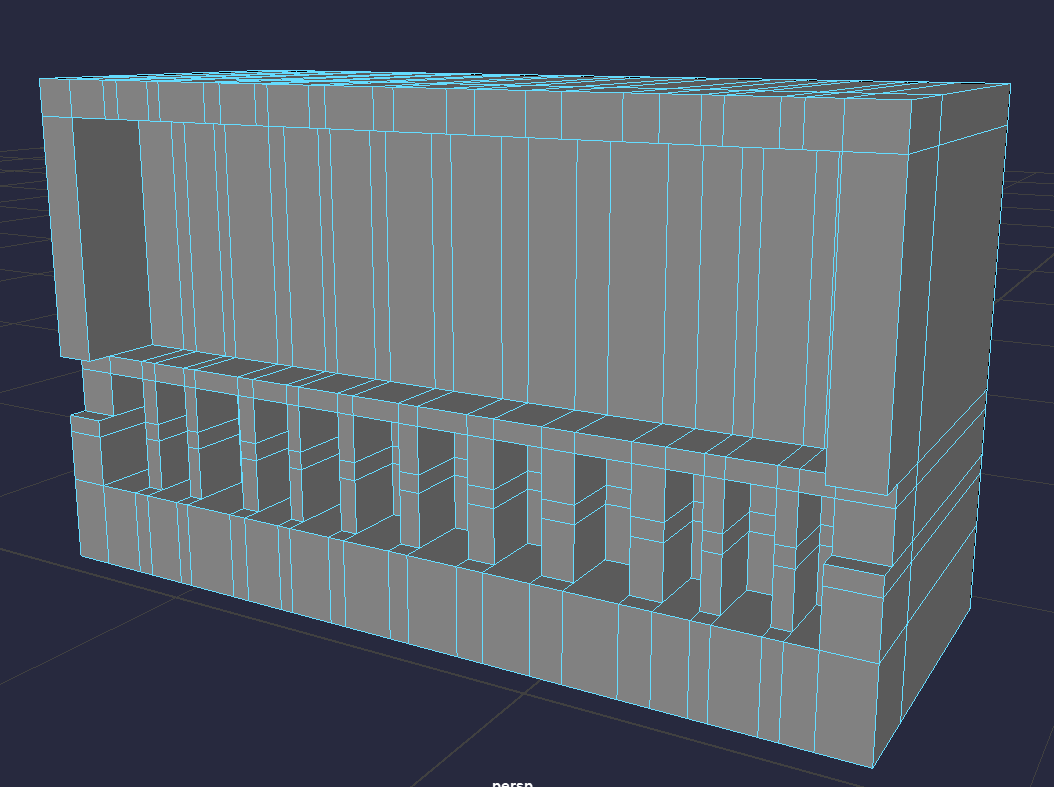

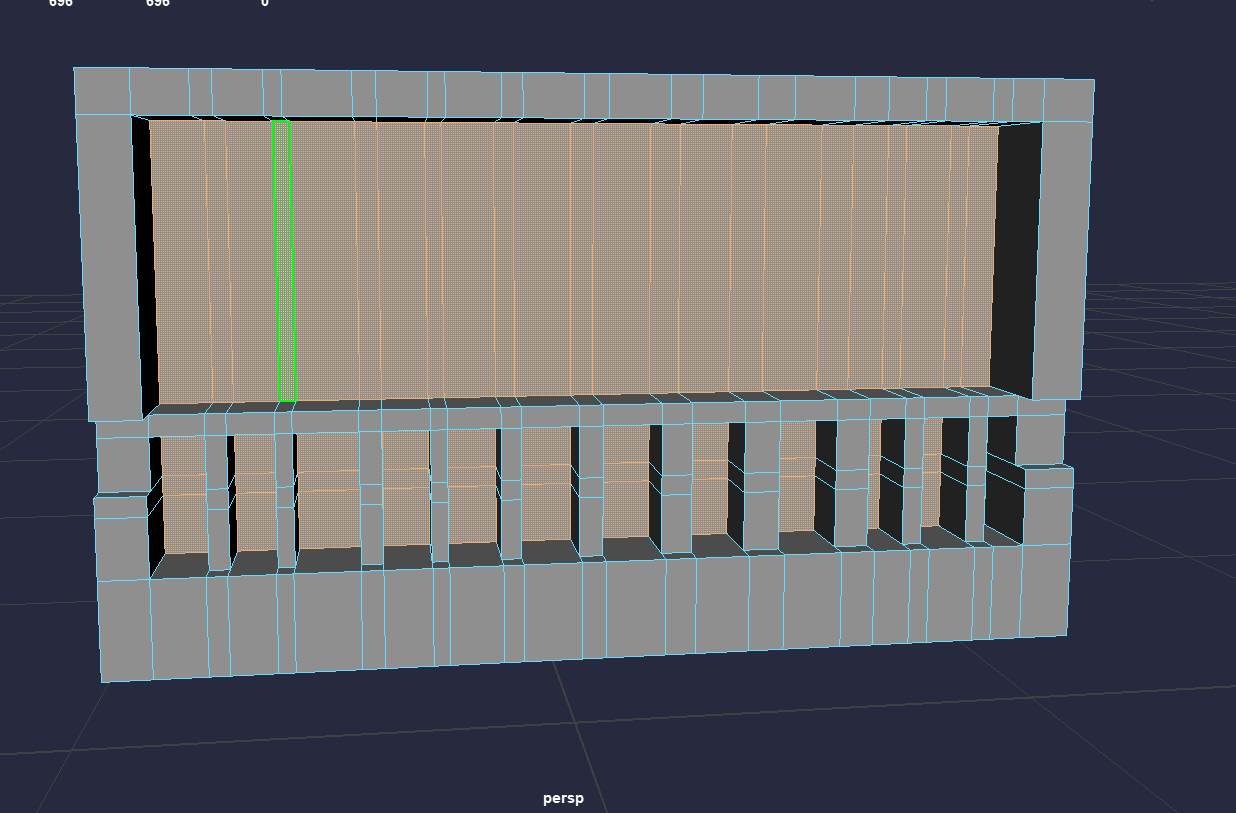

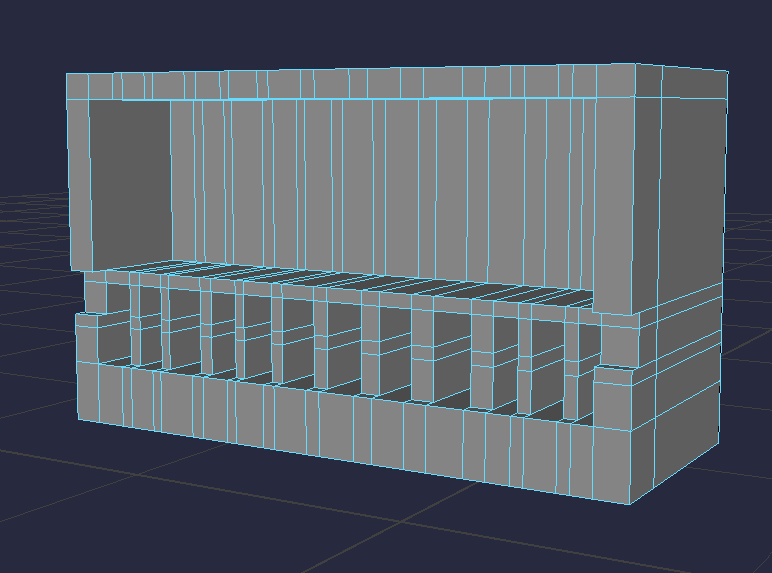

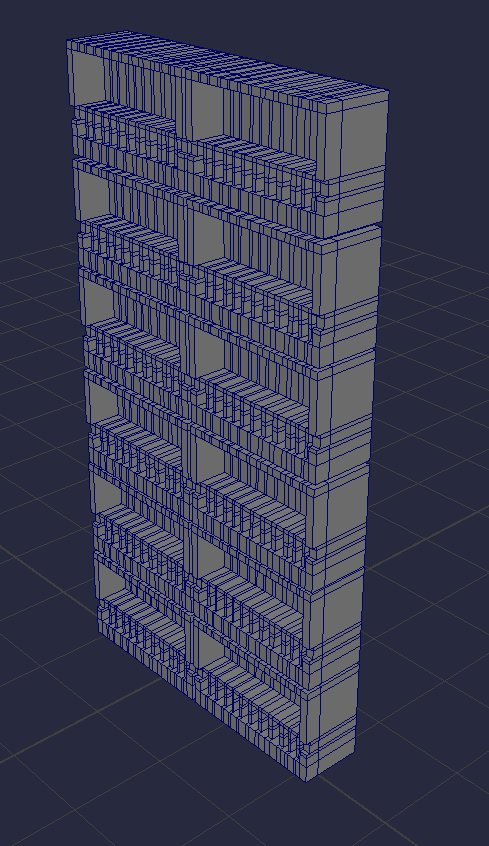

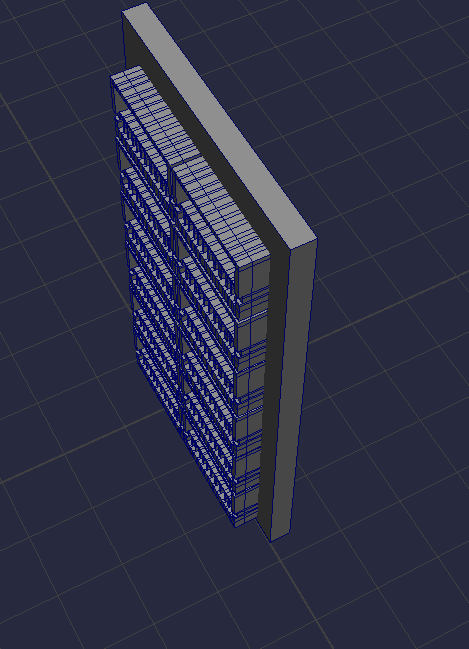

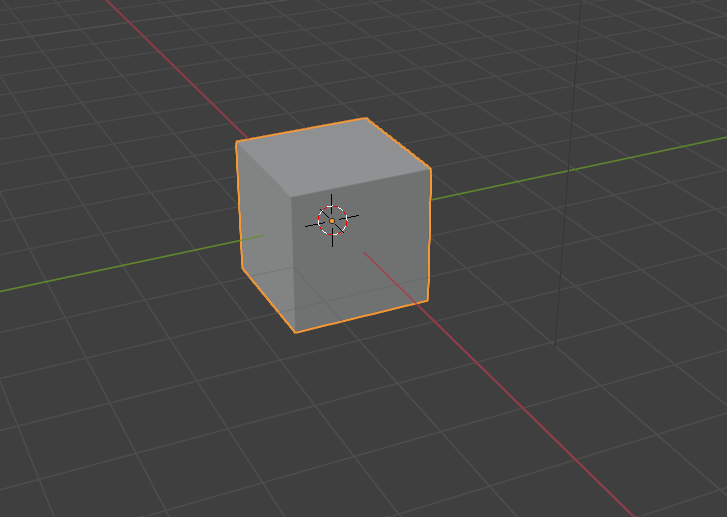

I modelled these buildings using the Multi-Cut tool to separate the meshes into different sections, then used the Extrude tool to add finer details and depth to the shapes, creating objects such as railings, windows and balconies.

Afterwards, I duplicated the finished section into rows and made a separate mesh for the foundations of the building.

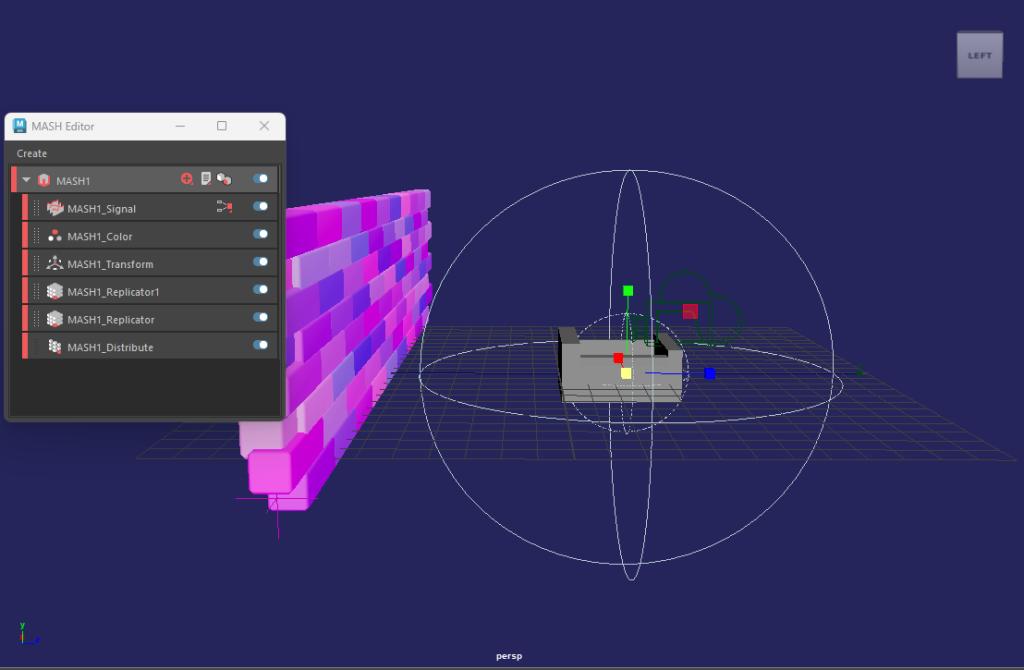

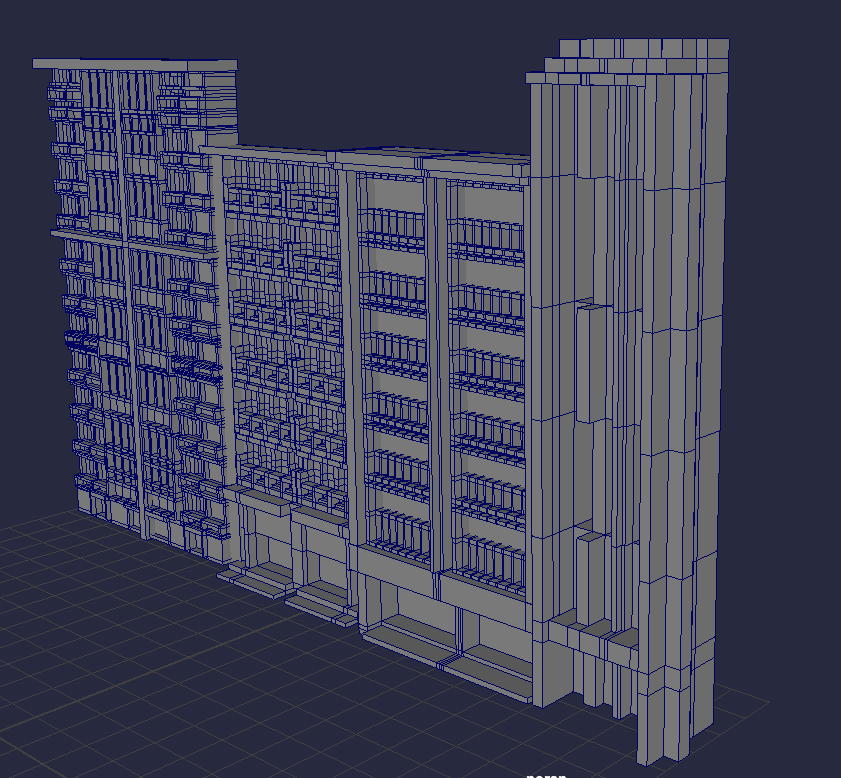

Originally, my plan was to use a MASH network’s Replicator node to create these buildings efficiently; however, I found that I couldn’t make multiple duplicates of the network. Instead, I made these buildings manually, which took longer within the production schedule but meant that I had my own meshes. In my experience, MASH networks tend to be unstable with multiple copies, so this was the best solution for this issue.

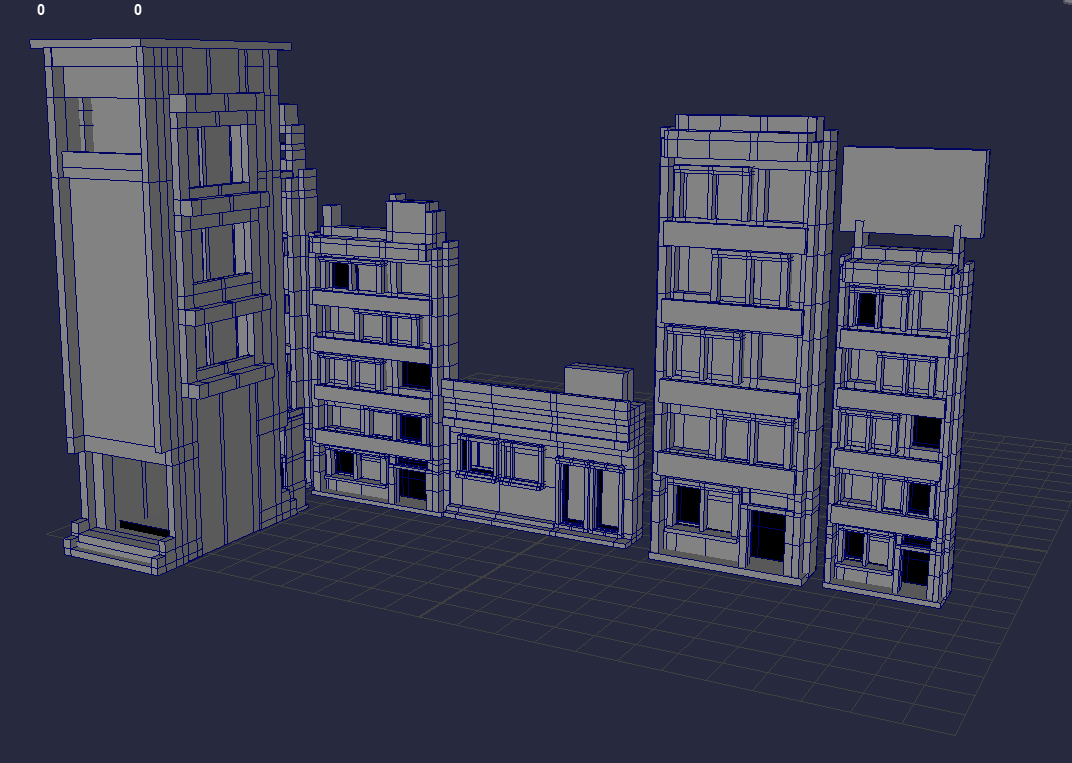

This was my general workflow for each building, although for certain ones, such as the smaller buildings, I experimented with making a single mesh for the entire model rather than building it up from separate sections. This added detailed variety to the buildings, such as a convenience store or a smaller motel-like apartment.

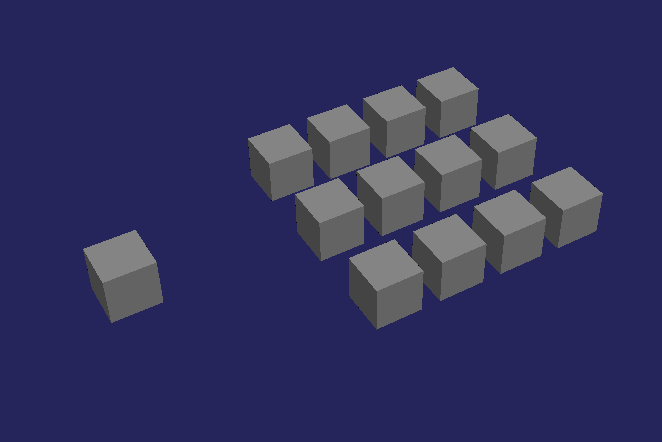

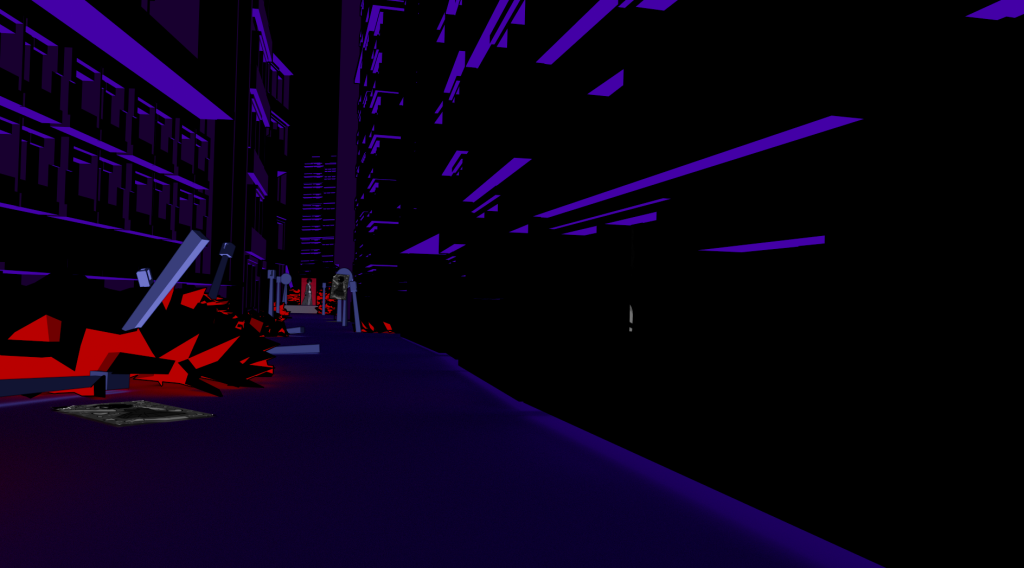

Because this is a prototype and the project had time constraints, I only made 13 unique buildings, 7 of which are variants with ‘open’ windows for the third section of the VR experience.

Because of this, my main concern was making each building feel unique whilst reusing the same buildings throughout the experience. However, when I started setting up the city, I made smaller adjustments such as changing proportions or rotating the buildings to make them fit within the composition. Once I added in the VR camera and animated its movements, this composition of neatly packed buildings felt different with each step through the city. Even if the buildings aren’t something that the user will fix their main focus on, they still make the walk through the streets visually unique whilst showcasing the models from different perspectives, which resolved my concerns.

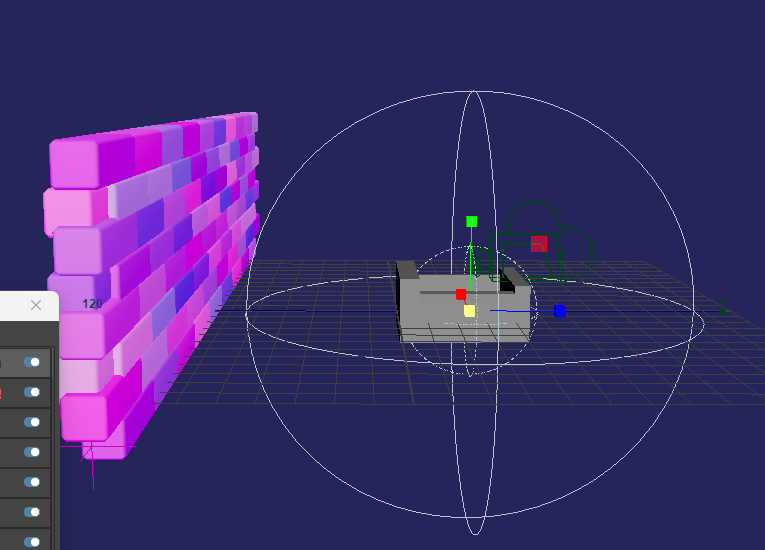

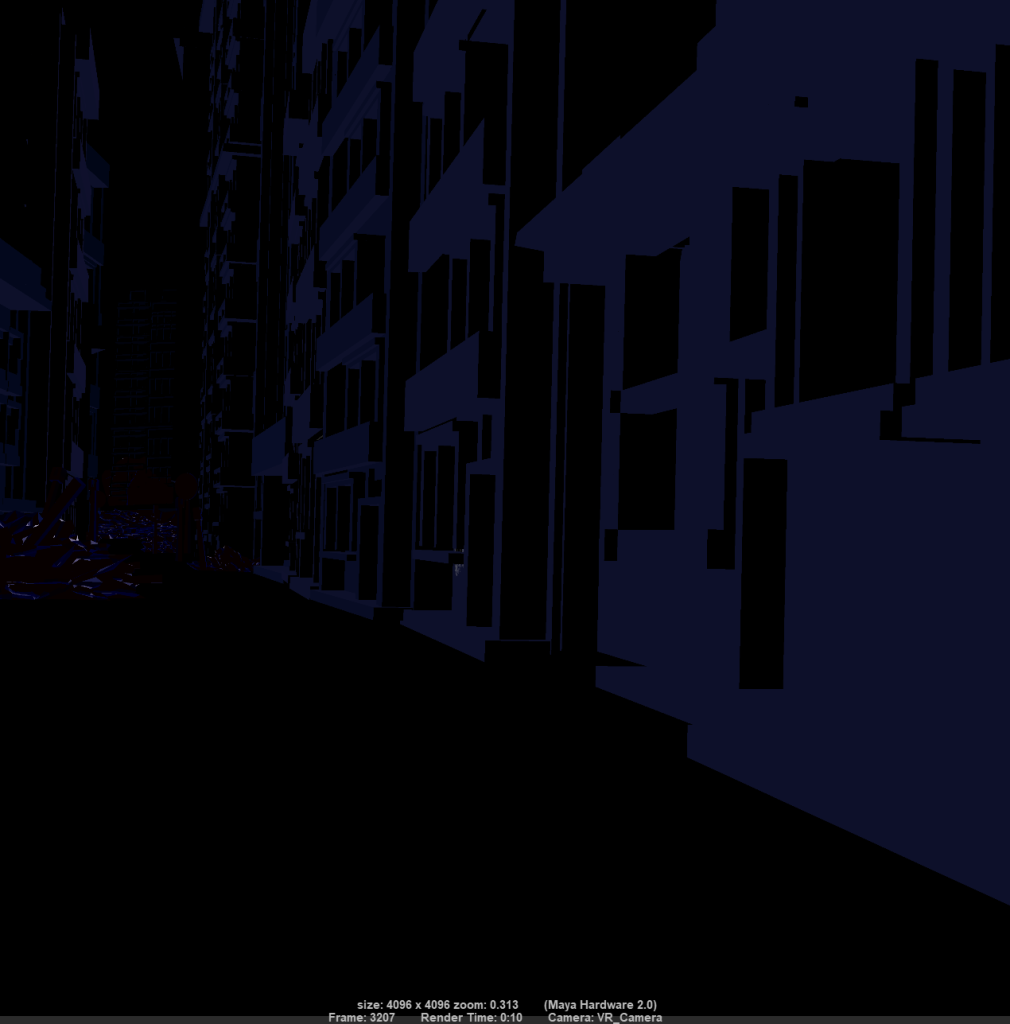

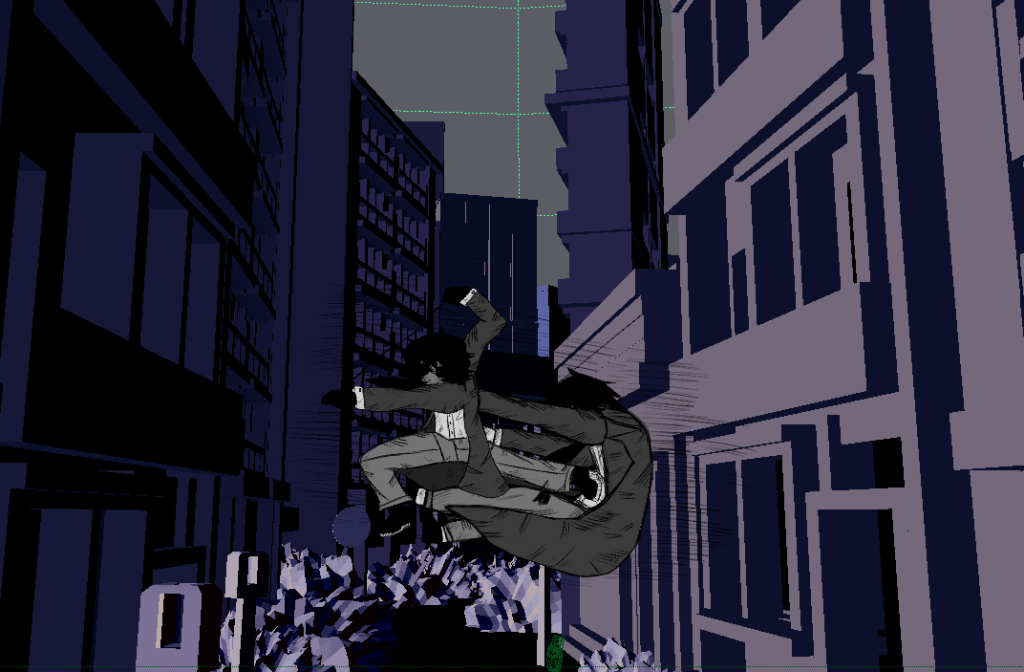

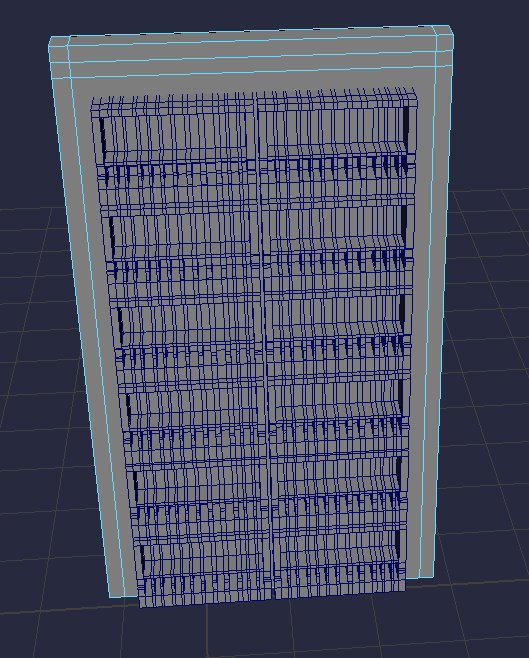

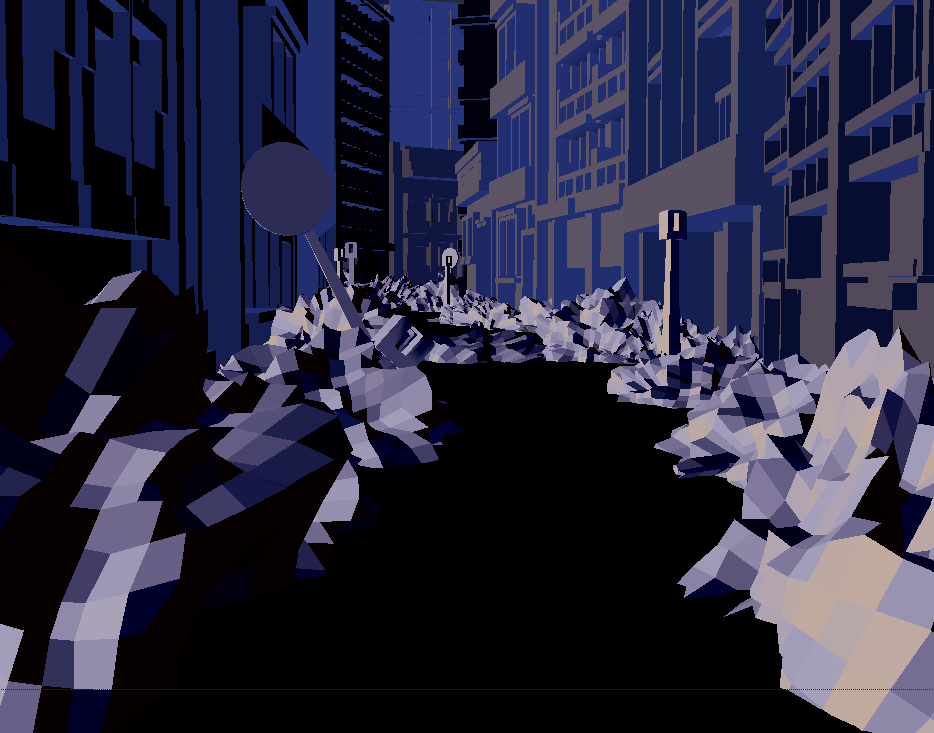

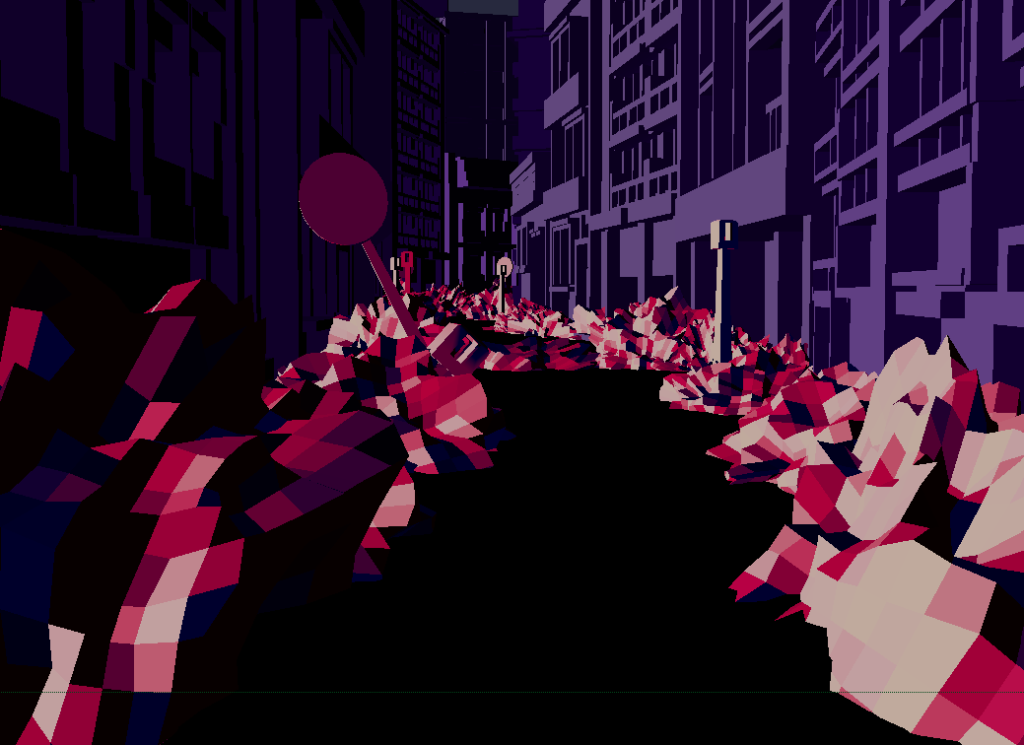

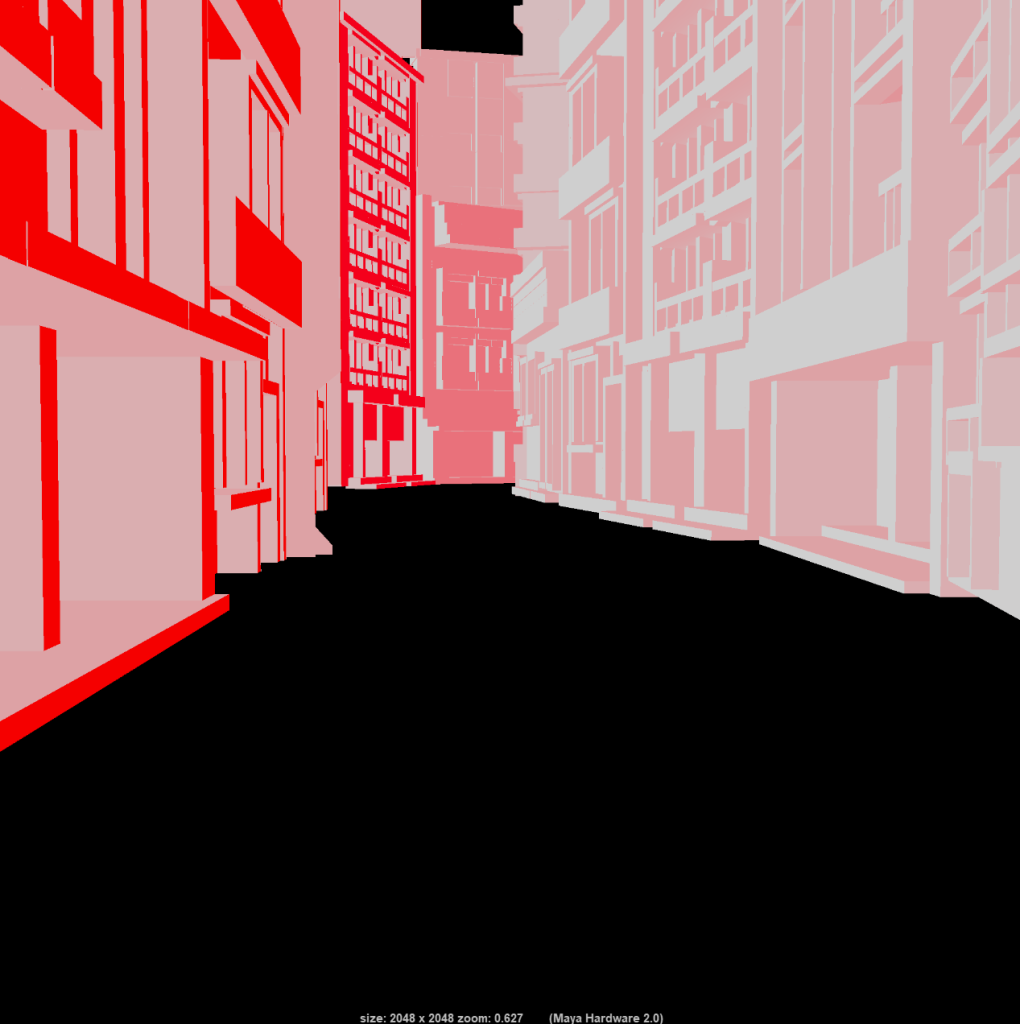

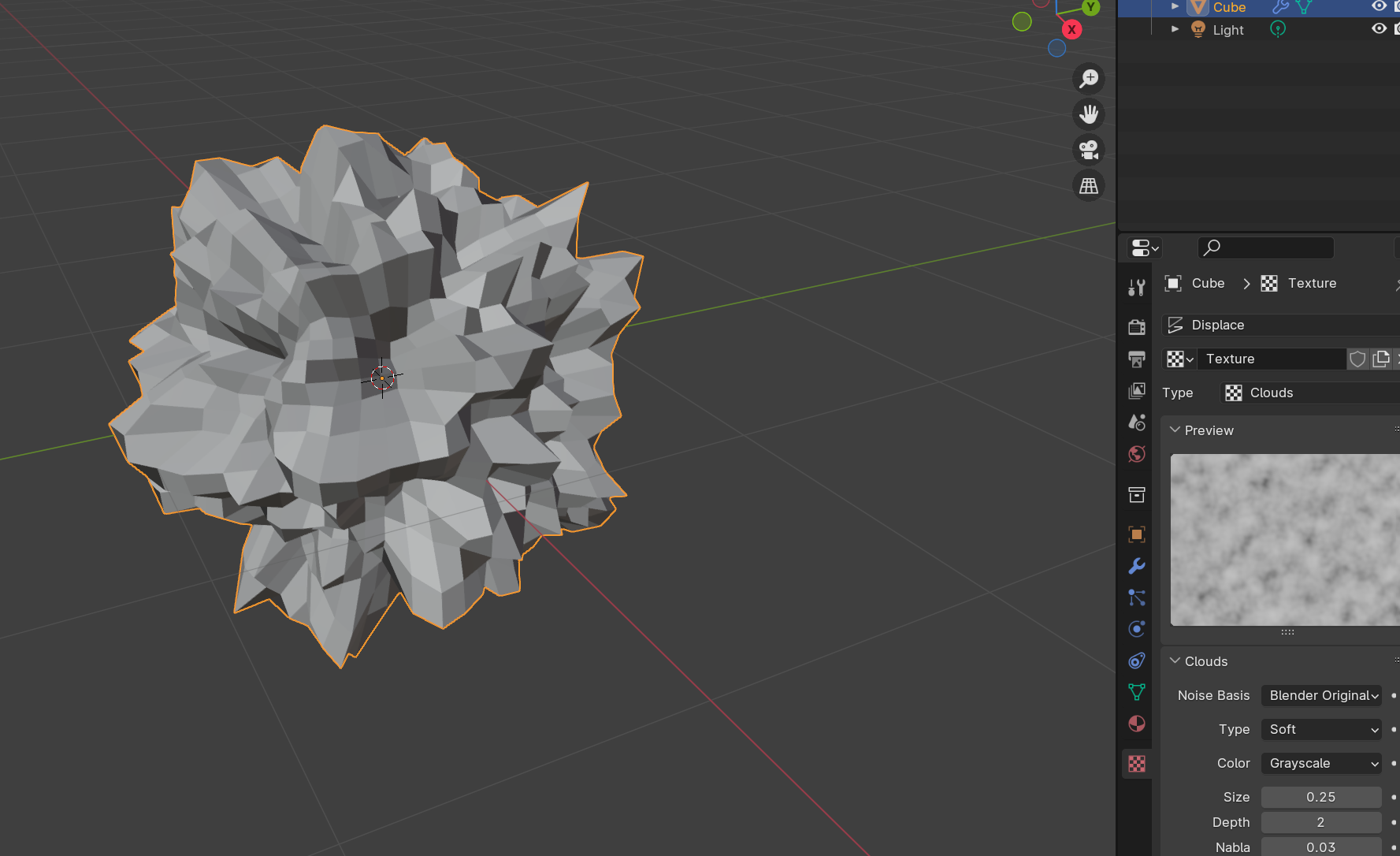

Once I had added the VR camera to the scene, I started to apply textures and lighting, experimenting with different tones and lighting techniques to see what would give the best render outcome. I created this shading effect using various colour ramps that helped adjust the lighting and shadows within the scene. At this time, I was planning to use Maya Hardware 2.0 to render.

However, due to the rendering issues discussed in the Project Management section, I had to switch to Arnold and its aiToon shader, and therefore change the textures halfway through production. Despite this delay, I found that this exploration helped influence my choice of colour schemes once I made the switch. Whilst I liked this paler colour scheme, from a VR perspective the colours blend into the different objects, making the contrast far less prominent and visually expressive.

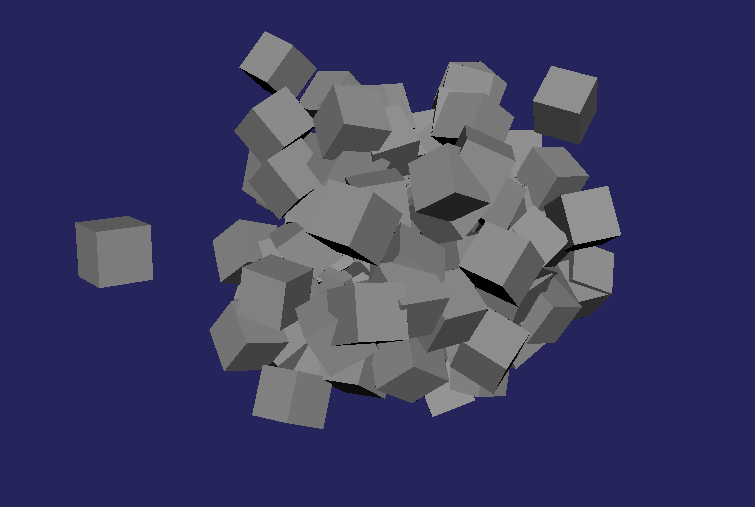

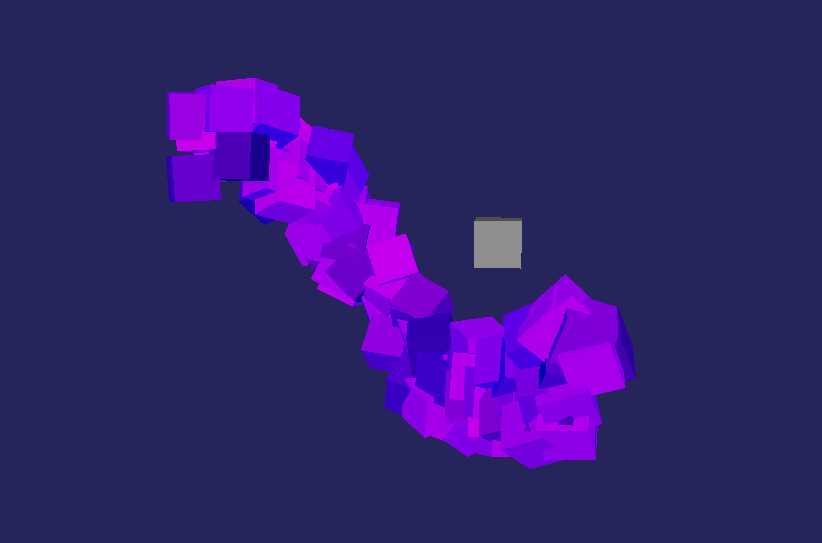

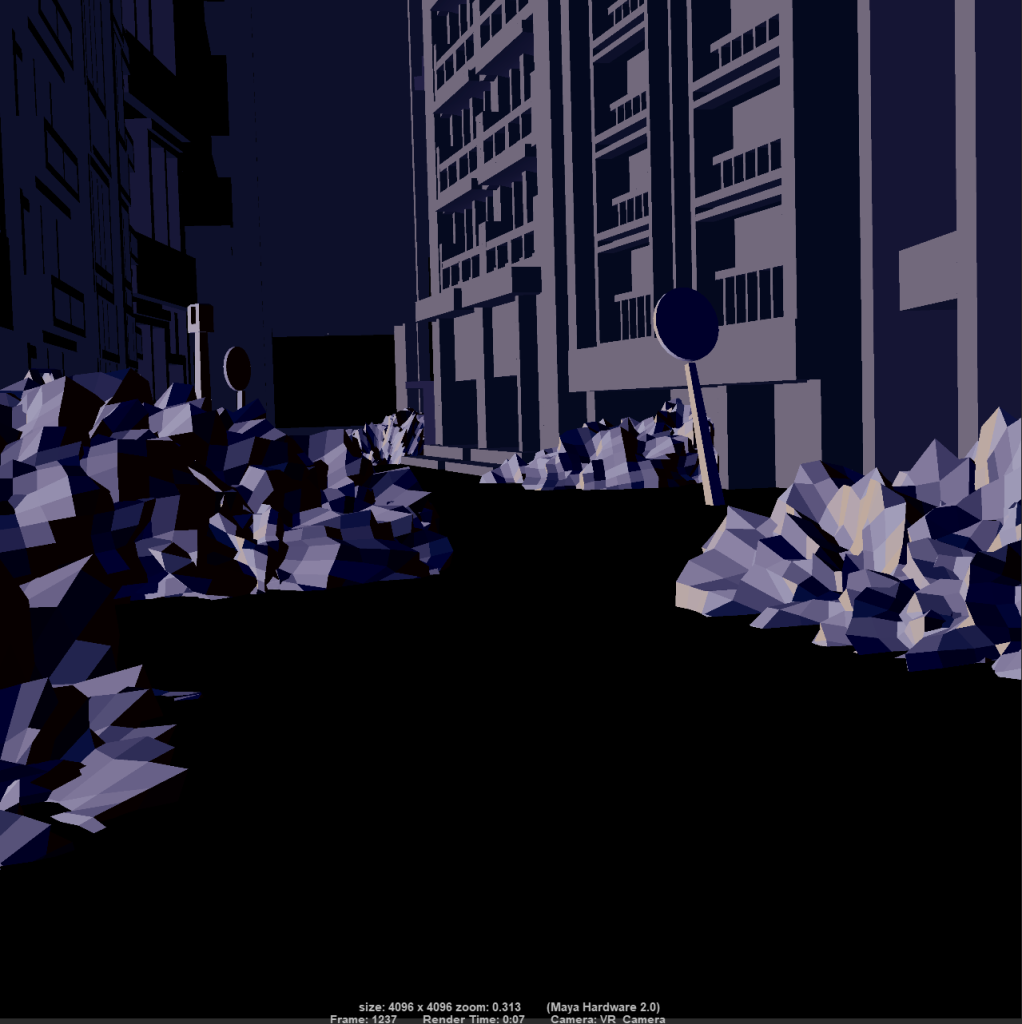

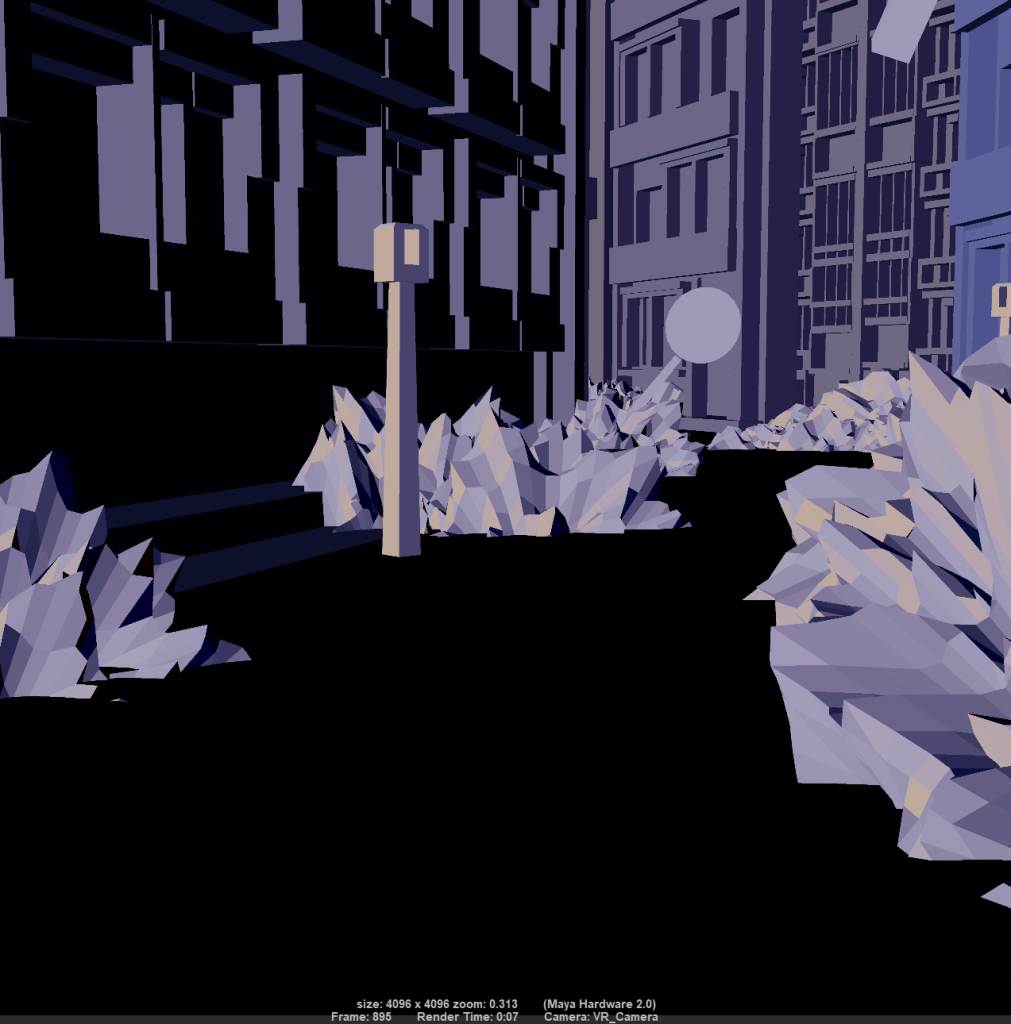

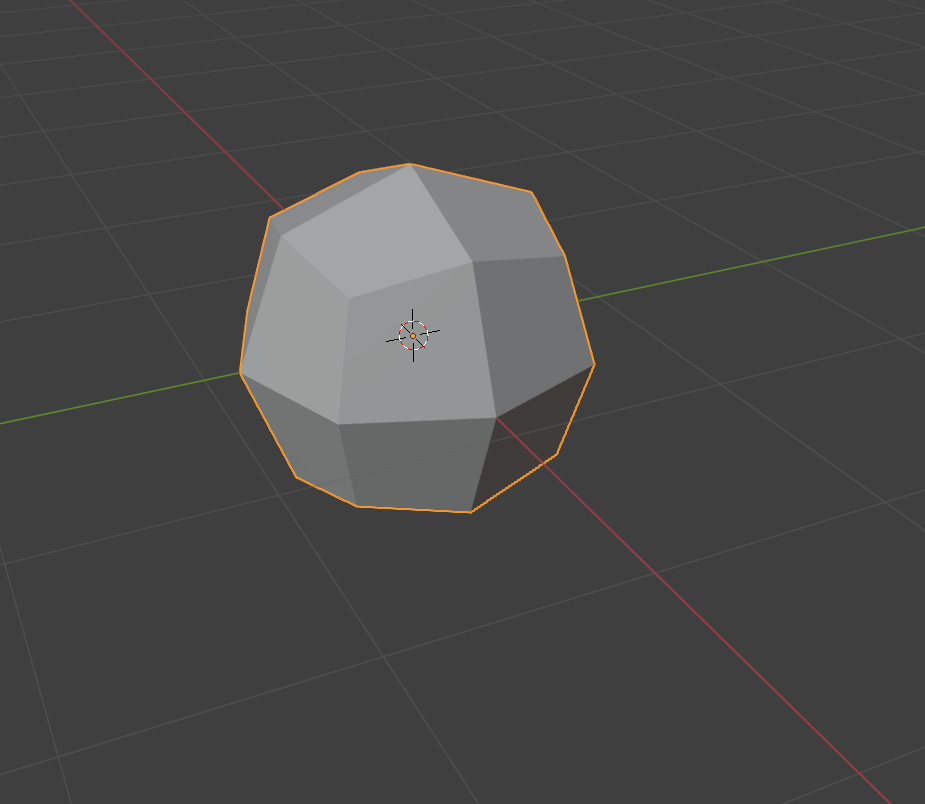

I also started to make objects during this time, such as simple street lamps, stop signs and billboards. The most challenging object to create and optimise, however, was the rubble. I made two sets of rubble in Blender and imported them into Maya to occupy the streets.

I arranged them to prevent the streets from feeling too cluttered and claustrophobic for the user. However, another issue arose once I had finished placing the rubble: the scene became completely unoptimised due to the rubble’s large polycount. To fix this, I used Maya’s Reduce tool, which halved the polycount and made the scene optimised for rendering later on.

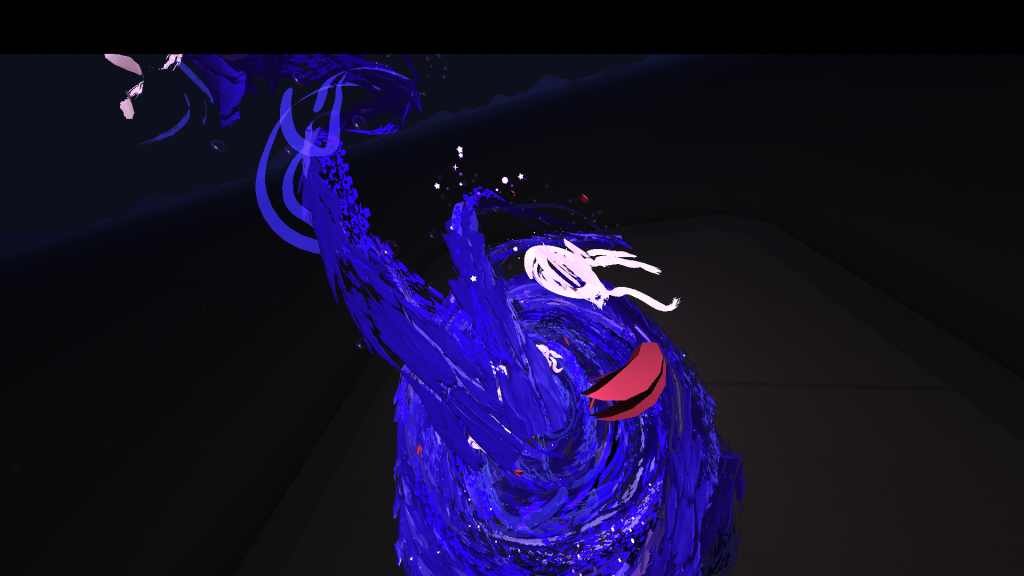

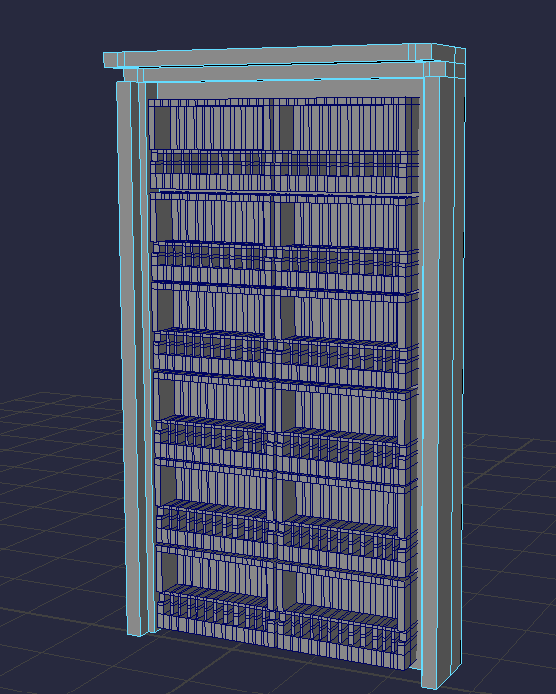

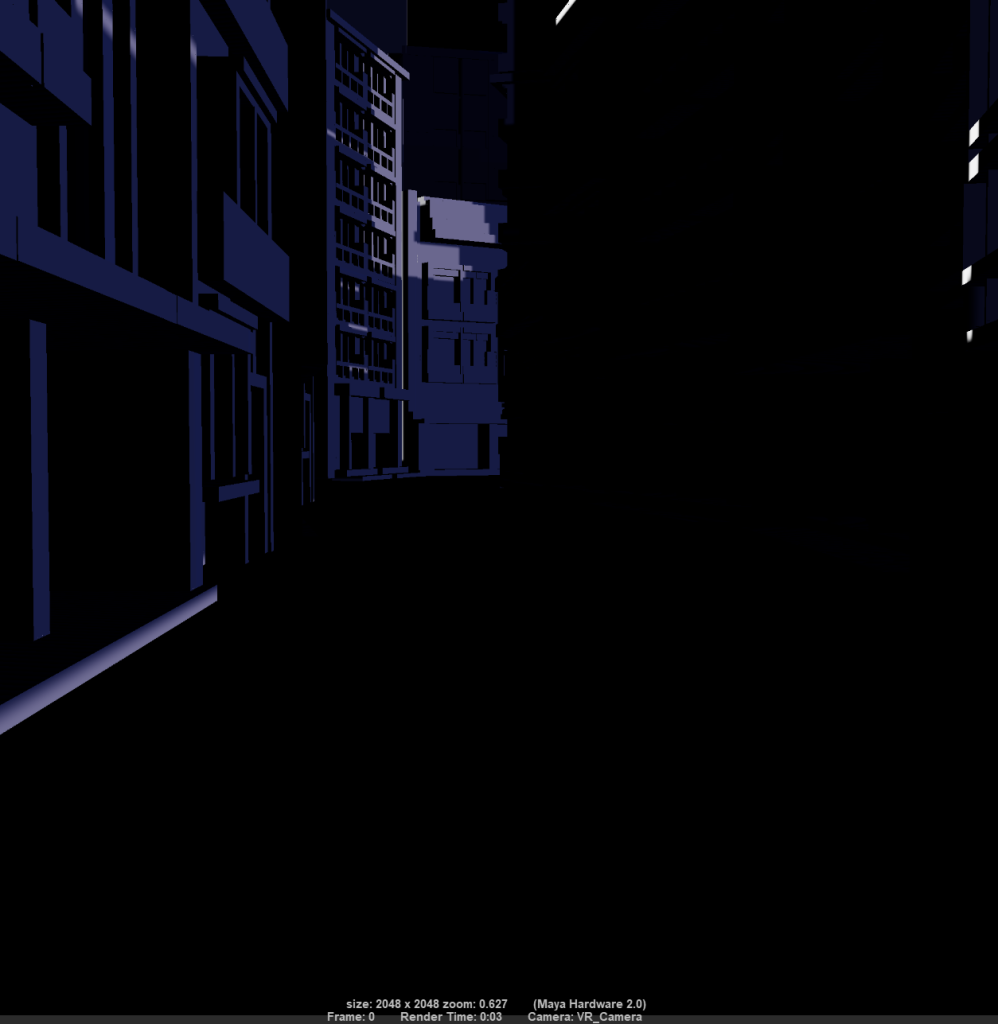

I also used this opportunity to offer a better visual contrast between the chaos of the rubble and the strict composition of the buildings. Because I was using the aiToon shader, the outline strokes on each object also made them look more visually prominent. This emphasized the manga theme, and I was able to experiment with the lighting and line stroke width to make certain areas or items stand out to the user. The images were added as Arnold surface shader textures and image planes. The adverts, however, use aiToon to add depth to the planes, as they are the most noticeable to the user walking past, making these posters blend into the environment in a noticeable way.

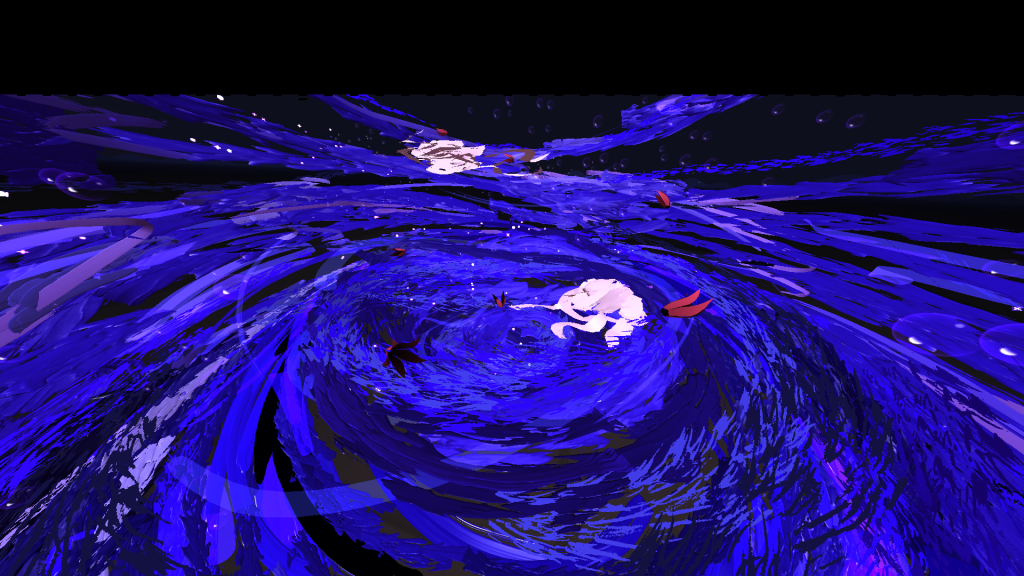

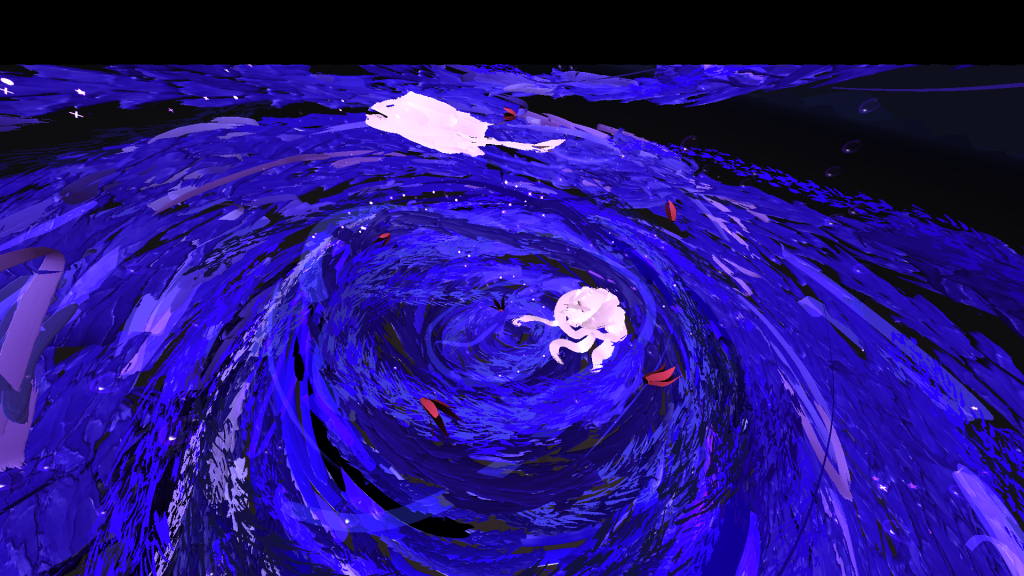

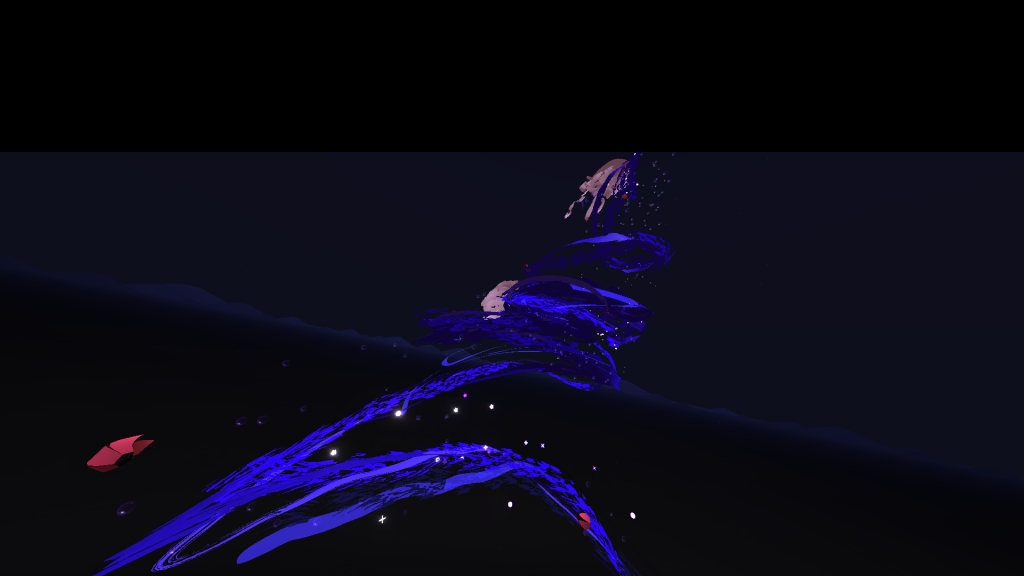

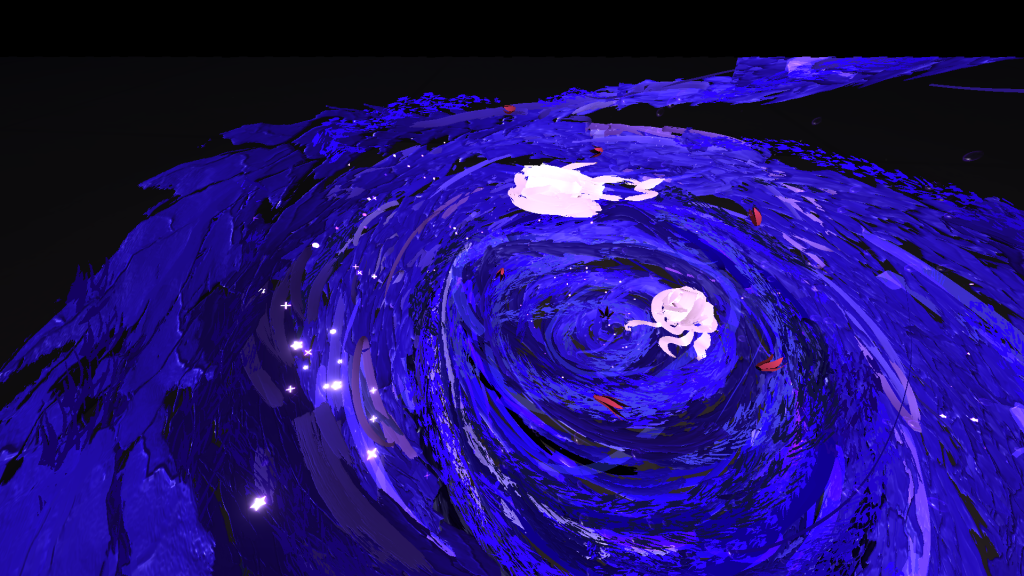

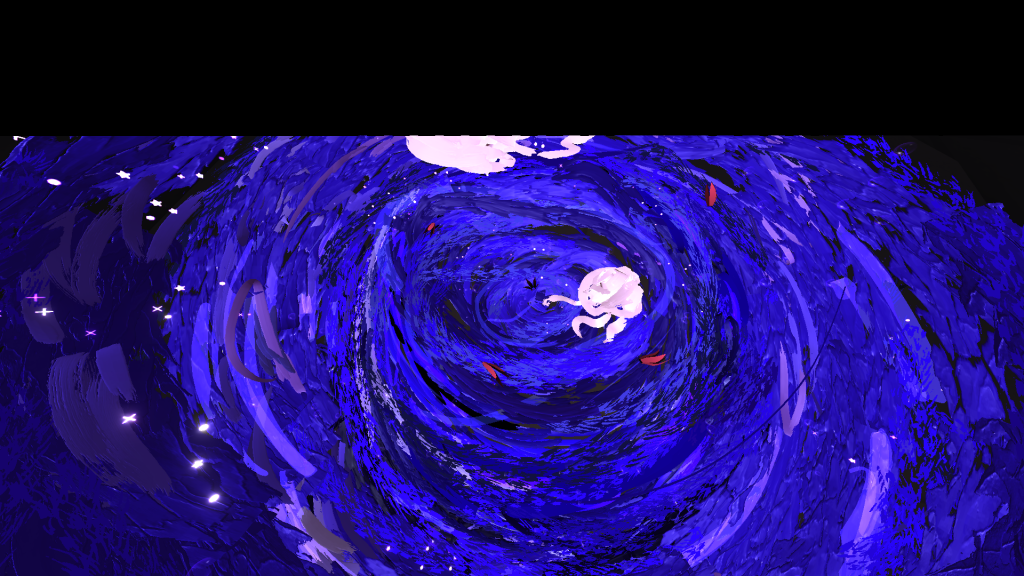

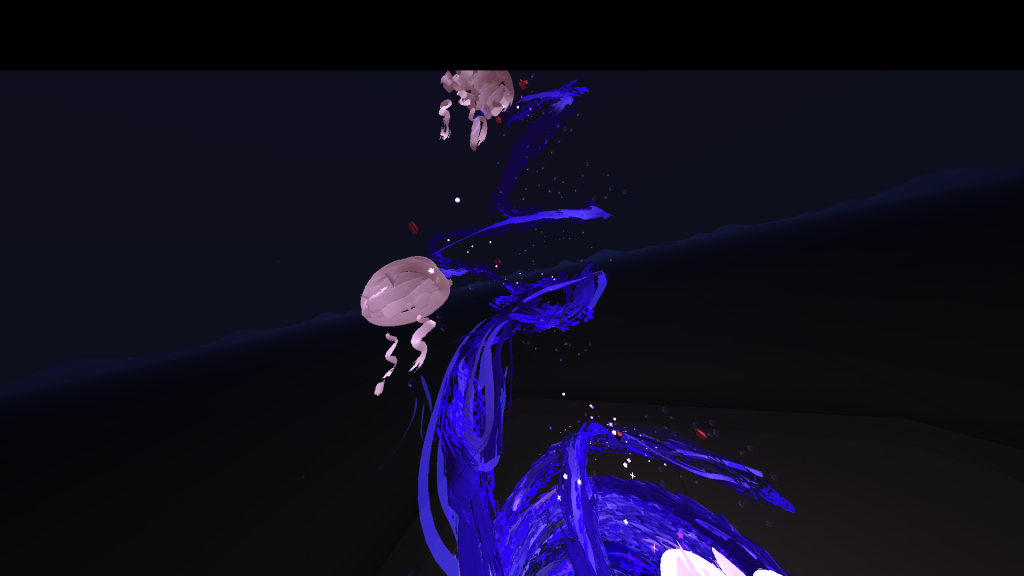

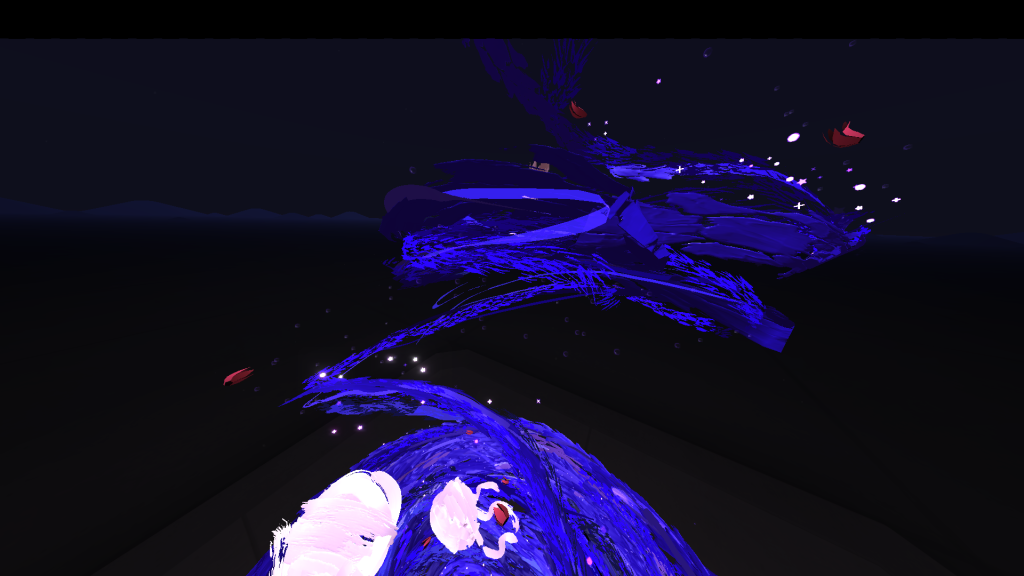

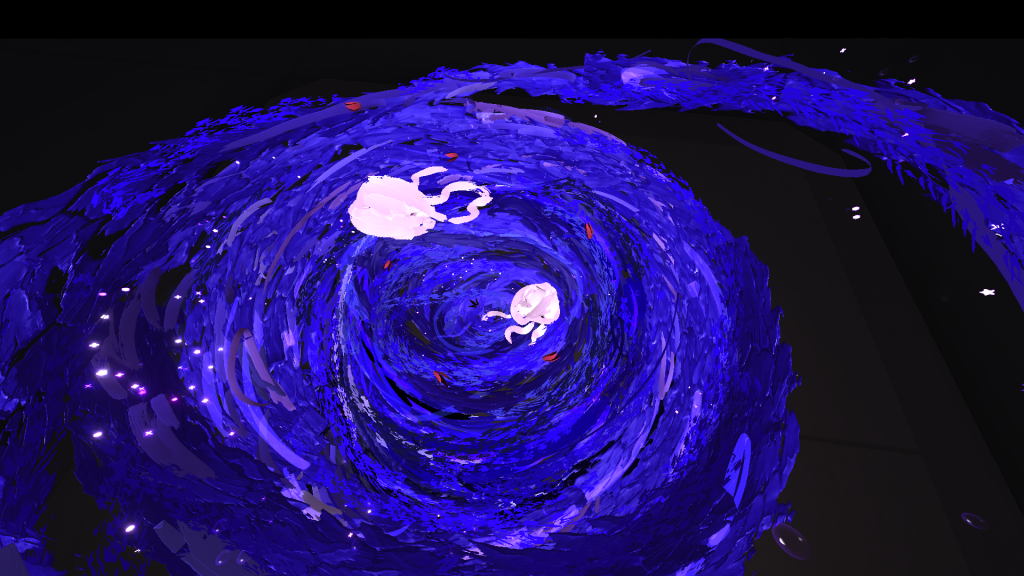

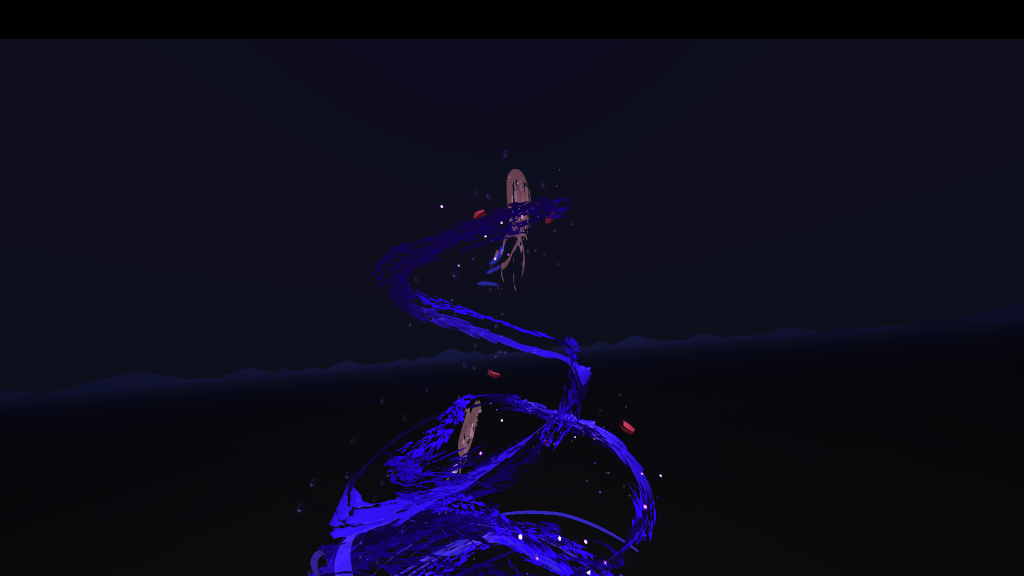

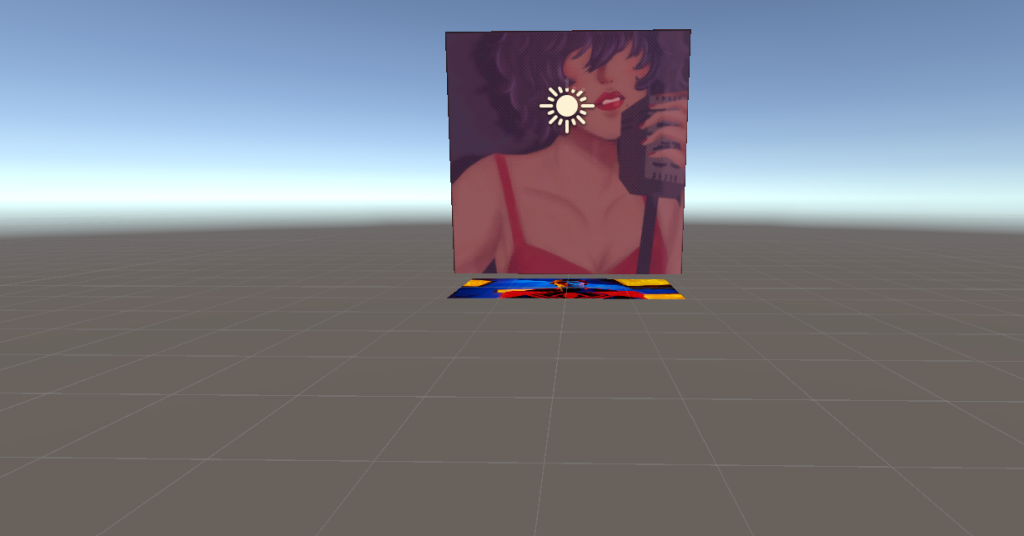

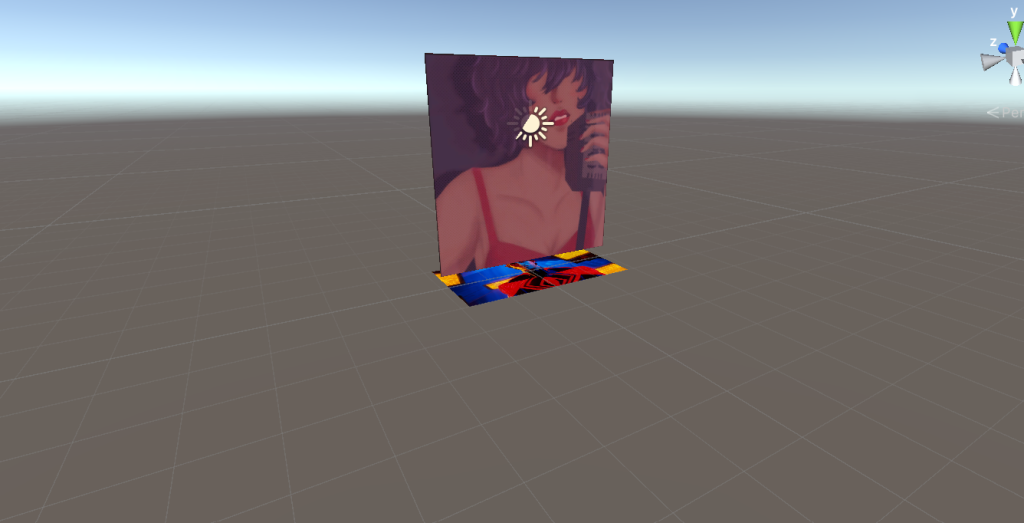

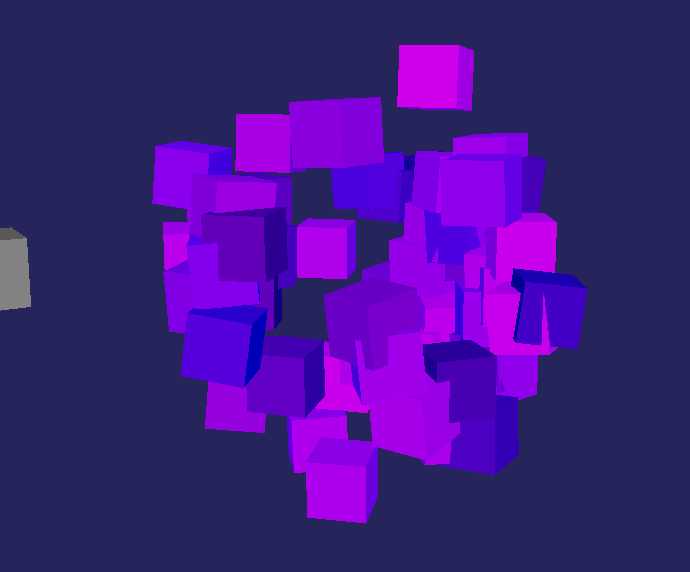

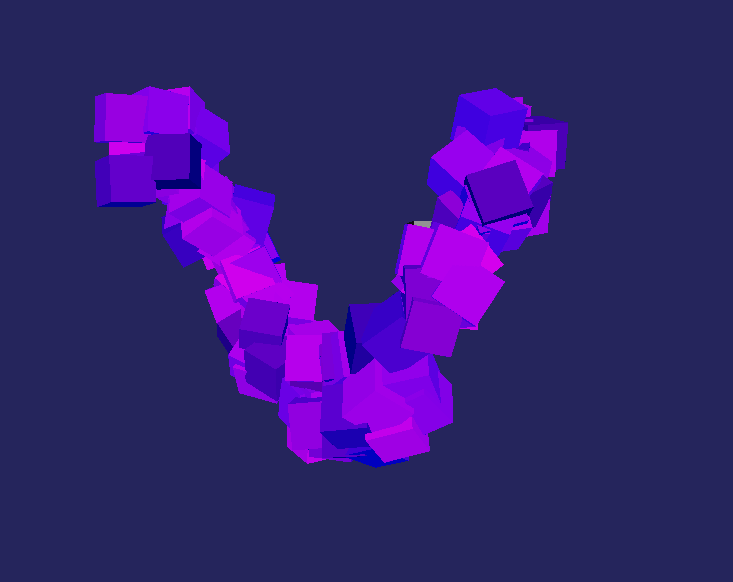

As shown here, I switched to the aiToon shader; these shots showcase the different environments with it applied. With this rendering method, there was also a slow, smooth transition into the darker areas of the district, which makes each area more recognizable and tense as the user walks through the empty streets, visually emphasizing the horror elements without making it disorientating for the user.

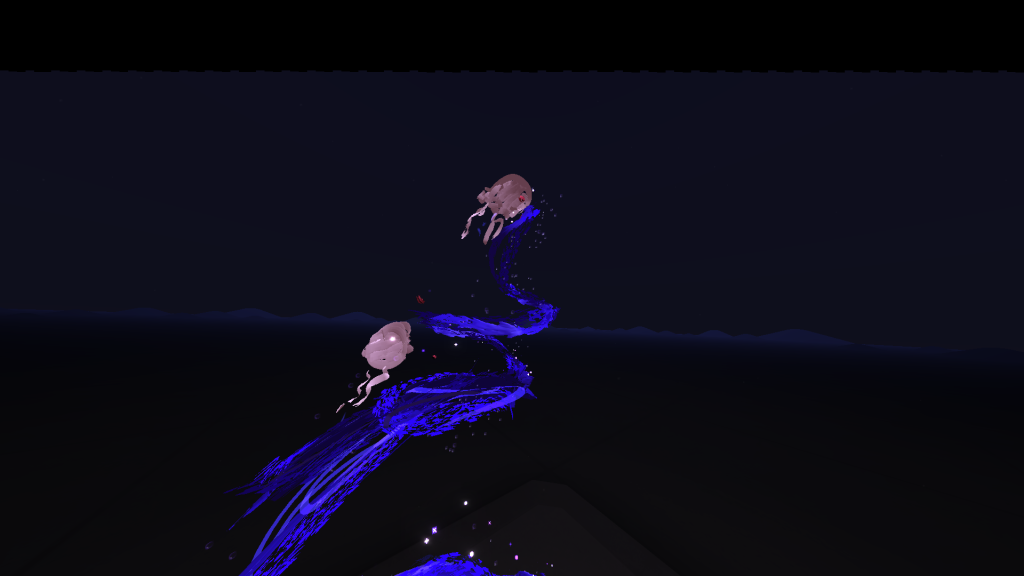

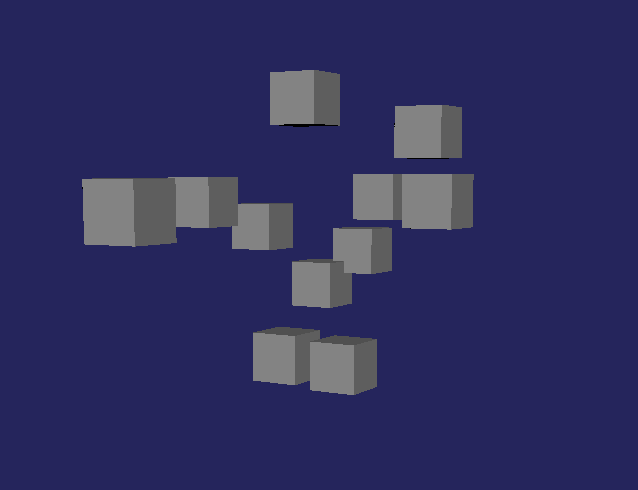

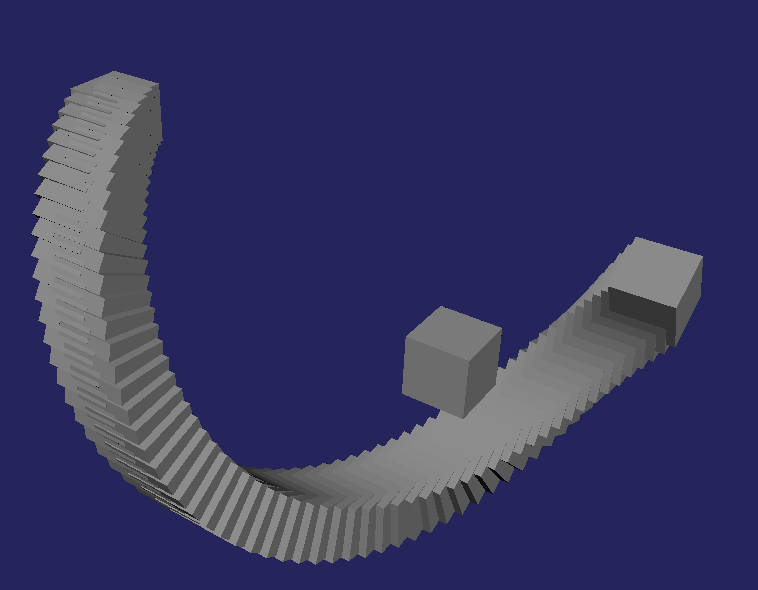

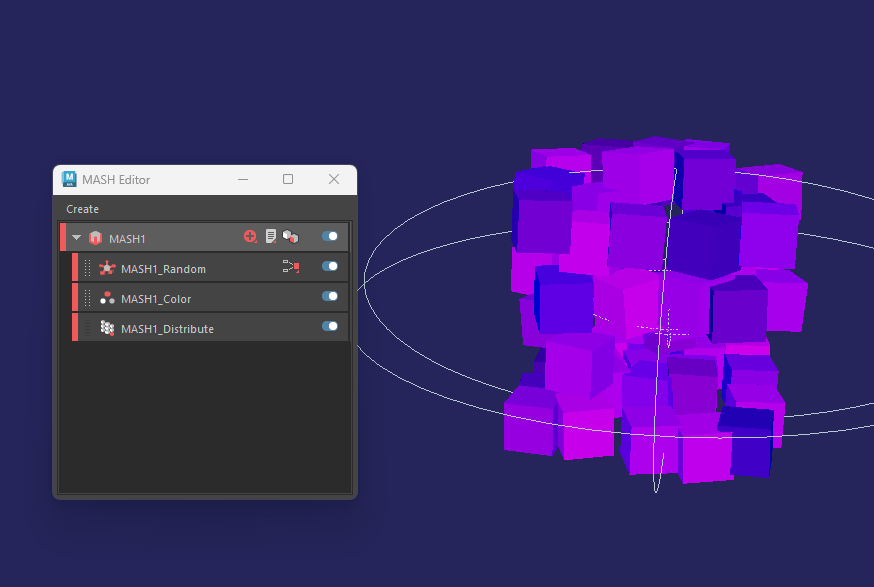

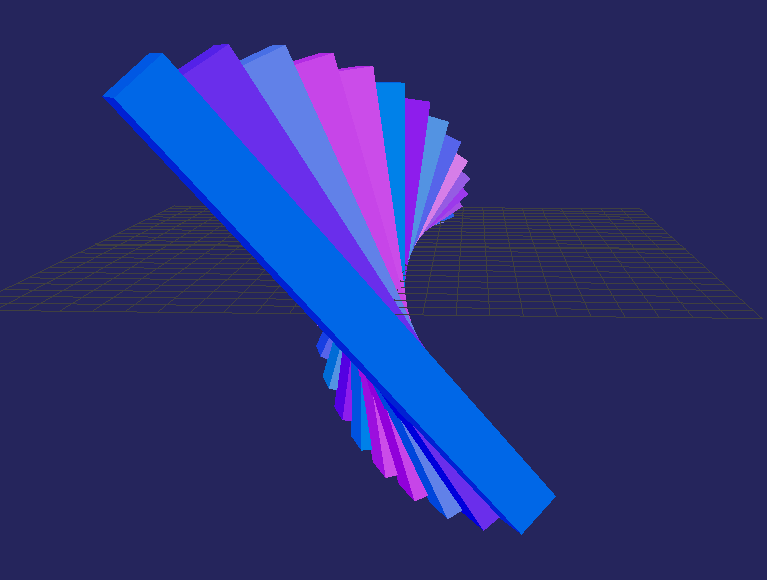

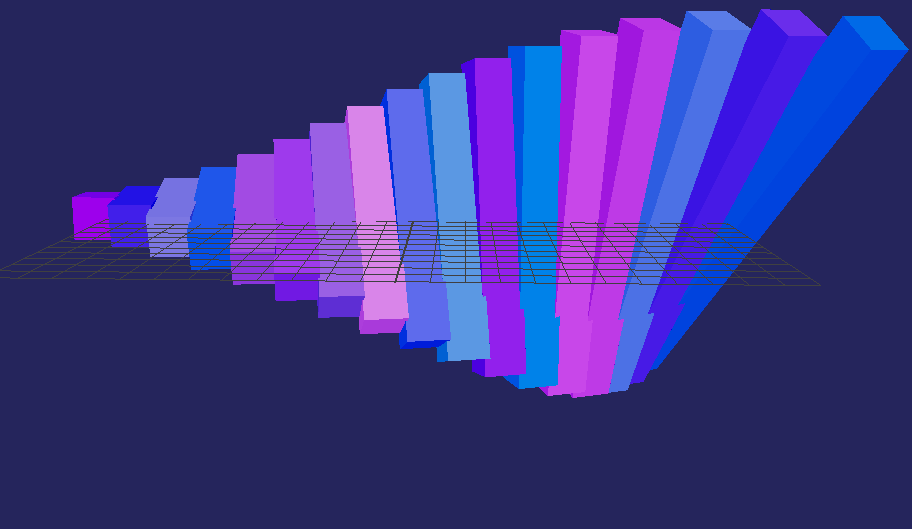

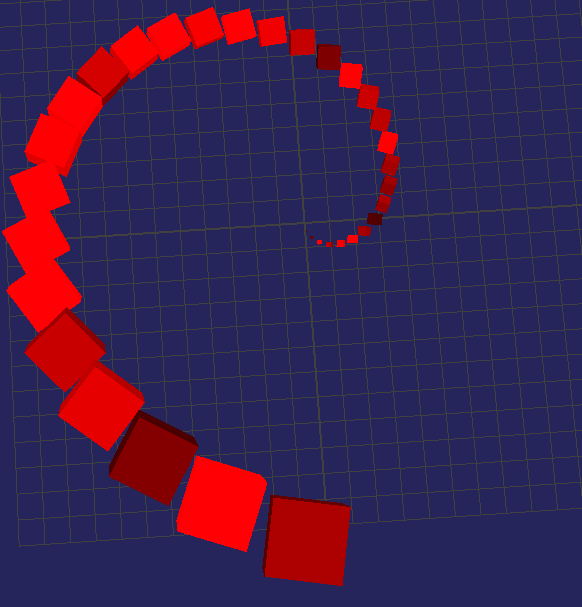

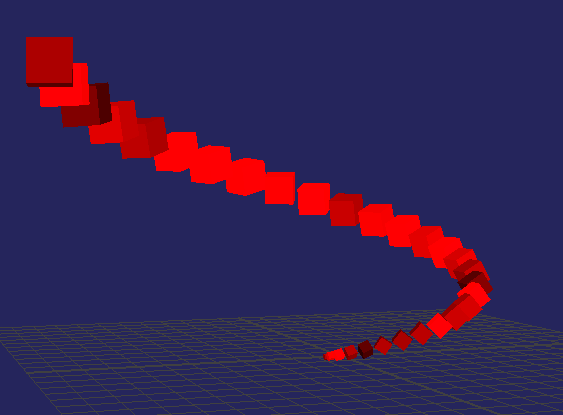

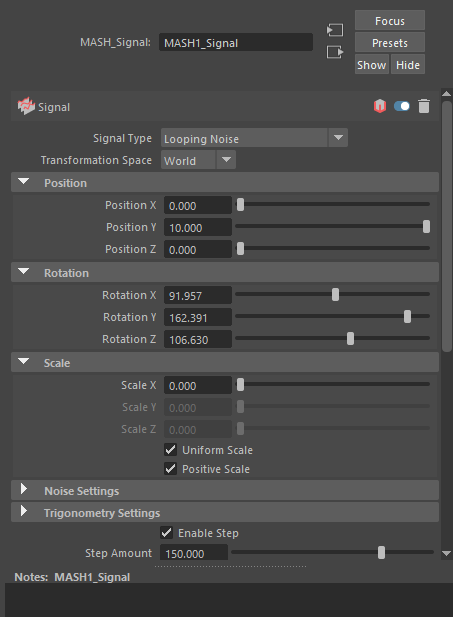

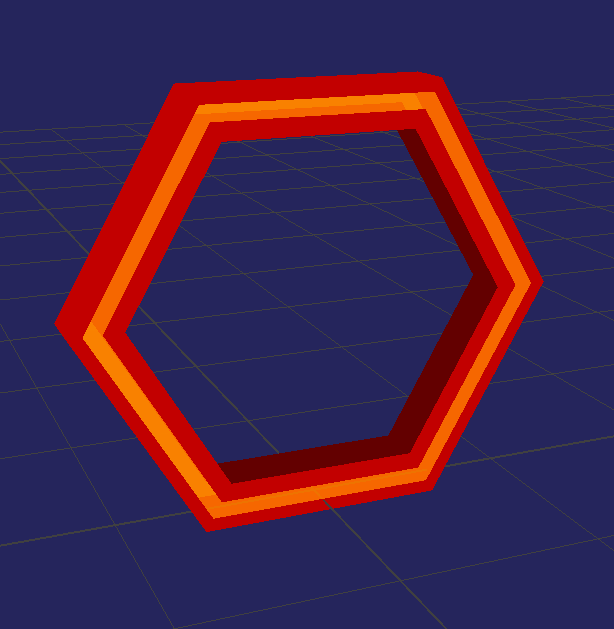

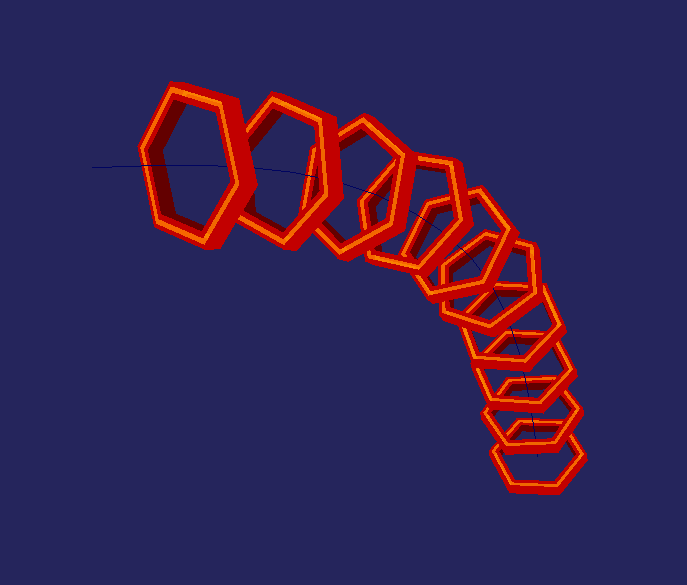

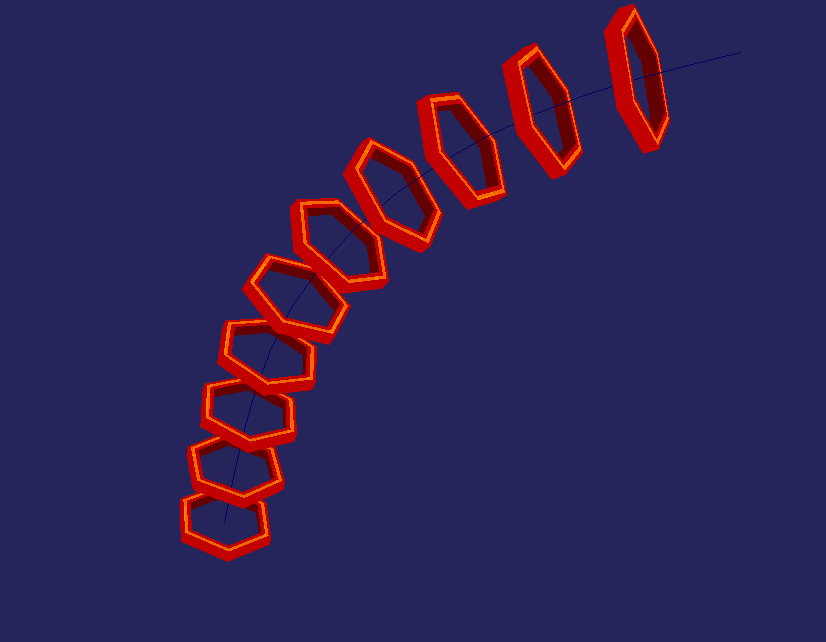

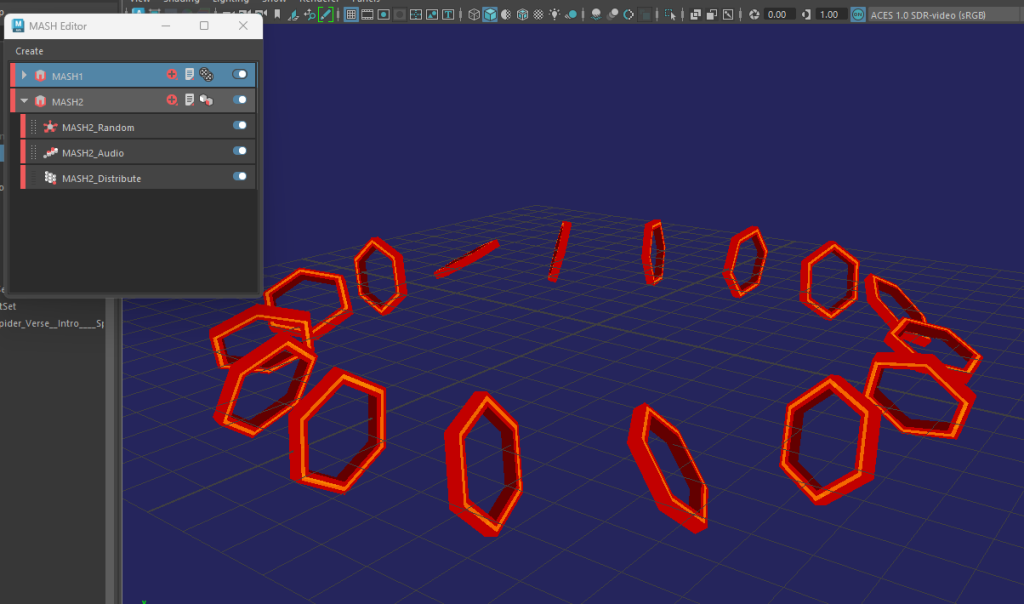

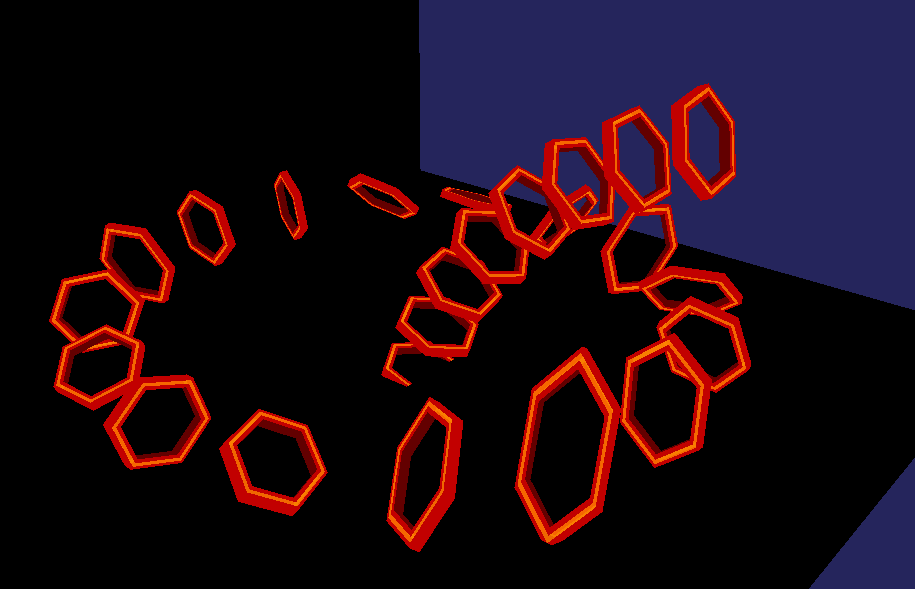

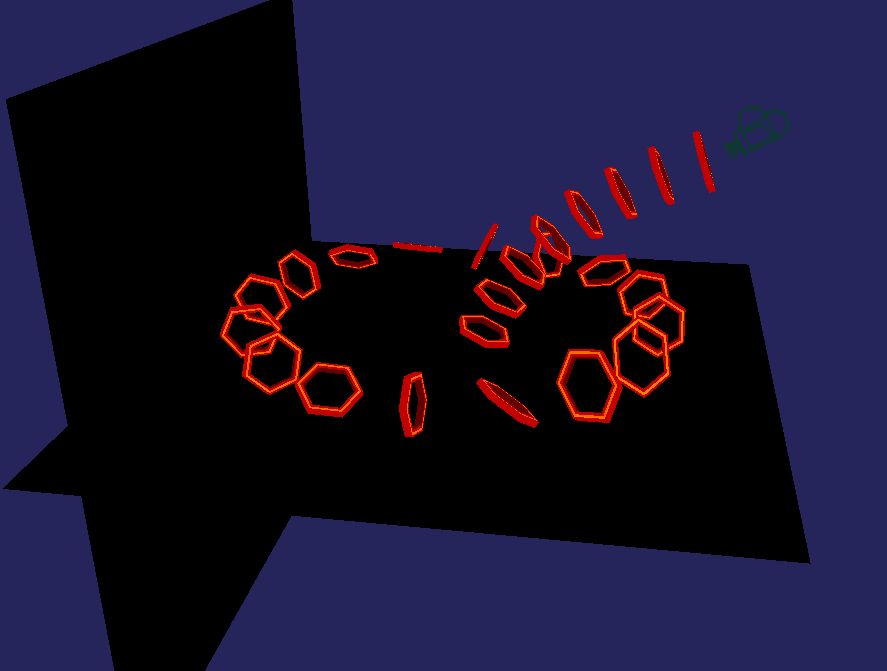

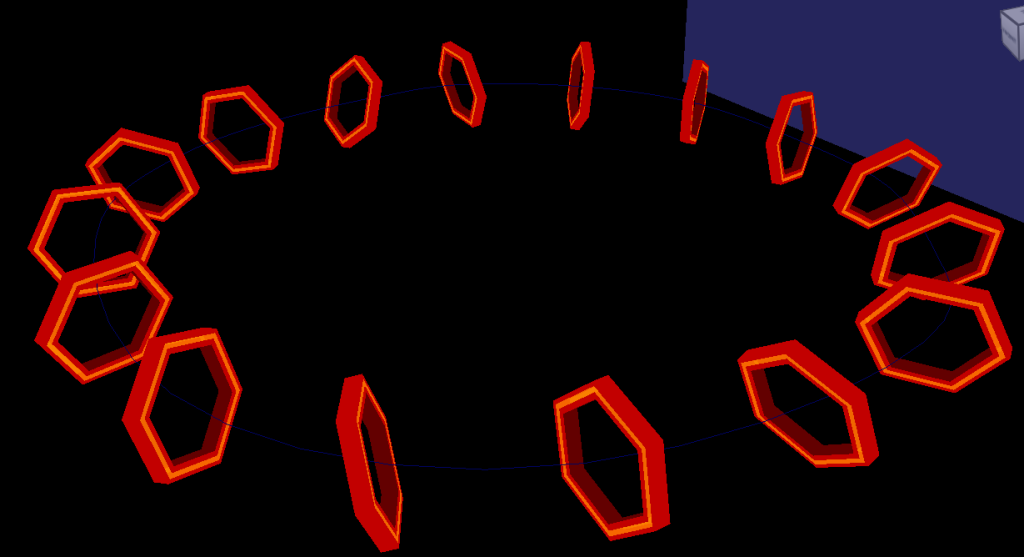

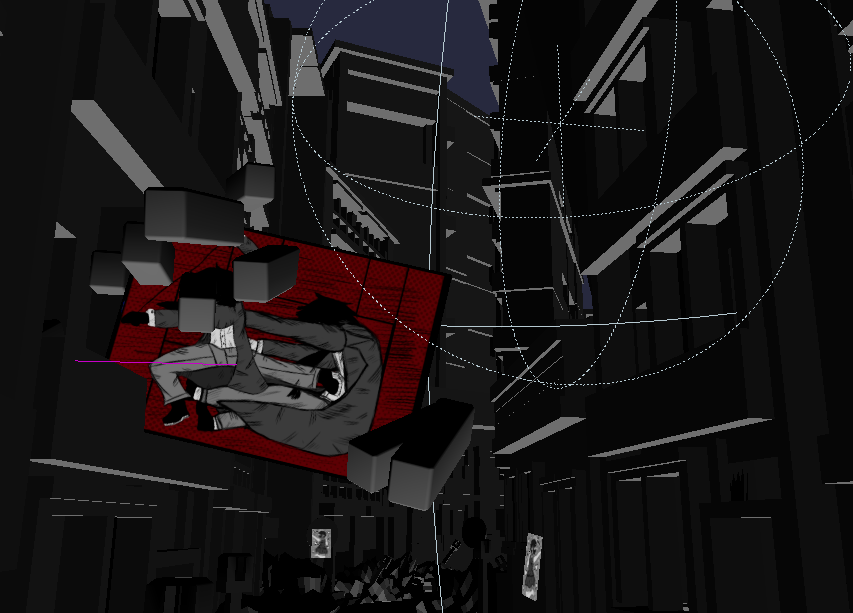

Before rendering and finalising the Maya scene, I added in the MASH networks. There are two different types: the wall destruction scene (which lasts for a couple of seconds before disappearing) and the Spider-Verse portals, inspired by the ones I created during my MASH network experimentation log ( https://samsudeen-2021.hulldesign.co.uk/2023/11/13/emerging-technologies-week-1-prototyping-your-immersive-experience-part-2/ ). The portals last a lot longer, depending on the scene they’re in.

Next, I added a spherical effect to the VR camera, allowing it to render the entire environment as a 360 view. This spherical effect is similar to the 360 images found on Google, producing flat image renders.
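To illustrate how a spherical camera can end up as a flat image render, here is a minimal Python sketch. It assumes the common equirectangular layout (longitude across the width, latitude down the height), which is an assumption for illustration rather than a setting confirmed in my Maya scene. The function name and the 4096 x 2048 frame size are hypothetical.

```python
import math

def direction_to_equirect(x, y, z, width=4096, height=2048):
    """Map a view direction to pixel coordinates in an equirectangular image.

    Assumes y is up and -z is straight ahead (Maya's default camera axes).
    The layout and frame size are illustrative assumptions.
    """
    length = math.sqrt(x * x + y * y + z * z)
    if length == 0:
        raise ValueError("direction must be non-zero")
    x, y, z = x / length, y / length, z / length

    longitude = math.atan2(x, -z)  # -pi..pi, 0 means straight ahead
    latitude = math.asin(y)        # -pi/2..pi/2, 0 means the horizon

    u = (longitude / (2 * math.pi) + 0.5) * width   # horizontal pixel position
    v = (0.5 - latitude / math.pi) * height         # vertical pixel position
    return u, v

# Looking straight ahead lands in the centre of the flat image.
print(direction_to_equirect(0, 0, -1))
```

Under this layout, anything directly ahead of the camera sits in the middle of the frame, while the areas behind the user are pushed to the left and right edges, which is why the raw renders look stretched until they are viewed in a 360 player.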

Finally, I rendered my environment in 10 second intervals using the Viper Render Farm. This was the hardest part of the project, mainly because the process took two full days without breaks. In total, there were 22 render outputs and, since Viper can be unpredictable, some renders had to be redone. I rendered 10 seconds per output so that I could calculate how long a set of outputs would take to render each day and account for any errors that could delay the process. This is why my Gantt chart dedicates two weeks to rendering in case any issues arose.
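The 10 second batching can be sketched in Python as follows. The frame rate of 24 fps and the function name are assumptions for illustration; only the 10 second interval and the total of 22 outputs come from the project itself.

```python
def split_into_batches(total_seconds, batch_seconds=10, fps=24):
    """Return inclusive, 1-based (start_frame, end_frame) pairs, one per render batch.

    fps=24 is an assumed frame rate, not a value taken from the project.
    """
    total_frames = total_seconds * fps
    batch_frames = batch_seconds * fps
    batches = []
    start = 1
    while start <= total_frames:
        # The last batch is clipped so it never runs past the final frame.
        end = min(start + batch_frames - 1, total_frames)
        batches.append((start, end))
        start = end + 1
    return batches

# 22 outputs of 10 seconds correspond to a 220 second sequence,
# assuming every output is a full 10 seconds long.
batches = split_into_batches(220)
print(len(batches), batches[0], batches[-1])
```

Keeping each batch the same length makes it easy to time one output and multiply up to estimate a day of rendering, and a failed output only costs one 10 second re-render rather than the whole sequence.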

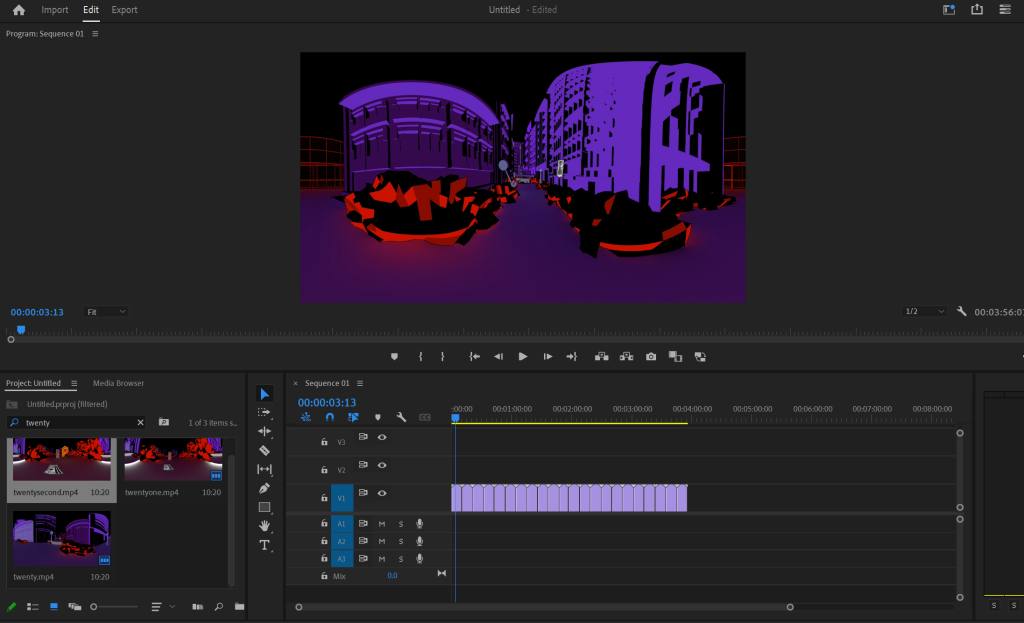

Editing – Premiere Pro

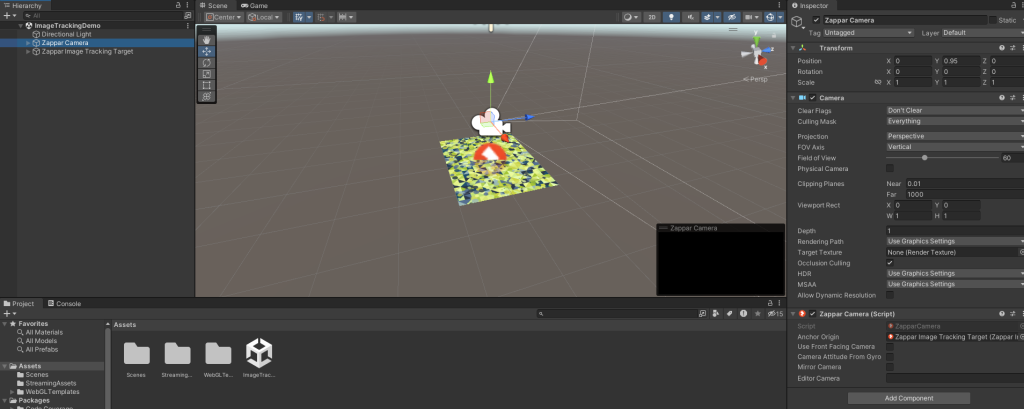

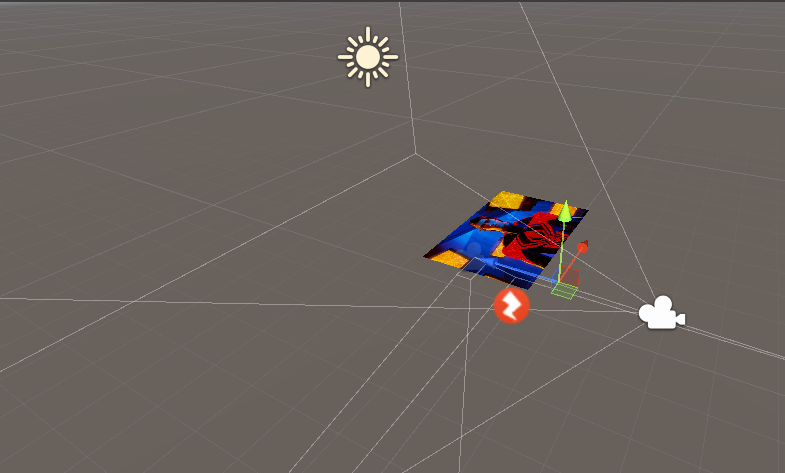

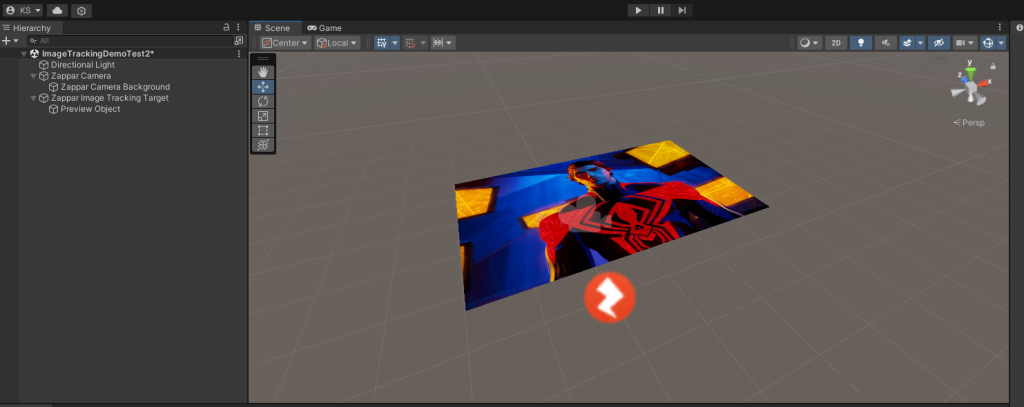

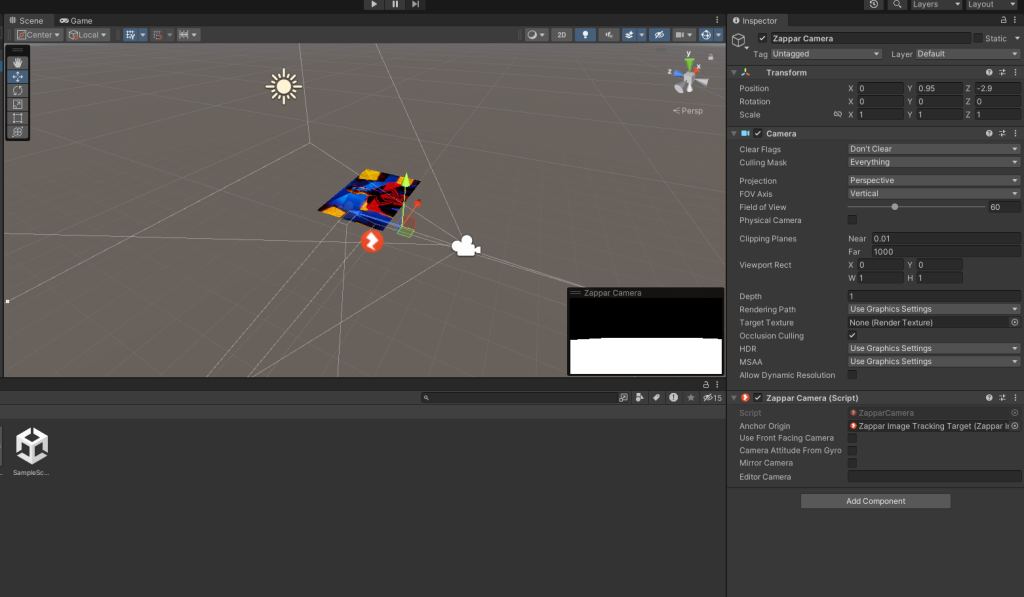

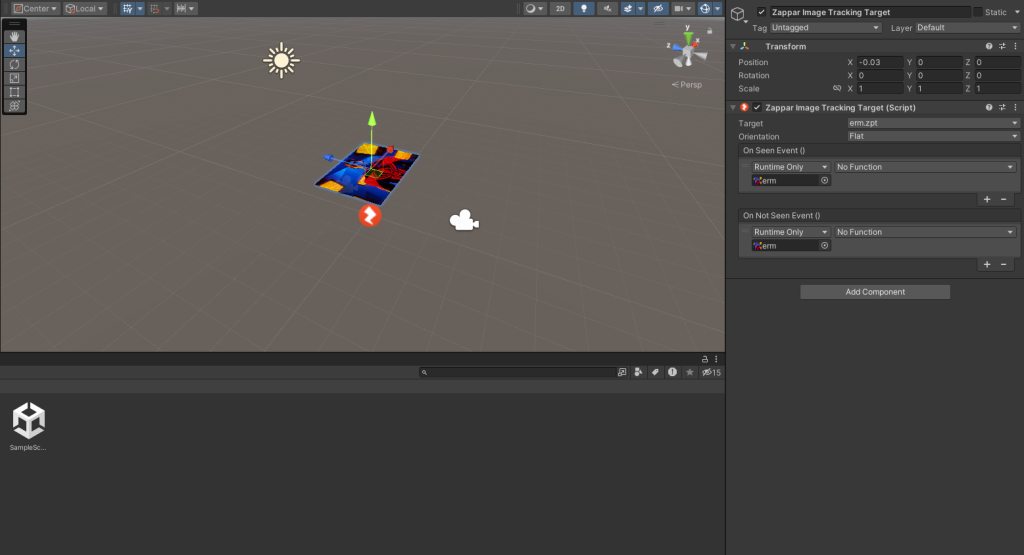

I used Premiere Pro and Adobe Audition for my editing, working on this alongside the rendering process. I tested my renders in Adobe After Effects to check that they worked in a VR space. I was also following a tutorial during this time.

This is how I knew it was safe to continue the rendering process with this spherical camera.

Using Premiere Pro was a lot easier than After Effects, mainly because of how simple it was to connect the clips together, making each transition seamless without tearing or visible cuts.

Due to time constraints, I wasn’t able to add the audio cues I had planned in the proposal. Instead, I compromised by adding some city ambience to make the city feel more immersive as the user walks through, editing the audio in Adobe Audition to keep the transitions seamless.

However, this also means that action scenes that would benefit from audio (such as crashes or portal sounds) are unfortunately silent, making these scenes less impactful. More detailed audio cues are something I’d like to implement in future iterations of this prototype.

Finally, I exported the sequence using Premiere Pro’s exporter, rendering the video at 12K before uploading it to YouTube, making it accessible to all users with or without a VR headset.

Ethics and Values

As discussed within my research proposal, a lot of my concerns and priorities lie in applying ethics and UX design to the art gallery. Here’s a comparative reflection between my proposal and the outcome of my project. I tested the project with another user, using a VR headset and YouTube’s 360 view, to make these observations and discuss potential improvements.

The main issue with this prototype is the camera work. It is a recurring issue throughout the ethics competencies, so within this reflection I’ll also discuss the benefits and flaws of the camera usage in my project.

Ergonomics – In my proposal, I discussed how the VR experience would be linear so that users could move through it at their own pace. My research and user feedback indicated that experiences with automatic movement cause disorientation and simulator sickness.

However, since I was creating the experience in Maya, I wasn’t able to add key areas for players to traverse manually. Instead, I added automatic movement, making the camera move slowly to avoid sudden movements for the user. Arguably, automatic movement also means that those with physical impairments can still move through the environment without excessive movement.

To avoid sensory overload, I tried to pick colour contrasts and soft lighting that were visually striking, applying colour theory techniques (split complementary, use of hues and lighting) to create an appealing, picturesque environment within a darker space. Since the VR experience is mainly accessed on YouTube, users can pause at any time to step out and take breaks, allowing them to experience the VR world at their own pace.
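As a rough illustration of the split complementary technique, here is a small Python sketch that builds a three-colour palette from a base hue. It assumes the common convention of taking the two hues 30 degrees either side of the direct complement; the function name, the default lightness and saturation, and the example hue of 300 degrees (a pink/magenta) are hypothetical and not the exact values used in my scene.

```python
import colorsys

def split_complementary(hue_degrees, lightness=0.5, saturation=0.8):
    """Return the base colour and its two split-complementary colours as 0-255 RGB tuples.

    The split complements sit 30 degrees either side of the direct
    complement, i.e. at +150 and +210 degrees from the base hue.
    """
    hues = [hue_degrees % 360, (hue_degrees + 150) % 360, (hue_degrees + 210) % 360]
    palette = []
    for hue in hues:
        # colorsys expects hue, lightness and saturation in the 0-1 range.
        r, g, b = colorsys.hls_to_rgb(hue / 360, lightness, saturation)
        palette.append((round(r * 255), round(g * 255), round(b * 255)))
    return palette

# Example: a palette built around a hot pink / magenta base hue.
for colour in split_complementary(300):
    print(colour)
```

Compared with a direct complementary pair, the two offset hues still contrast with the base colour but clash less, which suits a dark environment where only a few accents should stand out.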

Uploading the video to YouTube also makes it more accessible for users with or without VR headsets. However, for users with visual impairments or limited Wi-Fi speeds, YouTube’s compression makes the art pieces in particular a lot harder to see without 4K resolution, which, according to my research, is unfortunately only equivalent to 720p in a 360 video.

In the future, I’d like to experiment further with more detailed colour schemes to make the areas stand out and become more recognizable to the player beyond just different street layouts, perhaps using more colour combinations similar to the Maya Hardware renders I showed previously.

Another idea I’d like to consider for future iterations of this prototype would resolve two of the main issues: those affecting visually impaired users and the overall quality of the experience. This is to port the project into VR software such as Open Brush or FrameVR, allowing easier user movement whilst still keeping a high resolution for the art pieces.

Range of User’s View – Originally in my proposal, the plan was to limit the VR experience to a 200 degree view to prevent users from becoming lost within the environment. However, now with a 360 view, the world feels more immersive, allowing users to navigate their surroundings easily. Even if the experience is short, each area is different from the last, which keeps the user engaged with the environment and makes the areas more recognizable upon playback.

However, because of the camera movements, certain sections of the art gallery can feel restricting for the user’s field of view. This is mainly due to the camera moving to fixed points of each art piece rather than allowing the user to experience each scene for themselves.

User Interactivity – Due to time constraints, I was unfortunately unable to add audio cues to this prototype, instead adding some background ambience to make the interactive video feel more immersive.

Users have a 360 view of the VR experience, and some art pieces are animated to make this aspect of the gallery interactive, even in a small sense. The animation also extends to the Maya MASH networks as they interact with the panels. Whilst the user’s interaction is limited to watching the experience, it still serves its main objective of being an art gallery, allowing users to look at the different art pieces and how they blend into the world. It also successfully establishes the user’s role in this world: a simple traveller exploring an unfamiliar district.

However, I’d like to improve on the limited interactivity by adding elements I had left out previously, such as audio cues and possibly more subtle visual guides, for example experimenting more with the lighting to draw focus to particular pieces. If I were to port this project to different VR software, I’d also like to let users look at pieces up close rather than at a distance, to further add to the art gallery appeal.

Avoiding sudden elements and simulator sickness – Overall, as mentioned in the ergonomics section, camera movements were fairly slow throughout the video, usually keeping a consistent angle without sudden turns. But because the movements are restrictive, users who try to move their headset around during fixed scenes could experience some motion or simulator sickness and disorientation.

The reliance on these camera movements is due to a lack of testing, especially since rendering is a time-consuming process. However, certain camera movements can leave the player disorientated as to where their main focus is supposed to be; this is where audio cues would have been incredibly beneficial.

If I were to continue working in Maya for my future improvements, I would like to add audio cues and use less camera work to allow users more freedom of movement in their VR headset. I’d also research how 4D audio could potentially be applied for users viewing the art gallery without a headset.

Researching Emerging Trends

VR art galleries have been done before, mainly for selling art pieces as NFTs, which are unique identifiers that cannot be copied. Buying one is the equivalent of buying a unique piece that you own. Whilst there are benefits to owning these, such as having digital ownership or owning a piece of higher value, I’m not comfortable with the idea of NFTs. They are also known for harming the artist community, as artists can essentially lose ownership of their work, making NFTs easily exploitable for both artists and their consumers, since they are also heavily linked to the blockchain.

Another trend that I wanted to focus on more during the project, however, was immersive video. I used YouTube videos as inspiration for my research, specifically VR videos that showcased a unique 3D anime style in their production. For this, I looked at lepuha’s videos to see how I could apply camera work and storytelling to my own work.

Whilst the first one is a short horror experience set in a stationary vehicle, the ECHO 360 Music Video has a forward-moving camera that matches the intense tempo of the song. I liked both methods of camera movement. However, during my Maya production phase, I wanted to find a middle ground in order to keep user design in mind.

This is because, whilst the ECHO music video was an engaging experience, the camera movements quickly became disorientating and hard to follow as the video progressed. With the first video, I considered placing a stationary camera inside a vehicle to help the user travel around the street, but in Maya it would be difficult to set one up without further obscuring the user’s vision. I also realised that both videos use their environments, animation and camera work to keep their stories visually engaging.

So I used these inspirations to further my understanding of immersive video techniques. From a technical standpoint, I made sure that the camera moved at a slow, consistent rate overall, including slow turns to give the user time to adjust. From a creative viewpoint, I tried to make my art more recognisable in the user’s FOV, alongside adding small animations and darkening the lighting to visually evoke the horror tone of the production piece as the user delves into the video.

Reflection and Forward Thinking

In conclusion, this immersive 360 prototype helped me learn and understand how to develop an experience that could serve as a new and unique way to showcase my work as a narrative and creative designer. The main flaws of this project were a work schedule that was not flexible enough to account for potential delays, restrictive camera work, and YouTube’s compression of the rendered video.

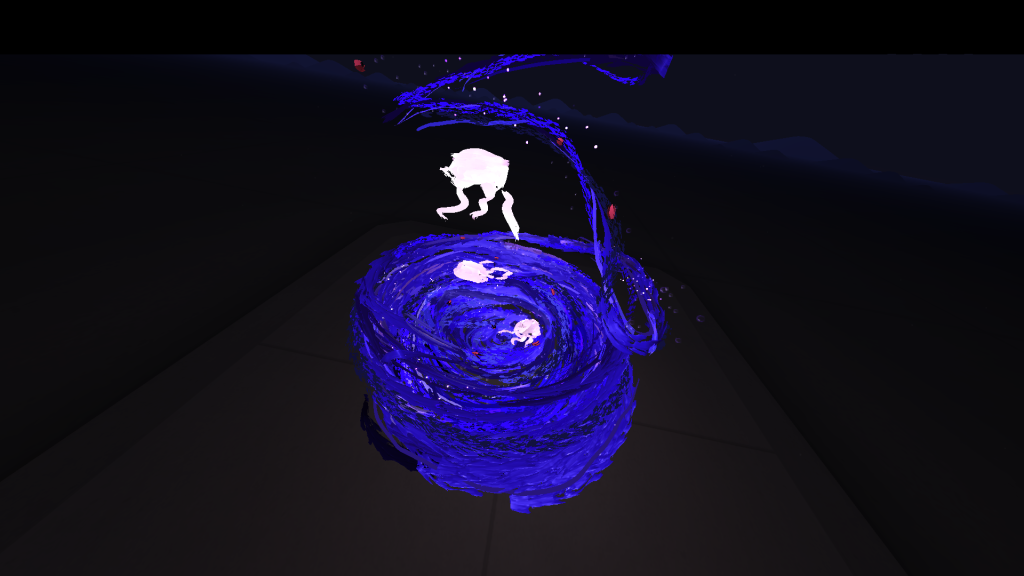

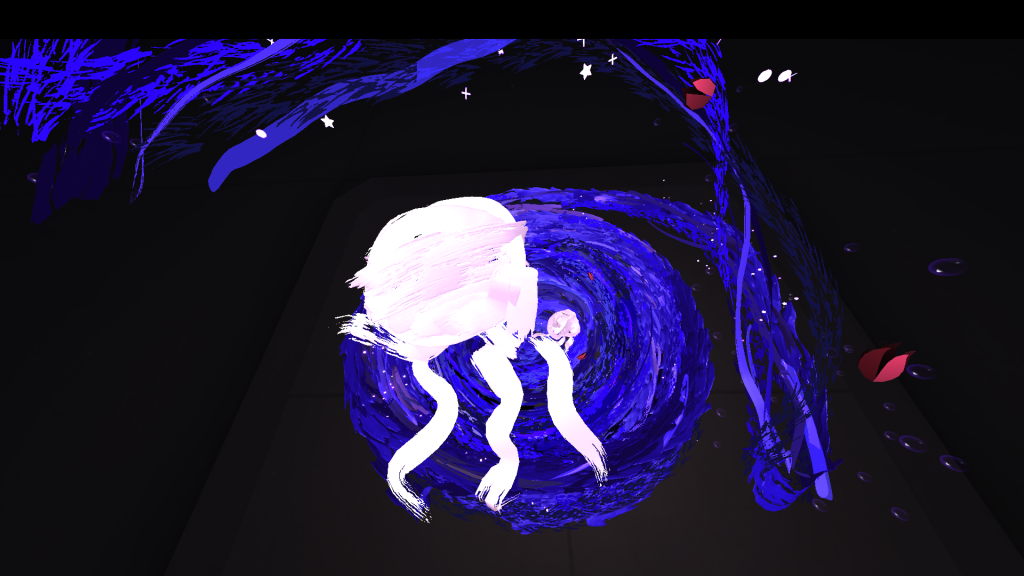

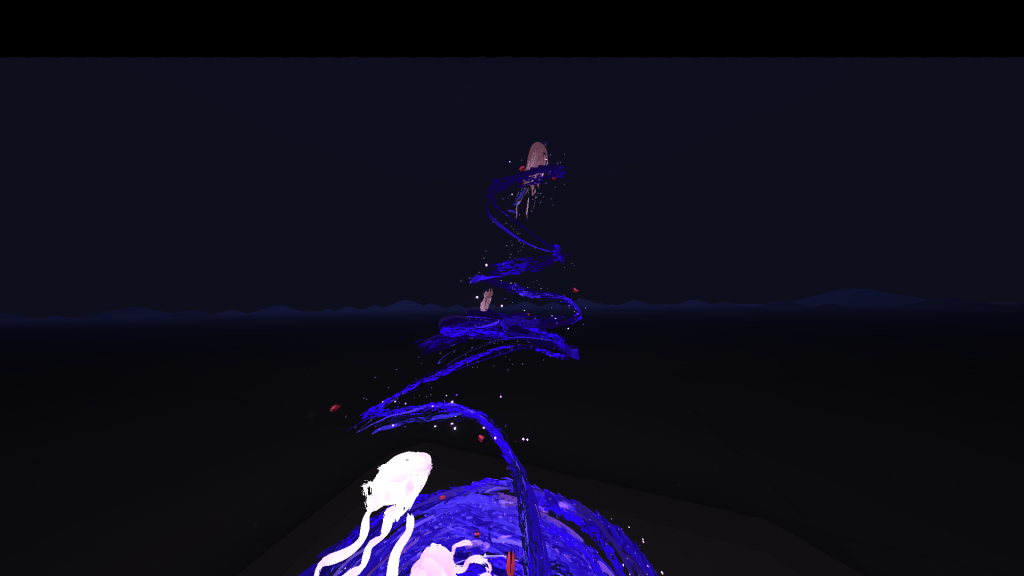

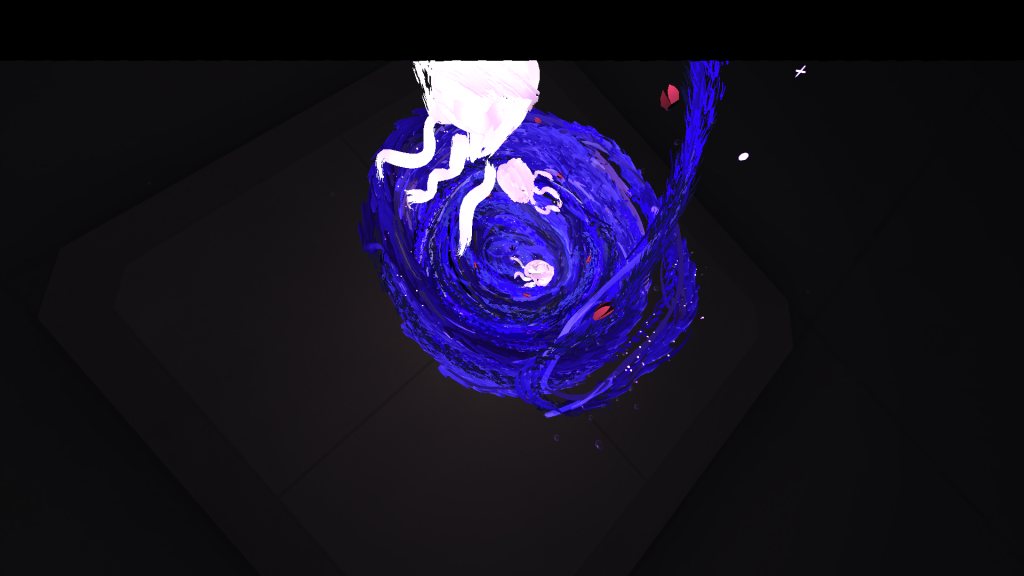

When considering further development of this project, Openbrush is the best software for showing examples of interactive art galleries, although not in a traditional sense. Its environments are often known for storytelling, using 2D and 3D elements to showcase an entirely new world, and this was what inspired me to try to make an interactive environment in a similar fashion to begin with.

The way Openbrush allows users to explore the environment and the art within it was the main inspiration for creating the city with my own art.

As shown in my experiments prior to my research proposal, I have used Openbrush in the past. I found the software intuitive, but making the prototype in Maya helped me understand the general layout and how beneficial my potential improvements could be if I were to work purely in a VR environment.

In the future, I’d like to export this project to Openbrush, or recreate it there, and continue working on the art gallery from that point. This would give me a better understanding of a VR environment and of how to communicate and showcase my artwork to users within a narrative workspace without relying on heavy camera work or fixed scenes.